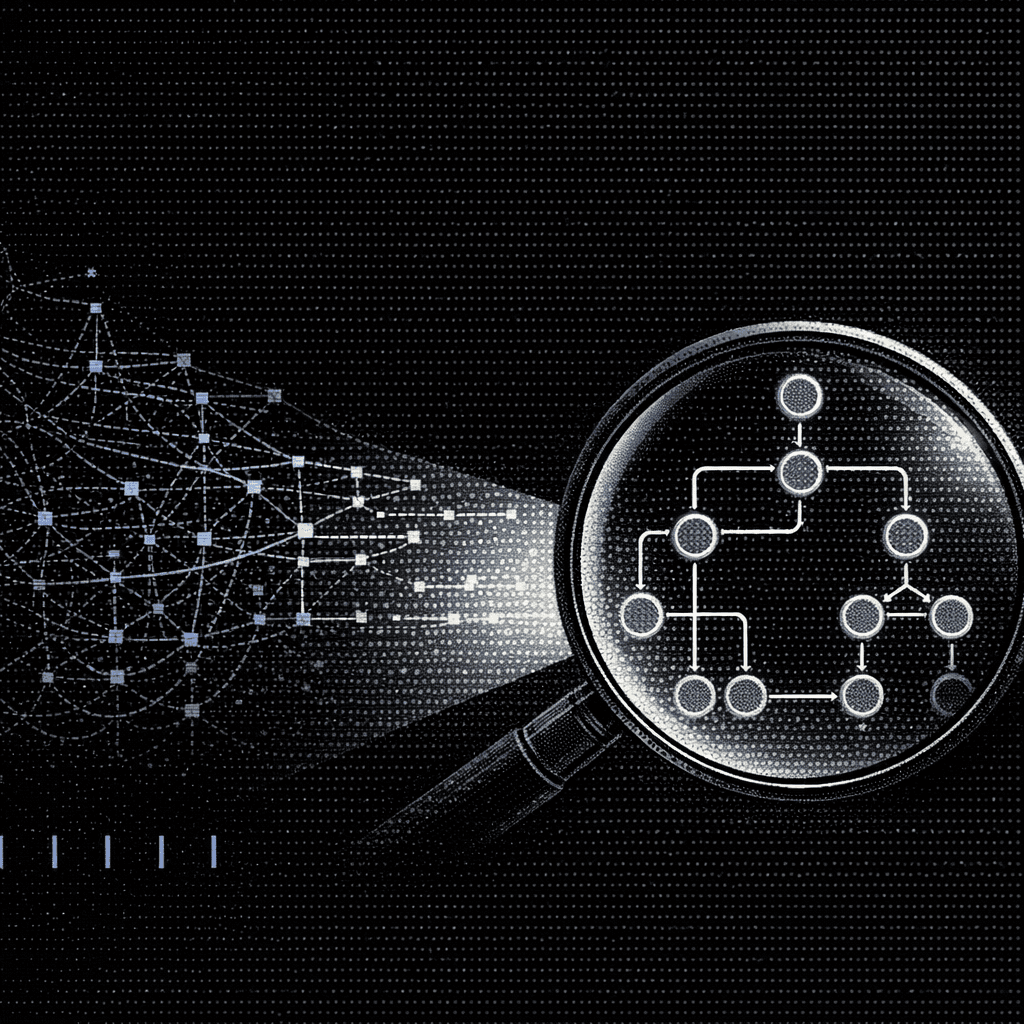

For enterprises evaluating AI code review tools for large codebases, the reality is blunt: when you're inspecting hundreds of thousands of lines of code, architectural-level visibility is not optional. Gartner predicts a staggering 2,500% rise in software defects by 2028 for organizations that jump from prompt to production without strong governance. The risk is sharpest for teams managing 500K+ line-of-code repositories, where most AI code review platforms simply can't ingest enough context. Mainstream assistants max out at context windows that cover barely 20% of a typical enterprise monorepo, so they miss the cross-service dependencies that spark outages. Today, only purpose-built solutions, such as Augment Code, openly claim the ability to analyze 400,000+ files through semantic analysis across multiple repositories.

TL;DR

Context-window ceilings cripple many AI code review tools for large codebases, stripping them of the architectural understanding that 500K+ LOC environments require. Platforms that parse entire repositories, such as Augment Code with its 400,000+ file capacity, outperform token-bound alternatives that miss dependencies, introduce security gaps, and ultimately create production risk.

The Enterprise AI Code Review Paradox

Engineering leaders chasing both speed and safety face an uncomfortable paradox. GitHub research shows AI tools can accelerate developer velocity by 25 to 55%, yet Stack Overflow's data suggests tasks can take 19% longer once engineers account for fixing "almost-right" AI-generated code. Without context, velocity becomes a vanity metric. Real success hinges on governance and architectural comprehension, capabilities most mainstream AI reviewers still lack.

Augment Code's Context Engine maps dependencies across 400,000+ files through semantic analysis, enabling architectural-level code review that identifies cross-service issues before they reach production.

Why Context Window Limits Become Enterprise Liabilities

Cursor, GitHub Copilot, and Amazon Q Developer each advertise different context window sizes, but none officially documents a limit large enough to hold a 500K+ LOC codebase in a single session. At that scale, the source code alone overflows a standard context window, leaving no room for commit history, documentation, or test suites. The result is missed dependencies, inconsistent style, and undetected security flaws. Understanding context window strategies is therefore critical for enterprise tool selection.
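
A quick back-of-envelope calculation shows why. The sketch below assumes an average of roughly 10 tokens per line of code and uses a few illustrative window sizes. Both figures are assumptions, not vendor specifications, so substitute counts measured with your own tokenizer and each vendor's current documentation.

```python
# Back-of-envelope: how much of a large repository fits in one context window?
# ASSUMPTION: ~10 tokens per line of code; real ratios vary by language and tokenizer.
TOKENS_PER_LINE = 10


def repo_tokens(lines_of_code: int, tokens_per_line: int = TOKENS_PER_LINE) -> int:
    """Estimate the token count of a codebase from its line count."""
    return lines_of_code * tokens_per_line


def window_coverage(lines_of_code: int, window_tokens: int) -> float:
    """Fraction of the codebase (0.0-1.0) that fits in a single context window."""
    return min(1.0, window_tokens / repo_tokens(lines_of_code))


if __name__ == "__main__":
    loc = 500_000
    # Illustrative window sizes only (ASSUMPTION); check each vendor's documentation.
    for window in (128_000, 200_000, 1_000_000):
        print(f"{window:>9,} tokens -> {window_coverage(loc, window):.1%} of {loc:,} LOC")
```

Under these assumptions, 500K LOC comes to about 5 million tokens. Even a 1M-token window would hold only about a fifth of the code, and that is before any commits, docs, or tests are added.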

Augment Code's Context Engine counters these limits by mapping dependencies across 400,000+ files, chunking semantically rather than by raw token count.
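
The following minimal sketch illustrates the difference between semantic chunking and token-count chunking. It uses Python's standard `ast` module to split a file at top-level function and class boundaries, so no definition is cut in half. This is a generic technique chosen for illustration, not Augment Code's implementation. The `token_chunks` baseline is a hypothetical naive splitter that cuts wherever its budget runs out.

```python
import ast


def semantic_chunks(source: str) -> list[tuple[str, str]]:
    """Split Python source into (name, code) chunks at top-level def/class boundaries.

    Each chunk is one complete function or class (including decorators), so a
    downstream reviewer never sees half a definition. Other top-level statements
    are skipped in this sketch.
    """
    tree = ast.parse(source)
    lines = source.splitlines()
    chunks = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            # lineno/end_lineno are 1-based and inclusive (end_lineno needs Python 3.8+).
            start = node.lineno - 1
            if node.decorator_list:
                start = node.decorator_list[0].lineno - 1
            chunks.append((node.name, "\n".join(lines[start:node.end_lineno])))
    return chunks


def token_chunks(source: str, max_tokens: int) -> list[str]:
    """Hypothetical naive baseline: fixed-size runs of whitespace-separated tokens."""
    words = source.split()
    return [" ".join(words[i:i + max_tokens]) for i in range(0, len(words), max_tokens)]


if __name__ == "__main__":
    sample = "def load(path):\n    return open(path).read()\n\nclass Cache:\n    def get(self, key):\n        return None\n"
    print([name for name, _ in semantic_chunks(sample)])
```

A production system would apply the same idea per language, typically with a multi-language parser, and would also record the references between chunks.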

How Context Window Size Dictates Accuracy in Massive Repositories

The following comparison highlights how leading AI code review tools handle enterprise-scale repositories. Each tool's context window directly impacts its ability to detect cross-service dependencies and maintain architectural consistency.

| Tool | Context Window | Enterprise Limitation |
|---|---|---|
| GitHub Copilot | Limited; exact size undocumented | Omits files over 1 MB; manual selection required |
| Amazon Q Developer | Varies by interface | Character cap blocks architectural analysis |
| Cursor | Large but capped | Indexes project structure automatically (no hand-written structure docs needed), but each request is still bounded by the capped window |
| Sourcegraph Cody | Limited user-defined context via @-mentions | Only 10 repos via @-mentions |

Token-bound tools keep some state within a session, but that state persists imperfectly across edits. No published Augment Code case study explicitly quantifies how often such tools miss dependencies that span multiple files or commits. Augment Code's semantic chunking links code changes to commit history to capture the rationale behind each modification.
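
One simple way to connect a change to its history is to index `git log` output by file. A reviewer, human or AI, can then see which earlier commits touched the same paths. The sketch below parses text produced by `git log --name-only --pretty=format:'@%H %s'`. The `@` header marker and the `history_by_file` name are illustrative choices, and this is not how Augment Code implements its history linking.

```python
from collections import defaultdict


def history_by_file(git_log_text: str) -> dict[str, list[tuple[str, str]]]:
    """Map each file path to the (commit_hash, subject) pairs that touched it.

    Expects text from:  git log --name-only --pretty=format:'@%H %s'
    Header lines start with '@'; non-blank lines after a header are file paths.
    Order follows git log (newest commit first by default).
    """
    history: dict[str, list[tuple[str, str]]] = defaultdict(list)
    current = None
    for raw in git_log_text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank separator lines are ignored
        if line.startswith("@"):
            commit_hash, _, subject = line[1:].partition(" ")
            current = (commit_hash, subject)
        elif current is not None:
            history[line].append(current)
    return dict(history)
```

To capture the input, you could run `subprocess.run(["git", "log", "--name-only", "--pretty=format:@%H %s"], capture_output=True, text=True).stdout` inside the repository.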

Monorepo vs. Multi-Repository Architecture

Repository architecture dictates which AI pitfalls you'll face. The challenges differ significantly between monorepos and distributed multi-repository setups, requiring different strategies for effective AI code review. Teams evaluating tools should consider architecture-based selection criteria before committing to a platform.

Monorepo-Specific Hurdles

Monorepos exhaust context windows quickly. Winning teams pair AI review with build-system optimizers, applying affected-path analysis and cloud caching to shrink review scope by 80%. Augment Code respects the module boundaries set by Nx, Bazel, or Turborepo, delivering focused reviews for only the impacted paths.
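
Affected-path analysis, the idea behind Nx's `affected` command or a Bazel `rdeps` query, amounts to walking the dependency graph backwards from the changed files. The sketch below is a simplified version of that technique. It assumes a hypothetical in-memory map of project roots and dependencies, plus a "longest matching directory prefix owns the file" rule. Real build systems compute both for you.

```python
from collections import deque
from typing import Iterable, Optional


def owning_project(path: str, project_roots: dict[str, str]) -> Optional[str]:
    """Return the project whose root directory is the longest prefix of `path`."""
    best, best_len = None, -1
    for project, root in project_roots.items():
        prefix = root.rstrip("/") + "/"
        if path.startswith(prefix) and len(prefix) > best_len:
            best, best_len = project, len(prefix)
    return best


def affected_projects(
    changed_files: Iterable[str],
    project_roots: dict[str, str],
    deps: dict[str, set[str]],  # hypothetical format: project -> projects it depends on
) -> set[str]:
    """Projects owning a changed file, plus everything that transitively depends on them."""
    # Invert the graph so we can ask "who depends on X?"
    dependents: dict[str, set[str]] = {p: set() for p in project_roots}
    for project, upstreams in deps.items():
        for upstream in upstreams:
            dependents.setdefault(upstream, set()).add(project)

    owners = (owning_project(f, project_roots) for f in changed_files)
    start = {p for p in owners if p is not None}
    affected, queue = set(start), deque(start)
    while queue:  # breadth-first walk over reverse dependencies
        for dependent in dependents.get(queue.popleft(), ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected
```

Only the projects in the returned set need review. How much this shrinks the scope depends on how changes and dependencies are distributed in your own graph.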

Multi-Repository Complexities

Distributed architectures spanning 50+ repos risk architectural drift. Humans can't mentally juggle every dependency, so AI tools must encode architecture into automated rules that enforce standards. Effective cross-repo dependency mapping becomes essential for maintaining consistency. Augment Code synchronizes microservice refactors, preventing drift through cross-repo coordination.
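
Encoding architecture as automated rules can start small. The sketch below checks a list of cross-repository dependencies against "forbidden edge" rules, such as a frontend repo depending directly on a database service. The repository names, the glob-style rule format, and the `Dependency` type are all hypothetical. In practice, the edges would be extracted from manifests, lockfiles, or API client definitions in each repo.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass(frozen=True)
class Dependency:
    source: str  # repo that depends on something, e.g. "web-frontend" (hypothetical)
    target: str  # repo or service it depends on, e.g. "orders-db" (hypothetical)


# Hypothetical architecture rules: (source glob, target glob, reason)
FORBIDDEN = [
    ("*-frontend", "*-db", "frontends must go through an API service"),
    ("billing-*", "legacy-*", "billing may not add new legacy dependencies"),
]


def violations(edges: list[Dependency], rules=FORBIDDEN) -> list[tuple[Dependency, str]]:
    """Return (dependency, reason) for every edge that matches a forbidden rule."""
    found = []
    for edge in edges:
        for src_pat, dst_pat, reason in rules:
            if fnmatch(edge.source, src_pat) and fnmatch(edge.target, dst_pat):
                found.append((edge, reason))
    return found
```

Running a check like this in CI for every repo turns the architecture diagram into an enforced contract instead of something each reviewer must remember.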

Enterprise Evaluation Framework for AI Code Review Tools

Speed metrics alone deceive. Gartner warns that generative AI is no cure-all for legacy modernization. Enterprise buyers should prioritize governance strength and architectural intelligence. A robust AI code governance framework provides the foundation for sustainable adoption.

Use DORA Metrics as Your North Star

Measure deployment frequency, lead time (including rework), change-failure rate (tracked separately for AI-generated and human-written code), and time to restore service. The DORA research program publishes industry-standard benchmarks for these metrics.
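
To show what "separating AI-generated vs. human code" can look like in practice, the sketch below computes change-failure rate and median lead time per origin from deployment records. The `Change` record and its `origin` tag are hypothetical. How a change gets marked as AI-generated (commit trailers, PR labels, tool telemetry) is an organizational decision.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Change:
    origin: str           # "ai" or "human" (hypothetical tagging scheme)
    committed: datetime   # first commit of the change
    deployed: datetime    # when it reached production, so rework is included
    caused_failure: bool  # did this deployment lead to an incident or rollback?


def dora_by_origin(changes: list[Change]) -> dict[str, dict[str, object]]:
    """Return {origin: {"change_failure_rate": float, "median_lead_time": timedelta}}."""
    result = {}
    for origin in sorted({c.origin for c in changes}):
        group = [c for c in changes if c.origin == origin]
        failures = sum(c.caused_failure for c in group)
        result[origin] = {
            "change_failure_rate": failures / len(group),
            "median_lead_time": median(c.deployed - c.committed for c in group),
        }
    return result
```

Deployment frequency and time to restore service can be added the same way from deployment and incident timestamps.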

Verify Security Certifications

Security certifications signal whether a vendor meets enterprise compliance requirements. The following table compares certification status across major AI code review platforms. Organizations in regulated industries should prioritize tools that hold the certifications their compliance requirements demand, with SOC 2 Type II as a baseline.

| Certification | GitHub Copilot | Tabnine | Augment Code |
|---|---|---|---|
| SOC 2 Type II | Type I and Type II | Not officially SOC 2-certified in available primary sources | SOC 2 Type II certified (Coalfire) |
| ISO/IEC 42001 | Not certified | Not certified | Industry first (August 2025) |

Augment Code's enterprise security certifications include SOC 2 Type II and ISO/IEC 42001, providing the compliance foundation required for regulated industries.

Implementation Workflow: Rolling Out AI Code Review Tools for Large Codebases

Successful enterprise rollouts follow a phased approach that establishes governance before scaling adoption. The following workflow balances risk mitigation with velocity gains.

Phase 1: Establish Baselines and Governance

Before introducing AI code review, teams must understand their current performance and formalize the rules that will govern AI-assisted workflows.

- Capture current DORA metrics

- Catalog technical debt and formalize governance

- Define security thresholds with SOC 2 Type II as the minimum
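
The "SOC 2 Type II as the minimum" threshold can be encoded as a screening step in vendor evaluation. The following minimal sketch uses placeholder vendor names and certification sets. Actual certification status should come from each vendor's current attestation reports, not from a script.

```python
# Minimum certifications every candidate tool must hold (the Phase 1 threshold).
REQUIRED = {"SOC 2 Type II"}
# Nice-to-have certifications that raise a vendor's ranking (assumed policy).
PREFERRED = {"ISO/IEC 42001"}


def screen_vendors(vendors: dict[str, set[str]]) -> tuple[list[str], list[tuple[str, list[str]]]]:
    """Split vendors into (eligible, rejected).

    eligible: vendor names, those holding more PREFERRED certs first.
    rejected: (vendor name, sorted list of missing REQUIRED certs).
    """
    eligible, rejected = [], []
    for name, certs in vendors.items():
        missing = REQUIRED - certs
        if missing:
            rejected.append((name, sorted(missing)))
        else:
            eligible.append((name, len(PREFERRED & certs)))
    eligible.sort(key=lambda item: item[1], reverse=True)  # stable for ties
    return [name for name, _ in eligible], rejected
```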

Phase 2: Pilot with Strict Quality Gates

A controlled pilot reveals how AI code review performs against real enterprise complexity without risking production stability.

- Select 1 to 2 teams representing legacy complexity

- Test real cross-repo scenarios

- Track AI vs. human code outcomes independently
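
A "strict quality gate" for the pilot can be expressed as a merge check. This sketch assumes a hypothetical `PullRequest` record. The rules shown are example policies to adapt, not an industry standard: AI-generated changes need at least one human approval, and changes spanning several repos need a passing integration run.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:  # hypothetical record assembled from your VCS and CI data
    ai_generated: bool
    tests_passed: bool
    human_approvals: int
    repos_touched: set[str] = field(default_factory=set)
    integration_tests_passed: bool = False


def gate(pr: PullRequest) -> list[str]:
    """Return the reasons a PR is blocked; an empty list means it may merge."""
    reasons = []
    if not pr.tests_passed:
        reasons.append("unit tests failing")
    if pr.ai_generated and pr.human_approvals < 1:
        reasons.append("AI-generated change needs at least one human approval")
    if len(pr.repos_touched) > 1 and not pr.integration_tests_passed:
        reasons.append("cross-repo change needs a passing integration run")
    return reasons
```

Logging each gate result together with the PR's `ai_generated` flag keeps the pilot's AI and human outcomes separable for later analysis.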

Phase 3: Scale Systematically

Only after validating pilot results should organizations expand AI code review adoption across the engineering organization.

- Target 50% or greater adoption before declaring success

- Monitor DORA trends and business KPIs

- Build an AI-aware security infrastructure in parallel
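
The Phase 3 criteria can be combined into a single readiness check. The sketch below treats "success" as reaching the 50% adoption target without the AI change-failure rate drifting above the Phase 1 baseline. The `tolerance` parameter and the input values are illustrative assumptions, and business KPIs would need their own checks.

```python
def ready_to_declare_success(
    active_ai_users: int,
    total_engineers: int,
    baseline_cfr: float,
    current_ai_cfr: float,
    adoption_target: float = 0.5,
    tolerance: float = 0.0,
) -> bool:
    """True when adoption meets the target and AI change-failure rate has not regressed.

    baseline_cfr: change-failure rate captured in Phase 1 (0.0-1.0).
    current_ai_cfr: current change-failure rate of AI-generated changes.
    tolerance: allowed regression above baseline (hypothetical policy knob).
    """
    if total_engineers <= 0:
        raise ValueError("total_engineers must be positive")
    adoption = active_ai_users / total_engineers
    return adoption >= adoption_target and current_ai_cfr <= baseline_cfr + tolerance
```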

What to Do Next

Gartner's forecast of a 2,500% defect surge should alarm every engineering leader. Choose AI code review tools for large codebases that excel in governance, cross-repo coordination, and architectural insight rather than tools that merely advertise inflated context windows. Augment Code reports a 59% F-score and 70.6% SWE-bench accuracy for its Context Engine, which processes 400,000+ files while maintaining SOC 2 Type II and ISO/IEC 42001 compliance. Run your own benchmarks before committing to any platform, but don't wait until that defect surge hits your production systems.

Written by

Molisha Shah

GTM and Customer Champion