TL;DR

Turn the Auggie CLI into a PR review bot to catch obvious issues automatically, reduce reviewer idle time across time zones, and stop defects that creep in through large, rubber-stamped PRs. Start small, iterate rules like code, measure an effective rate, and scale.

The Problem

Code review is a bottleneck for at least three related reasons:

- Noisy & inconsistent reviews. Reviewers spend cycles on repetitive checks (style, trivial bugs, obvious anti-patterns) instead of high-value architectural feedback.

- Geography & dead time. When authors and reviewers live in different time zones (e.g., California ↔ Australia), the wait for first human feedback leaves long idle stretches that delay the pipeline and break context continuity.

- Large-PR rubber-stamping. Under time pressure, reviewers skim large PRs and rubber-stamp them, letting issues slip into production.

These create frustration for engineers, stress for reviewers, and cost for the business. An automated first pass removes low-variance noise so humans can focus on the hard stuff.

Why it Matters

Ask engineers which part of their job they dread most, or ask project managers what causes schedules to slip, and you’ll get the same answer: code review. The experience leaves a lot to be desired. A fast automated first pass helps each group:

- Engineers: fewer reverts, less context switching, more uninterrupted flow to build.

- Reviewers / tech leads: less cognitive load, clearer signal for tough decisions.

- The business: faster delivery and lower cost of defects (bugs found later cost more).

The goal: restore developer flow and reviewer trust by giving teams a fast, consistent, low-friction first pass that shortens feedback loops (especially across time zones) and reduces risk to production.

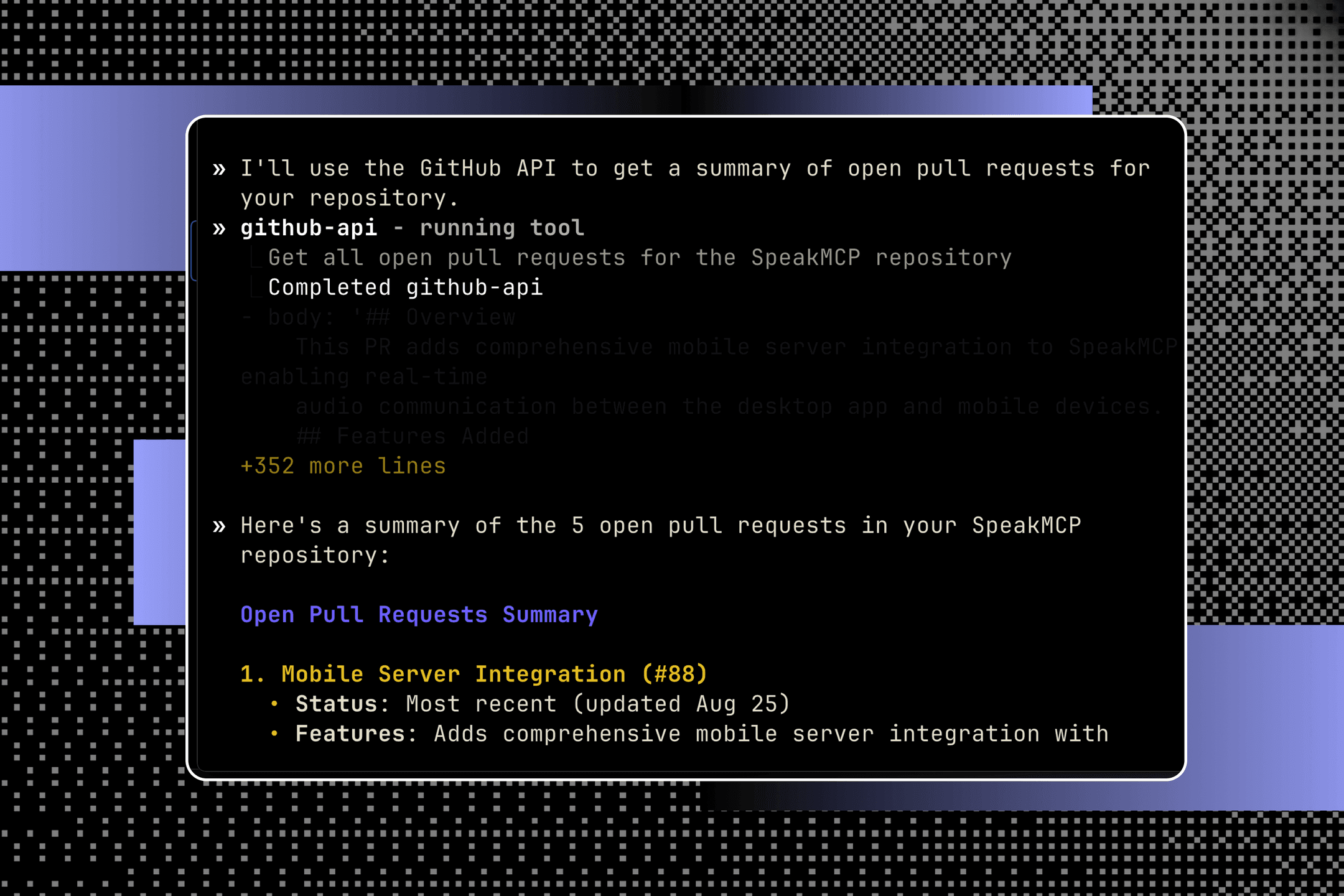

How it Works

Getting started is simple. Auggie, our CLI, can be installed via npm. With the --print flag, Auggie runs the prompt and exits; running auggie with no options opens the interactive client instead.

- Install the Augment CLI Agent:

npm install -g @augmentcode/auggie

- Run it against a PR:

auggie --print "Review this PR: ${link}"
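If you would rather drive the CLI from a script, the same call can be wrapped in a few lines of Python. This is a minimal sketch, not an official client: the function names `build_review_command` and `review_pr` are made up for illustration, and the only flag used is the --print flag described above.

```python
import subprocess
from typing import List


def build_review_command(pr_link: str) -> List[str]:
    """Build the argv for a one-shot review (hypothetical helper)."""
    # --print makes Auggie run the prompt and exit instead of opening
    # the interactive client.
    return ["auggie", "--print", f"Review this PR: {pr_link}"]


def review_pr(pr_link: str) -> str:
    """Run the review and return whatever the CLI wrote to stdout."""
    result = subprocess.run(
        build_review_command(pr_link),
        capture_output=True,
        text=True,
        check=True,  # raise if the CLI exits with a non-zero status
    )
    return result.stdout
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems when the PR link or prompt contains spaces or special characters.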

From there, success comes down to what you ask and what rules you apply — which is where prompting and rules come in.

Prompting and Rules

Augment’s Context Engine gives the Agent deep knowledge of the codebase, but it still helps to prompt the Agent well in the first place.

The good news is that our Prompt Enhancer can turn a poor prompt into a great one. Better still, you get to see what a stronger prompt looks like, which helps you iterate, and you get clarity on the model's understanding in advance.

Here’s a starting point / template you can use to get going:

### Project-Specific Requirements:

- **TypeScript**: Avoid using `any` type, prefer proper type definitions

- **Testing**: All new functions must have corresponding unit tests

- **Documentation**: Public APIs require JSDoc comments with examples

- **Performance**: Flag any synchronous operations that could block the main thread

### Code Quality Standards:

- Use functional programming patterns where appropriate

- Prefer composition over inheritance

- Follow the established naming conventions in the codebase

- Ensure proper error handling for all async operations

### Security Guidelines:

- Validate all user inputs at API boundaries

- Check for potential XSS vulnerabilities in UI components

- Ensure sensitive data is not exposed in logs or error messages

- Review authentication and authorization logic carefully

### Architecture Considerations:

- New components should follow the established design patterns

- Database changes must be backwards compatible

- API changes should maintain backwards compatibility when possible

- Consider the impact on existing integrations

Rules as files

- Rules live as Markdown files in .augment/rules/ (e.g., .augment/rules/pr-review.md).

- Each rule file contains rule text and examples only: no changelog or metadata.

- Rules are always applied; if you need conditional behavior, encode that logic inside the rule itself.

- When running the CLI, these rules are picked up automatically as long as they are in .augment/rules/; otherwise, use the --rules flag to provide their location.
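The lookup described in the last bullet can be sketched as a small pre-flight check. Treat this as an illustration under assumptions: `rules_cli_args` is a hypothetical helper, and passing the location as the argument immediately after --rules is a guess at the flag's syntax, so confirm it with auggie --help before relying on it.

```python
from pathlib import Path
from typing import List, Optional


def rules_cli_args(repo_root: str, rules_location: Optional[str] = None) -> List[str]:
    """Return the extra CLI arguments needed for the rules to be found.

    Hypothetical helper: an empty list means the default directory is
    enough; otherwise the custom location is handed to --rules.
    """
    if rules_location is not None:
        # Assumption: --rules takes the location as its next argument.
        return ["--rules", rules_location]

    default_dir = Path(repo_root) / ".augment" / "rules"
    if not any(default_dir.glob("*.md")):
        raise FileNotFoundError(
            f"no Markdown rule files in {default_dir}; "
            "add some or pass an explicit rules location"
        )
    # Rules in .augment/rules/ are picked up automatically.
    return []
```

Failing fast here keeps a CI run from silently reviewing a PR with no rules applied.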

Be AI First

AI reviews work best when everyone embraces them. Much of what makes an implementation successful comes down to rules, and those rules should be treated like living code. If a rule repeatedly misfires, treat it like a bug:

- Reproduce locally with the same diff/context

- Decide: refine the rule, add context, whitelist, or accept the finding

- Ship a small rule change (commit + PR) and canary it to one team

- Find time to review the rules on a regular basis

One of our leading customers described this mindset as pivotal to their success. They treat rule adjustments with the same rigor as fixing software bugs: versioned in source, triaged quickly, and deployed in small increments.

To support this, they stood up a dedicated Slack channel (they called it #augment-hypercare) where engineers flag issues and discuss improvements. The specific name doesn’t matter — what matters is making feedback visible, fast, and collaborative, so the bot feels like part of the team rather than a noisy outsider.

Implementation

1) Local iteration

- Install and run auggie against 10–20 recent PRs

- Label a sample of findings as Useful / Harmless / Wrong to estimate an initial Effective Rate (the % of findings that are genuinely useful vs. noise).

- Based on harmless/wrong issues, create 5–10 rules you have confidence in (secrets, critical security checks, clear anti-patterns)
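To make the Effective Rate concrete, here is one way to compute it from a labeled sample. It is a sketch under one stated assumption: both Harmless and Wrong findings count as noise, so only Useful findings go in the numerator. The function name `effective_rate` is illustrative.

```python
from collections import Counter
from typing import Iterable

ALLOWED_LABELS = {"useful", "harmless", "wrong"}


def effective_rate(labels: Iterable[str]) -> float:
    """Percentage of labeled findings that were marked Useful.

    Assumption: Harmless and Wrong are both treated as noise.
    """
    counts = Counter(label.strip().lower() for label in labels)
    unknown = set(counts) - ALLOWED_LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labeled findings to measure")
    return 100.0 * counts["useful"] / total


# Two useful findings out of four labeled ones.
sample = ["Useful", "Harmless", "Useful", "Wrong"]
print(effective_rate(sample))  # 50.0
```

Recomputing this number after each rule change gives you a simple before/after signal for whether the change helped.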

2) CI integration and prompts

Carry what you learned into your pipeline, adjusting these steps to suit your Git workflow:

- Add a pull_request job that:

  - Runs anytime a PR is opened against main

  - Checks out the repo

  - Installs auggie

  - Sets the GITHUB_API_TOKEN env variable

  - Runs the agent with your prompt, rules and details of the PR
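On GitHub Actions, the final step of that job could be a short script along these lines. This is a sketch, not the shipped workflow: it assumes the job is triggered by a pull_request event, reads the PR URL from the event payload that the runner exposes through `GITHUB_EVENT_PATH`, and uses hypothetical function names. Rules are assumed to live in .augment/rules/ so that they are picked up automatically.

```python
import json
import subprocess
from pathlib import Path
from typing import List, Mapping


def pr_url_from_event(event_path: str) -> str:
    """Read the PR URL from a pull_request event payload (JSON file)."""
    event = json.loads(Path(event_path).read_text(encoding="utf-8"))
    return event["pull_request"]["html_url"]


def build_ci_command(env: Mapping[str, str]) -> List[str]:
    """Validate the environment and build the review command."""
    # GITHUB_API_TOKEN is the variable the job is expected to set.
    if not env.get("GITHUB_API_TOKEN"):
        raise RuntimeError("GITHUB_API_TOKEN is not set")
    pr_url = pr_url_from_event(env["GITHUB_EVENT_PATH"])
    # Rules in .augment/rules/ need no extra flag.
    return ["auggie", "--print", f"Review this PR: {pr_url}"]


def run_review(env: Mapping[str, str]) -> None:
    """Run the review, failing the job if the CLI fails."""
    subprocess.run(build_ci_command(env), env=dict(env), check=True)
```

In the workflow, the step would call `run_review(os.environ)` after importing `os`. Checking the token up front turns a confusing mid-run failure into an immediate, readable error.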

If you’re using GitHub Actions, you can benefit from our preconfigured workflows.

We recommend everyone check out these workflows as they may provide some ideas for when you could have the flow trigger conditionally:

- 📝 Basic PR Review

- ⚙️ Custom Guidelines Review

- 🚧 Draft PR Review

- 🌿 Feature Branch Review

- 🛡️ Robust PR Review

- 🏷️ On-Demand Review

Important: our Agent has a built-in GitHub tool. If you’re not using GitHub, replace that step by connecting your VCS via an MCP server and passing its configuration with --mcp-config.

3) Noise control & UX

AI reviews only work if they stay helpful. To keep the bot from overwhelming reviewers, use these techniques:

- Prompt for one concise summary comment + only a few high-confidence inline comments per PR

- In line with the mindset shift described earlier, encourage authors to post in your feedback channel (e.g., #augment-hypercare) to trigger triage

- Review CI logs to see how the Agent thought. If you run in --quiet mode for the benefit of cleaner logs, you can find the most recent session at ~/.augment/sessions/* and add it to your build artifacts.
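Picking up the most recent session for your build artifacts could look roughly like this. The sketch makes assumptions that are not stated above: that each session is a single file or directory directly under the sessions folder, and that modification time identifies the newest one. The function name and the artifacts directory are hypothetical.

```python
import shutil
from pathlib import Path
from typing import Optional


def copy_latest_session(sessions_dir: Path, artifacts_dir: Path) -> Optional[Path]:
    """Copy the newest entry in sessions_dir into artifacts_dir.

    Returns the copied path, or None when there is nothing to copy.
    """
    if not sessions_dir.is_dir():
        return None
    entries = list(sessions_dir.iterdir())
    if not entries:
        return None
    # Assumption: the most recently modified entry is the latest session.
    latest = max(entries, key=lambda p: p.stat().st_mtime)

    artifacts_dir.mkdir(parents=True, exist_ok=True)
    target = artifacts_dir / latest.name
    if latest.is_dir():
        shutil.copytree(latest, target, dirs_exist_ok=True)
    else:
        shutil.copy2(latest, target)
    return target
```

A CI step might call `copy_latest_session(Path.home() / ".augment" / "sessions", Path("artifacts"))` and then upload the `artifacts` directory with whatever mechanism your pipeline already uses.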

4) Rollout & gating

Rather than rolling out to everyone immediately, we recommend a canary with a single team: measure, refine, and then expand. A successful integration in one team will give you more buy-in when you go to the next.

Thinking About ROI

We begin by measuring Agent effectiveness. That is highly useful for iterating on the implementation, but it doesn’t paint a full picture of value for the business. For that, after a two-week pilot, gather:

- #PRs per week (Y), avg iterations/PR, avg reviewer minutes/PR (M), Effective Rate, acceptance rate.

Directional math:

- Minutes saved per PR (X) ≈ reduction in avg reviewer minutes/PR (M) attributable to the bot.

- Weekly minutes saved = X * Y.

- Weekly hours = (X * Y) / 60.

- Weekly $ saved = weekly hours * avg reviewer hourly rate.

- Annualize for a high-level estimate.
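The directional math above fits in a small function. This is only a sketch: the input numbers in the example are invented for illustration, Y is taken as PRs per week, and annualizing by multiplying by 52 weeks is an assumption you may want to adjust for holidays or ramp-up.

```python
from typing import Dict


def review_savings(
    minutes_saved_per_pr: float,  # X
    prs_per_week: float,          # Y, assumed to be a weekly count
    hourly_rate: float,           # avg reviewer hourly rate
    weeks_per_year: int = 52,     # assumption used to annualize
) -> Dict[str, float]:
    """Apply the directional ROI math step by step."""
    weekly_minutes = minutes_saved_per_pr * prs_per_week
    weekly_hours = weekly_minutes / 60
    weekly_dollars = weekly_hours * hourly_rate
    return {
        "weekly_minutes": weekly_minutes,
        "weekly_hours": weekly_hours,
        "weekly_dollars": weekly_dollars,
        "annual_dollars": weekly_dollars * weeks_per_year,
    }


# Made-up inputs: 6 minutes saved per PR, 200 PRs a week, $90/hour.
estimate = review_savings(6, 200, 90)
print(estimate["weekly_hours"])    # 20.0
print(estimate["annual_dollars"])  # 93600.0
```

Keeping the intermediate values in the result makes it easy to sanity-check each step against the pilot data rather than trusting a single headline number.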

Help & Resources

- Docs: Augment CLI Overview

- Run auggie --help to see available options and flags

Written by

Matt Ball

Matt is passionate about empowering developers. At Postman, Matt was the first Solutions Architect where he helped build the go-to-market strategy. Matt previously led Professional Services Engineering at Qubit.

Mayur Nagarsheth

Mayur Nagarsheth is Head of Solutions Architecture at Augment Code, leveraging over a decade of experience leading enterprise presales, AI solutions, and GTM strategy. He previously scaled MongoDB's North America West business from $6M to $300M+ ARR, while building and mentoring high-performing teams. As an advisor to startups including Portend AI, MatchbookAI, Bitwage, Avocado Systems, and others, he helps drive GTM excellence, innovation, and developer productivity. Recognized as a Fellow of the British Computer Society, Mayur blends deep technical expertise with strategic leadership to accelerate growth.