TL;DR

Tasklist transforms "AI agent, do X" from a black-box wish into a transparent, measurable workflow. By storing each step as a first-class object (not just markdown bullets), Tasklist lets agents plan, execute, and report on multi-step work while you watch, edit, or stop them mid-run. Sign up, spin up a Tasklist, and start building.

Why We Built Tasklist

The Pain Today

Working with agents today means flying blind. You ask an agent to "refactor the authentication system," and three hours later you're wondering: did it start with the database schema or the API endpoints? Is it stuck on some edge case? How much work is left?

Most tools store plans as plain markdown, and agents working from them get distracted and forget parts of the task. As our co-founder Guy Gur-Ari puts it:

"Cramming complex workflows into a single prompt leads to bad results: the agent gets distracted and forgets to do parts of the task."

This is both a UI problem and an architectural one. The UI needs to surface what the agent is thinking, but more fundamentally, the underlying data model needs to support structured, stateful tasks that agents can reliably track and execute.

Design Goals

We needed a transparent surface where agents plan, execute, and report in a way that mirrors how you chunk work into 5-15 minute steps. We wanted opinionated constraints: strict status transitions that keep agents honest and debuggable.

Most importantly, we built for the future. Each task is first-class data rather than text, which will let future sub-agents verify work and enable cross-agent collaboration. Because large language models tend to lose track of details as their context grows, Tasklist carries the long-horizon plan while each individual task stays small enough to fit in a manageable context window.
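One way to picture "the list carries the plan, each task stays small" is a prompt builder that includes the whole plan as short titles but loads full instructions only for the active task. This is a minimal sketch under that assumption; `Task` and `build_task_context` are hypothetical names, not Tasklist's actual API.

```python
from dataclasses import dataclass


@dataclass
class Task:
    title: str        # short label, always visible in the plan
    description: str  # detailed instructions, loaded only for the active task


def build_task_context(tasks: list[Task], current: int) -> str:
    """Hypothetical: whole plan as titles, full detail for one task only."""
    lines = ["Plan:"]
    for i, task in enumerate(tasks):
        marker = ">>" if i == current else "  "  # highlight the active step
        lines.append(f"{marker} {i + 1}. {task.title}")
    lines.append("")
    lines.append(f"Current task: {tasks[current].title}")
    lines.append(tasks[current].description)
    return "\n".join(lines)


plan = [
    Task("Audit session handling", "Read the session module and list every token path."),
    Task("Migrate login endpoint", "Switch the login endpoint to the new token service."),
]
print(build_task_context(plan, current=1))
```

The prompt grows with the number of task titles, not with the detail of every task, which is the property the paragraph above describes.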

Like state machines in software engineering, structured task progression keeps agents on track through complex workflows, preventing the "single prompt" failure mode that leads to drift and incomplete work.

Here's how this works in practice:

North-Star Use Cases

The agent typically creates a Tasklist automatically when it encounters complex, multi-step problems. You can also initiate one manually with prompts like "Start a Tasklist to refactor this authentication system."

Real User Examples:

- Automated Code Review: Break down complex review criteria into structured tasks, ensuring the agent addresses each concern systematically rather than getting distracted.

- Ticket Decomposition: The agent can break down Jira or Linear tickets into structured tasks, execute them step by step, and post progress comments back to the original ticket.
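Because each task has an explicit state, a progress comment for the source ticket can be generated directly from the task list. The sketch below only formats the comment text; posting it through the Jira or Linear API is out of scope, and `format_progress_comment` and the ticket ID `ENG-123` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    title: str
    state: str  # "todo", "in_progress", "finished", or "cancelled"


def format_progress_comment(ticket_id: str, tasks: list[Task]) -> str:
    """Hypothetical: summarize task states as a ticket comment."""
    done = sum(1 for t in tasks if t.state == "finished")
    lines = [f"Progress on {ticket_id}: {done}/{len(tasks)} tasks finished"]
    for t in tasks:
        box = "x" if t.state == "finished" else " "
        lines.append(f"- [{box}] {t.title} ({t.state})")
    return "\n".join(lines)


print(format_progress_comment("ENG-123", [
    Task("Update users table schema", "finished"),
    Task("Migrate login endpoint", "in_progress"),
]))
```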

How Tasklist Compares

Most AI coding tools have recognized the need for planning, but they've taken fundamentally different architectural approaches:

Markdown-based planning (Windsurf and others)

- Approach: Plain text checklists that agents can "check off"

- Limitations: No programmatic access to task state, no analytics, difficult to build tooling around

Chat-based planning (GitHub Copilot, Claude)

- Approach: Planning happens in conversational context

- Limitations: Plans get lost in conversation flow, no persistent task state

Cursor's Composer multi-file edits

- Approach: Agent plans changes across multiple files in a single operation

- Limitations: Planning is tied to a single editing session, there is no task decomposition for complex workflows, and there is limited visibility into the agent's step-by-step reasoning

- This is closer to Augment Code’s Next Edit feature than to a strict planning and task-decomposition surface.

Augment Code’s structured approach with Tasklist

- Approach: Tasks as first-class typed entities with a strict lifecycle

- Benefits: Programmatic access, real-time updates, analytics, cross-session persistence

Why structure matters

The difference isn't just UI polish—it's about what becomes possible:

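With other planning tools, the plan is free text: a checklist or a "Files to modify:" list that has to be re-read and interpreted to know what is done.

With structured tasks, every step is a typed object with an explicit state that tools can query directly.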
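To make the contrast concrete, here is a hypothetical sketch (the plan contents and function names are illustrative, not Tasklist's format). Answering "what's left?" from a markdown checklist means re-parsing text with a pattern that breaks on small formatting drift, and a checkbox cannot say which task is currently in progress. With typed records, the same question is a direct query.

```python
import re
from dataclasses import dataclass

MARKDOWN_PLAN = """\
- [x] Update the users table schema
- [ ] Migrate the login endpoint
- [ ] Add tests for token refresh
"""


def pending_from_markdown(text: str) -> list[str]:
    # Free text must be re-parsed; "* [ ]" or "[X]" variants would slip past this regex.
    return re.findall(r"^- \[ \] (.+)$", text, flags=re.MULTILINE)


@dataclass
class Task:
    title: str
    state: str  # explicit state, including "in_progress", which a checkbox can't express


STRUCTURED_PLAN = [
    Task("Update the users table schema", "finished"),
    Task("Migrate the login endpoint", "in_progress"),
    Task("Add tests for token refresh", "todo"),
]


def pending_from_tasks(tasks: list[Task]) -> list[str]:
    # A direct field lookup: no parsing, no ambiguity.
    return [t.title for t in tasks if t.state in ("todo", "in_progress")]
```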

Augment’s structured approach enables:

- Real-time progress tracking (you see state changes as they happen)

- Cross-session persistence (move tasks between conversations)

- Future automation (validation sub-agents, cross-agent collaboration)

- Measurable outcomes (task completion rates, time-to-completion analytics)
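Once tasks carry states and timestamps, metrics like these become simple aggregations rather than text analysis. A minimal sketch, assuming each task record stores hypothetical `started_at` and `finished_at` fields:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class TaskRecord:
    state: str                             # "todo", "in_progress", "finished", "cancelled"
    started_at: Optional[datetime] = None  # hypothetical timestamp fields
    finished_at: Optional[datetime] = None


def completion_rate(tasks: list[TaskRecord]) -> float:
    """Fraction of tasks that reached "finished"."""
    if not tasks:
        return 0.0
    return sum(t.state == "finished" for t in tasks) / len(tasks)


def mean_time_to_completion(tasks: list[TaskRecord]) -> Optional[timedelta]:
    """Average start-to-finish duration over finished tasks with both timestamps."""
    durations = [
        t.finished_at - t.started_at
        for t in tasks
        if t.state == "finished" and t.started_at and t.finished_at
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)
```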

Trade-offs of this approach:

- More overhead: Creating structured tasks requires more initial setup than just writing markdown or planning file changes

- Opinionated workflow: You can't deviate from our state machine (this is intentional)

- Learning curve: Users need to understand the task lifecycle model

The payoff: agents that stay on track through complex workflows, and visibility into exactly what they plan to do next.

Architecture at a Glance

Structured Data Model

Moving from markdown to a structured schema unlocks deterministic metrics and analytics. Each task carries typed metadata that future systems can reason about, unlike free-form text that requires parsing and interpretation. Every task emits an approved_at event so reviewers (or verifier sub-agents) can confirm work.
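A minimal sketch of what "tasks as typed data" can look like. Apart from the four lifecycle states and the `approved_at` name, every field and method here is an assumption for illustration, not Tasklist's real schema. It also assumes approval only applies to finished tasks.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class TaskState(str, Enum):
    TODO = "todo"
    IN_PROGRESS = "in_progress"
    FINISHED = "finished"
    CANCELLED = "cancelled"


@dataclass
class Task:
    title: str
    description: str = ""
    state: TaskState = TaskState.TODO
    id: str = field(default_factory=lambda: uuid.uuid4().hex)
    approved_at: Optional[datetime] = None
    events: list = field(default_factory=list)  # append-only event log for this task

    def approve(self, reviewer: str) -> dict:
        """Record an approval by a human reviewer or a verifier sub-agent."""
        if self.state is not TaskState.FINISHED:
            raise ValueError("only finished tasks can be approved")
        self.approved_at = datetime.now(timezone.utc)
        event = {"type": "approved_at", "task_id": self.id,
                 "reviewer": reviewer, "at": self.approved_at.isoformat()}
        self.events.append(event)
        return event


def awaiting_review(tasks: list) -> list:
    """A typed query: finished-but-unapproved work, found without parsing any text."""
    return [t for t in tasks if t.state is TaskState.FINISHED and t.approved_at is None]
```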

Opinionated State Machine

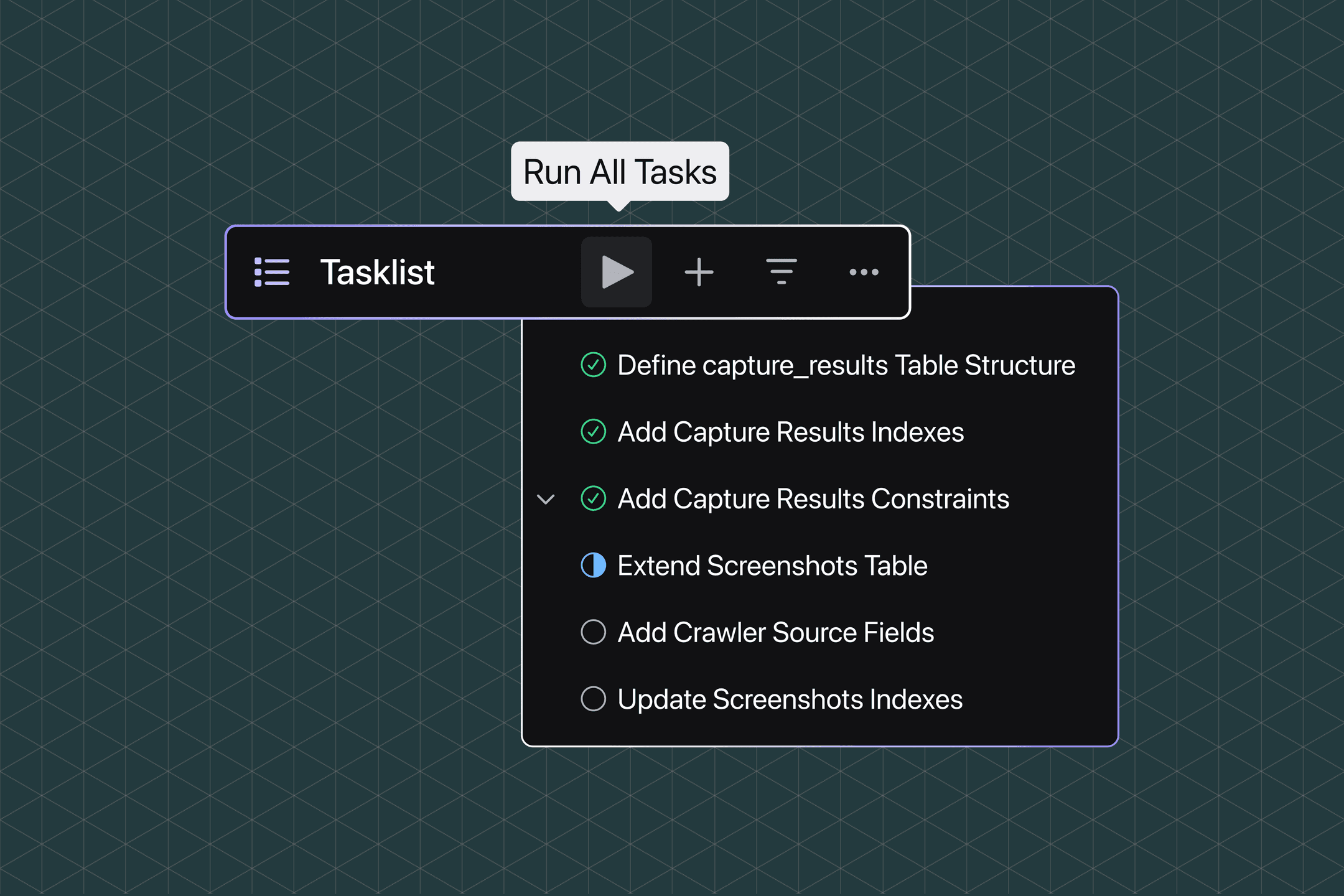

Tasks follow a strict lifecycle: todo → in_progress → finished / cancelled. The UI reflects this with distinct visual states: grey ▶ (not started), blue ◐ (in progress), and green ✓ (done). You can run tasks individually or execute the whole list in sequence with the play-all button at the top.
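The lifecycle above can be enforced with an explicit transition table. This sketch reads the arrows literally: todo can only move to in_progress, and in_progress can end as finished or cancelled. Whether a todo task may be cancelled directly is not stated, so the table omits it; `transition` and `InvalidTransition` are hypothetical names.

```python
from enum import Enum


class TaskState(Enum):
    TODO = "todo"
    IN_PROGRESS = "in_progress"
    FINISHED = "finished"
    CANCELLED = "cancelled"


# Allowed moves; finished and cancelled are terminal.
ALLOWED = {
    TaskState.TODO: {TaskState.IN_PROGRESS},
    TaskState.IN_PROGRESS: {TaskState.FINISHED, TaskState.CANCELLED},
    TaskState.FINISHED: set(),
    TaskState.CANCELLED: set(),
}


class InvalidTransition(Exception):
    pass


def transition(current: TaskState, target: TaskState) -> TaskState:
    """Return the new state, or raise if the lifecycle forbids the move."""
    if target not in ALLOWED[current]:
        raise InvalidTransition(f"{current.value} -> {target.value} is not allowed")
    return target
```

Rejecting a jump such as todo → finished is what keeps an agent from marking work done that it never started, which is the "honest and debuggable" property the design goals call for.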

As the agent flips a task from ◐ to ✓, the UI streams that update in real time—so you always know exactly what it's doing.
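Real-time updates amount to publishing every state change to subscribers. In this sketch an in-process callback stands in for whatever transport actually pushes updates to the UI, and `play_all` mimics the play-all button by running todo tasks in order. All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

# A listener receives (task title, old state, new state).
Listener = Callable[[str, str, str], None]


@dataclass
class TaskList:
    states: dict[str, str] = field(default_factory=dict)  # title -> state
    listeners: list[Listener] = field(default_factory=list)

    def subscribe(self, listener: Listener) -> None:
        self.listeners.append(listener)

    def set_state(self, title: str, new_state: str) -> None:
        old = self.states[title]
        self.states[title] = new_state
        for listener in self.listeners:  # push every change as it happens
            listener(title, old, new_state)

    def play_all(self, do_work: Callable[[str], None]) -> None:
        """Run each todo task in order, emitting updates before and after the work."""
        for title, state in list(self.states.items()):
            if state != "todo":
                continue
            self.set_state(title, "in_progress")
            do_work(title)
            self.set_state(title, "finished")
```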

When things go sideways, you can stop in-progress tasks with the red ⏹ button and redirect the agent immediately.
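A stop button can be modeled as cooperative cancellation: the button sets a flag, and the agent's work loop checks it between steps, marking the task cancelled instead of continuing. A minimal sketch using `threading.Event`; `RunningTask` and its methods are hypothetical.

```python
import threading
from typing import Callable


class RunningTask:
    def __init__(self, title: str) -> None:
        self.title = title
        self.state = "in_progress"
        self._stop = threading.Event()

    def stop(self) -> None:
        """What a stop (⏹) button could call: request cancellation."""
        self._stop.set()

    def run(self, steps: list[Callable[[], None]]) -> list[str]:
        """Run steps one at a time, checking for a stop request before each one."""
        completed = []
        for step in steps:
            if self._stop.is_set():
                self.state = "cancelled"
                return completed
            step()
            completed.append(step.__name__)
        self.state = "finished"
        return completed
```

Checking between steps means the step already underway finishes cleanly, and the agent stops before starting the next one.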

UI Model Binding

The interface is bound to the underlying task data, so it stays in sync as the agent updates tasks, even as the UI itself keeps improving. You can also move Tasklists between chats and carry all of their long-horizon context with you.
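Moving a Tasklist between chats works naturally when the list is data: serialize it, then restore it in the new conversation with every state intact. A minimal sketch with JSON; the `Tasklist` and `Task` classes and the `goal` field are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Task:
    title: str
    state: str = "todo"


@dataclass
class Tasklist:
    goal: str
    tasks: list[Task] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the whole list, including each task's state."""
        return json.dumps({"goal": self.goal, "tasks": [asdict(t) for t in self.tasks]})

    @classmethod
    def from_json(cls, data: str) -> "Tasklist":
        """Rebuild typed objects from the serialized form."""
        raw = json.loads(data)
        return cls(goal=raw["goal"], tasks=[Task(**t) for t in raw["tasks"]])
```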

Extensibility Hooks

We built Tasklist to work beyond just the web interface, making it extensible to a future CLI (hint, hint) or other surfaces we haven’t built yet.
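Because the UI is only one view of the task data, another surface such as a terminal can render the same records. This hypothetical sketch reuses the glyphs described above; the "✗" for cancelled tasks is an assumption, since no glyph for that state is specified.

```python
# ▶, ◐ and ✓ match the UI states described above; "✗" for cancelled is an assumption.
GLYPHS = {"todo": "▶", "in_progress": "◐", "finished": "✓", "cancelled": "✗"}


def render(tasks: list[tuple[str, str]]) -> str:
    """Render (title, state) pairs as plain text for a terminal-style surface."""
    return "\n".join(f"{GLYPHS[state]} {title}" for title, state in tasks)


print(render([("Update schema", "finished"), ("Migrate endpoint", "in_progress"), ("Add tests", "todo")]))
```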

Product Decisions That Matter

• Visibility over "magic": The Tasklist forces agents to show their plan upfront. No more black-box execution where you wait hours to see what went wrong. Because the plan is visible, you can stop or correct the agent early, before it goes off the rails.

• Measurability as a feature: Structured tasks produce metrics we can graph—critical for iteration.

• Planning within LLM limits: Short tasks keep context windows reasonable while the list preserves long-horizon intent.

• Structure prevents drift: Strict status transitions keep agents on track through complex workflows instead of letting them fall into the "single prompt" failure mode.

• Why we're not launching an IDE: We believe lightweight workflow surfaces matter more than heavyweight IDE plugins.

Where to Dive Deeper

Tasklist is now live, and it has fundamentally changed how we work with autonomous agents. The shift from unstructured planning to typed, stateful tasks creates accountability and measurement that didn't exist before.

Multiple teams at Augment are converging on the same insight: structured workflows are the key to reliable agent automation. Tasklist is our production-ready implementation of this principle.

Sign up, spin up a Tasklist, and start building.

Written by

Eric Hou

Eric Hou is currently building at Augment after engineering at Applied Intuition and working as a Technical Systems Researcher. His background includes AI research at Berkeley, where he developed computer vision systems with significantly higher accuracy and lower computational requirements. Eric's expertise spans blockchain technology, autonomous vehicles, and distributed systems, having authored industry standards for mobility technology and advised multiple startups.