Three PRs before lunch. Mass tab-completion. Functions materializing from thin air. You felt like a 10x engineer.

Then your tech lead blocks the PR because you called a method that was deprecated six months ago. The CI pipeline fails on a circular dependency the AI didn't see. You spend the afternoon debugging code you didn't write and don't fully understand.

Does this sound familiar? If you're nodding, you're not alone.

I talk to engineering teams every week at Augment. CTOs, staff engineers, team leads. And the same story keeps coming up. The AI honeymoon is over. What's left is a hangover.

What I'm hearing from teams

The pattern is consistent enough to be uncomfortable.

Teams tell me they're opening significantly more pull requests than they were a year ago. Some have doubled their PR volume. On paper, that's a win. Ship faster, win markets.

But here's what they say next: review cycles are getting longer, not shorter. Senior engineers are underwater. The queue keeps growing.

One engineering director put it bluntly: "We didn't get faster. We just moved the traffic jam."

Industry data backs this up. Reports from developer productivity teams show that while AI tools accelerate code generation, the time spent in review and debugging often increases to compensate. The productivity gain, for many teams, is smaller than the dashboards suggest.

When I dig into bug tracking with teams, a surprising number say they're spending more time on fixes now than before AI adoption. Not because the tools are bad, but because the tools are fast and context-blind. That's a dangerous combination.

The context gap

After dozens of these conversations, the failure mode became clear.

Most AI coding tools operate on what I'd call the "intern with amnesia" model. They see the file you have open. Maybe a few adjacent tabs. They have no idea that:

- UserFactory has a weird coupling with the billing service that broke prod twice last quarter.

- Your team banned that ORM pattern after the June incident.

- The method they're suggesting was deprecated in v2.3 and removed in v2.5.

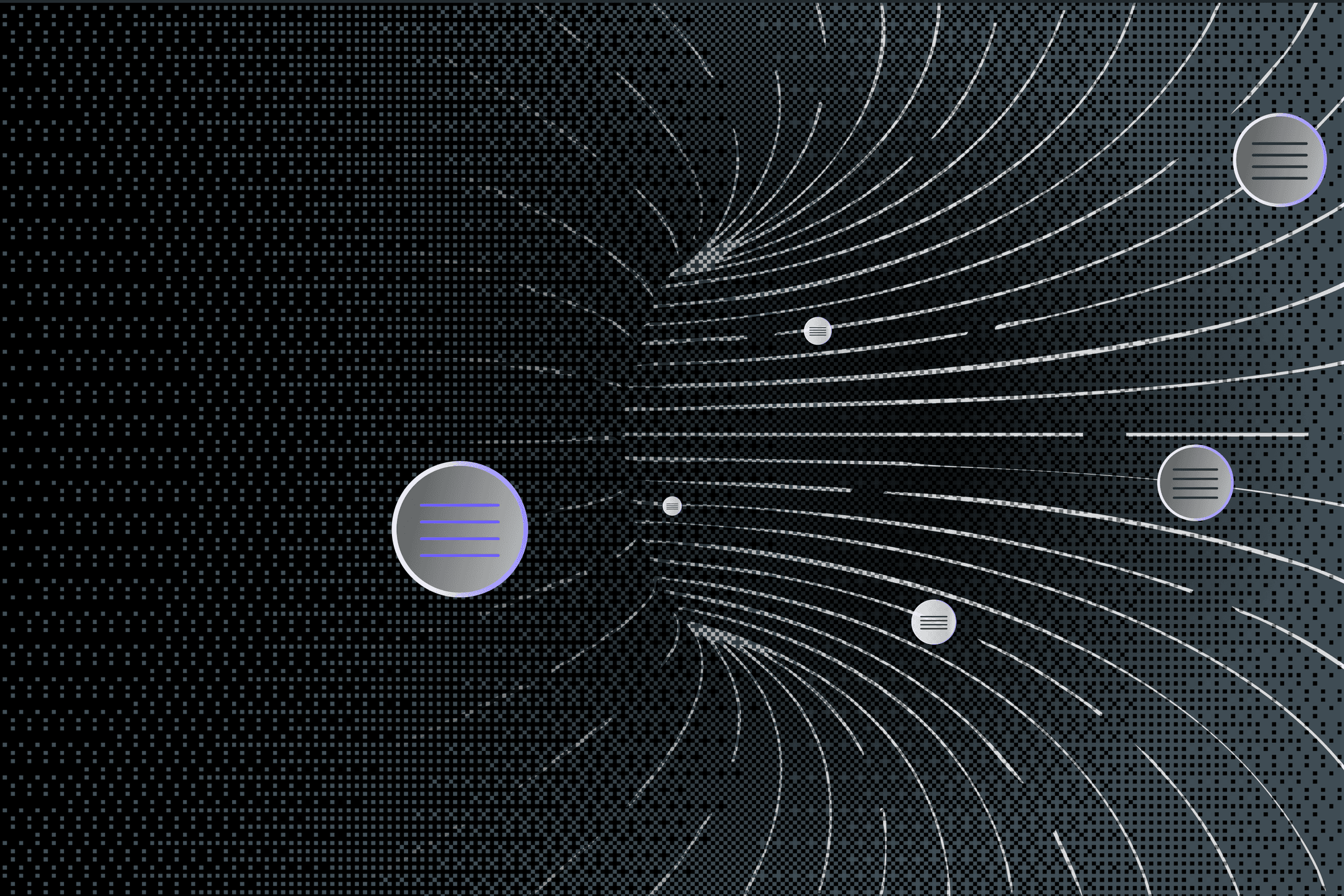

They're topologically blind. They see nodes, not edges. They see files, not systems.

This creates a specific failure mode. The code looks correct. It passes the syntax check. It even runs locally. But it violates assumptions that exist nowhere in the code itself, only in your team's collective memory.

When I ask developers why they don't fully trust AI-generated code, the answer is almost never "hallucinations" or "security." The overwhelming majority point to the same thing: the AI doesn't understand our codebase. It doesn't know how we do things here.

The LGTM reflex

Here's what actually worries me.

When you're drowning in AI-generated PRs, review quality degrades. I've watched it happen. Developers start pattern-matching instead of reasoning. "That looks roughly like what we'd write. Approved."

I call this the LGTM reflex, and it's more common than anyone wants to admit. Teams use AI to summarize PRs, then approve based on the summary without reading the diff. One engineer told me their team's unofficial rule is "if it's under 200 lines and the tests pass, just merge it."

We're optimizing for the appearance of velocity while accumulating invisible debt.

The developers who tell me they have "serious quality concerns" about AI-generated code aren't being paranoid. They're noticing that the codebase is slowly becoming incoherent. Not broken, just... drifting. Each AI-generated function is locally reasonable and globally arbitrary.

Why your current tools can't save you

The standard response is "just review more carefully" or "add more static analysis." I've tried making this argument to teams. It doesn't hold up.

Linters catch syntax, not architecture. A linter won't tell you that you're bypassing the retry wrapper your platform team spent a quarter building. The code is valid. It's just wrong.

Manual review doesn't scale. If your team generates 2x the code, you can't hire 2x the senior engineers to review it. And the engineers you have are already rubber-stamping because they're underwater.

File-level AI is the problem, not the solution. Using AI to review AI-generated code doesn't help when both the generator and the reviewer have the same blind spot: neither can see beyond the current file.

The tooling we have was designed for a world where humans wrote code at human speed. That world is gone.

What actually needs to change

The fix is conceptually simple: AI needs to understand your codebase the way a senior engineer with two years of tenure understands it.

Not just "what functions exist" but "how things connect." Which services call which. What patterns your team uses for error handling. Where the bodies are buried.

This means treating codebases as graphs, not file collections. A semantic understanding that knows Function A calls Service B, which depends on Schema C, which was last modified by the payments team and has specific constraints documented in a Notion page somewhere.
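To make "graphs, not file collections" concrete, here is a minimal sketch in Python. It is an illustration of the idea, not how any particular tool models a codebase. The class name `DependencyGraph` and the node names are hypothetical, and the edges are entered by hand; a real system would extract them from the code. It answers two questions a file-level view can't: what breaks if this node changes, and is there a circular dependency.

```python
from collections import defaultdict, deque


class DependencyGraph:
    """Directed graph where an edge A -> B means "A depends on B"."""

    def __init__(self):
        self._deps = defaultdict(set)        # node -> nodes it depends on
        self._dependents = defaultdict(set)  # node -> nodes that depend on it

    def add_edge(self, source, target):
        self._deps[source].add(target)
        self._dependents[target].add(source)

    def impacted_by(self, changed):
        """Return every node that transitively depends on `changed`."""
        seen = set()
        queue = deque([changed])
        while queue:
            node = queue.popleft()
            for dependent in self._dependents.get(node, ()):
                if dependent not in seen:
                    seen.add(dependent)
                    queue.append(dependent)
        return seen

    def find_cycle(self):
        """Return one dependency cycle as a list of nodes, or None."""
        unvisited, in_progress, done = 0, 1, 2
        state = defaultdict(int)
        path = []

        def visit(node):
            state[node] = in_progress
            path.append(node)
            for dep in sorted(self._deps.get(node, ())):
                if state[dep] == in_progress:
                    # Found a back edge: slice the current path into a cycle.
                    return path[path.index(dep):] + [dep]
                if state[dep] == unvisited:
                    cycle = visit(dep)
                    if cycle:
                        return cycle
            path.pop()
            state[node] = done
            return None

        for node in sorted(self._deps):
            if state[node] == unvisited:
                cycle = visit(node)
                if cycle:
                    return cycle
        return None


# Hypothetical edges mirroring the example in the text.
graph = DependencyGraph()
graph.add_edge("function_a", "service_b")
graph.add_edge("service_b", "schema_c")

print(sorted(graph.impacted_by("schema_c")))  # ['function_a', 'service_b']
print(graph.find_cycle())                     # None
```

A change to `schema_c` shows up as a change that touches `function_a`, even though the two never appear in the same file. That is the edge a file-level tool can't see.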

When AI has this context, quality shifts from reactive to preventative.

Why we built Augment Code Review

This is exactly why we built Augment Code Review.

Not another auto-approve bot. Not an AI that pretends to be a senior engineer and merges things on your behalf. We've seen where that leads.

Instead, we built something that makes you a better reviewer.

Here's the problem with most AI code review tools: they flag everything. Nitpicks about variable names. Style inconsistencies. Missing docstrings. You end up with 40 comments, 38 of which are noise. The two that matter get buried.

Augment Code Review does the opposite. Because it understands your entire codebase through our Context Engine, it can distinguish between "this violates a style preference" and "this will break the payment flow in production."

It surfaces the problems that actually matter:

- "This function duplicates logic that already exists in utils/retry.ts, but handles the edge case differently."

- "This import was deprecated in v2.3. The replacement is newAuthClient from the security module."

- "This pattern bypasses the rate limiter your team implemented last quarter."

The goal isn't to replace your judgment. It's to make sure you're spending your judgment on the right things. When you're reviewing twice the PRs you were a year ago, you can't afford to wade through noise. You need a filter that knows what matters in your codebase, not codebases in general.

The human still reviews. The human still merges. But the human isn't doing it blind anymore.

We evaluated seven of the most widely used AI code review tools on the only public benchmark for AI-assisted code review. Augment Code Review delivered the strongest performance by a significant margin.

What you can do this week

If this resonates, three things I've seen help:

1. Audit your last five production bugs. For each one, ask: "What file or document, if the AI had seen it, would have prevented this?" Start curating that context explicitly. Don't assume your tools will find it.

2. Kill the LGTM reflex. Make it a team norm: if you didn't read the diff, you don't approve. AI summaries are for triage, not judgment. This feels slower. It is slower. It's also the only way to maintain code quality at AI-generation speeds.

3. Make implicit standards explicit. Your team has opinions. No nested ternaries. Always use the custom fetch wrapper. Never call the database directly from handlers. These rules exist in your head but not in any document. Write them down in a format your tools can actually use.
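One way to start on the third item is a small rules table that a script can enforce. The sketch below is an assumption about what that could look like, not a recommendation of a specific tool. It uses Python's standard `ast` module, and the banned call names and messages in `BANNED_CALLS` are hypothetical stand-ins for your own team's rules.

```python
import ast

# Hypothetical team rules: banned call -> what to do instead.
BANNED_CALLS = {
    "requests.get": "use the team's fetch wrapper instead of calling requests directly",
    "db.execute": "handlers should go through the repository layer, not the database",
}


def dotted_name(node):
    """Turn an ast.Name / ast.Attribute chain into 'a.b.c', or return None."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None


def check_source(source, filename="<string>"):
    """Return sorted (line, call, message) tuples for every banned call found."""
    violations = []
    tree = ast.parse(source, filename=filename)
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = dotted_name(node.func)
            if name in BANNED_CALLS:
                violations.append((node.lineno, name, BANNED_CALLS[name]))
    return sorted(violations)


# A made-up handler that breaks the "always use the fetch wrapper" rule.
sample = '''
import requests

def handler(user_id):
    profile = requests.get("/users/" + str(user_id))
    return profile.json()
'''

for line, call, message in check_source(sample):
    print(f"line {line}: {call}: {message}")
```

This matches on names only, so an aliased import (`import requests as r`) slips through. That's fine for a first pass. The point is that the rule now lives in a file that both people and tools can read, not just in someone's head.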

The metric that matters

The leaderboard isn't "PRs opened per week." It's not "lines of code generated."

It's "months since the last mass refactor." Time between architectural resets. How long your codebase stays coherent under continuous AI-assisted modification.

That's the number that predicts whether your team is actually shipping faster or just generating tomorrow's technical debt at unprecedented speed.

The AI coding hangover is real. But it's a tooling problem, not a discipline problem. AI started by writing code without understanding codebases. Now we're building tools that actually understand context, so your team can review with confidence instead of crossing their fingers.

Written by

John Edstrom

Director of Engineering

John is a seasoned engineering leader currently redefining how engineering teams work with complex codebases in his role as Director of Engineering at Augment Code. With deep expertise in scaling developer tools and infrastructure, John previously held leadership roles at Patreon, Instagram, and Facebook, where he focused on developer productivity and platform engineering. He holds advanced degrees in computer science and brings a blend of technical leadership and product vision to his writing and work in the engineering community.