TL;DR

Enterprise development teams lose 71 days annually to coordination overhead because fragmented AI tools (ChatGPT for PMs, GitHub Copilot for developers, separate QA tools) cannot preserve architectural context across handoffs. This implementation guide shows how to deploy unified workflow management built on persistent context engines with 200k+ token capacity, an approach validated by a 70% win rate over single-purpose coding assistants in enterprise evaluations.

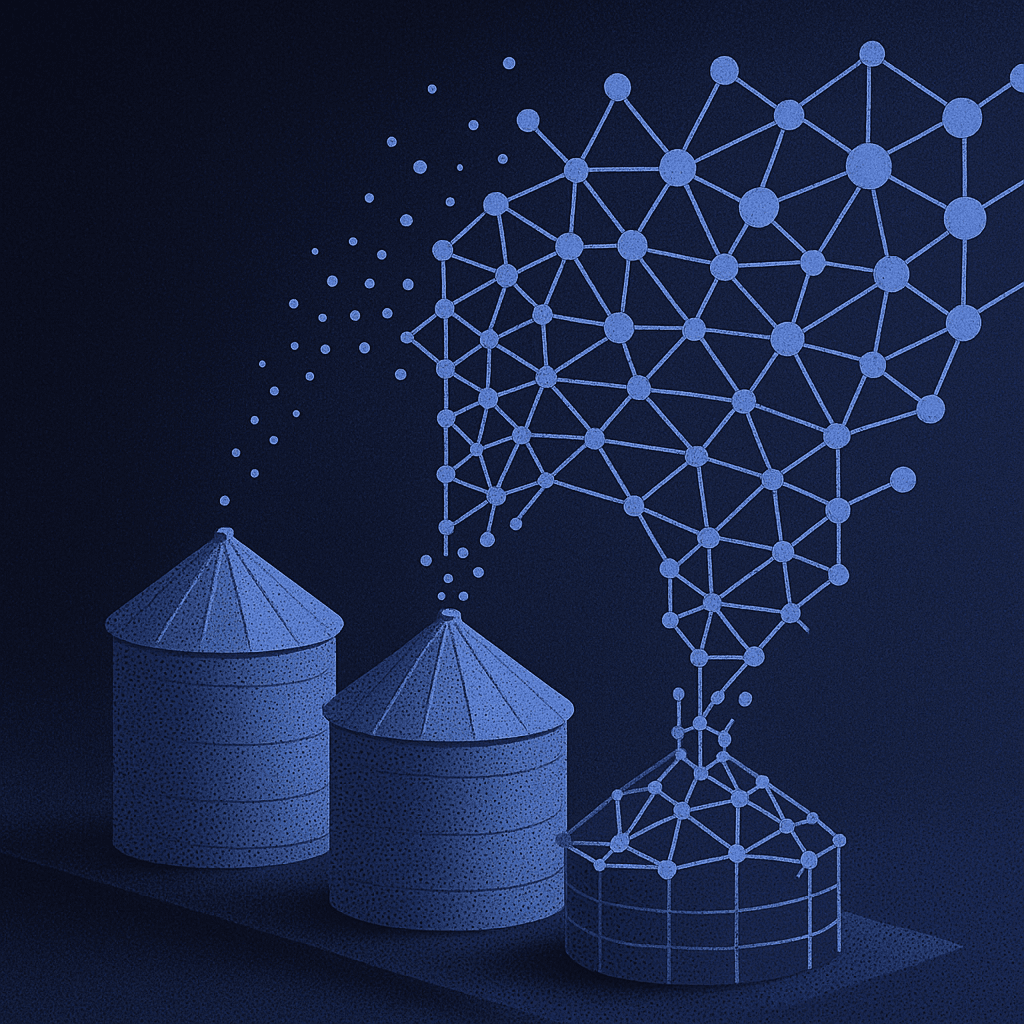

Why Traditional AI Tools Create Development Workflow Fragmentation

Modern development teams face significant coordination overhead when using fragmented AI tools across the software development lifecycle. Product managers draft requirements in ChatGPT, developers implement features using GitHub Copilot's limited context, and QA engineers generate tests using separate tools with zero awareness of architectural decisions.

This disjointed approach creates what Harvard Business Review analysis identifies as "AI-reinforced organizational silos," where automation tools actually increase coordination overhead rather than reducing it. Each handoff requires complete context reconstruction, forcing developers to spend hours rebuilding the reasoning behind requirements: context that a unified system could have preserved.

The Hidden Cost of Context Switching in Development Workflows

The productivity impact compounds across enterprise teams. Gartner research shows 78% of organizations have formal developer experience initiatives, yet coordination losses from tool fragmentation remain largely unmeasured. Consider a typical feature development cycle:

- PM Phase: Product manager uses ChatGPT to draft Jira requirements without system context

- Development Phase: Developer copies code snippets into GitHub Copilot for guidance, starting from zero context

- QA Phase: QA engineer generates test cases using different AI tools, missing edge cases the developer's implementation already addresses

Each handoff discards context that the next role must rebuild from scratch, and that cost recurs in every development cycle.

How Unified AI Workflow Management Platforms Solve Context Problems

Unified platforms address coordination handoffs by maintaining persistent context that PMs, developers, and QA engineers all draw on. Unlike traditional AI assistants that operate in isolation, these platforms understand relationships between requirements, implementation decisions, and testing strategies.

Augment Code's Context Engine Architecture Advantage

Augment Code's proprietary context engine delivers comprehensive application understanding through several key differentiators:

| Feature | Augment Code | GitHub Copilot | Traditional Tools |
|---|---|---|---|
| File Support | 400k+ files | Limited | Single file focus |
| Persistence | Cross-session memory | Session isolation | No persistence |
| Integration | PM, Dev, QA unified | Developer-only | Tool-specific silos |
| Security | ISO/IEC 42001, SOC 2 | Basic enterprise | Varies |

Enterprise AI Workflow Management Implementation Guide

Engineering teams can systematically deploy unified AI workflow management platforms, though implementation requires careful planning around enterprise constraints and architectural requirements.

Phase 1: Current State Assessment and Baseline Measurement

Map existing PM→Dev→QA workflows to document coordination overhead and establish baseline metrics. Because coordination costs are largely hidden, teams typically need several weeks of analysis before accurate measurements emerge.

Key Assessment Areas:

- Context reconstruction time per handoff

- Duplicate effort percentage across phases

- Architectural knowledge loss between team members

- Developer onboarding velocity metrics
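To make the assessment areas concrete, here is a minimal sketch of how a team might turn logged handoffs into baseline numbers. The `Handoff` record, its fields, and the sample values are hypothetical and purely illustrative; substitute whatever your time-tracking or ticketing data actually captures.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Handoff:
    """One PM->Dev or Dev->QA handoff logged during the assessment (hypothetical schema)."""
    source: str                  # phase handing off, e.g. "PM"
    target: str                  # phase receiving, e.g. "Dev"
    reconstruction_hours: float  # time spent re-learning context after the handoff
    duplicated_hours: float      # effort that repeated work from the previous phase
    total_hours: float           # total effort recorded in the receiving phase


def baseline_metrics(handoffs: list[Handoff], onboarding_days: list[float]) -> dict[str, float]:
    """Compute average reconstruction time, duplicate-effort share, and median onboarding time."""
    if not handoffs:
        raise ValueError("need at least one handoff")
    total = sum(h.total_hours for h in handoffs)
    duplicated = sum(h.duplicated_hours for h in handoffs)
    return {
        "avg_reconstruction_hours": mean(h.reconstruction_hours for h in handoffs),
        "duplicate_effort_pct": 100.0 * duplicated / total if total else 0.0,
        # Days until a new developer ships independently; NaN if not yet measured.
        "median_onboarding_days": median(onboarding_days) if onboarding_days else float("nan"),
    }


# Illustrative sample data only, not measurements.
handoffs = [
    Handoff("PM", "Dev", reconstruction_hours=6.0, duplicated_hours=4.0, total_hours=40.0),
    Handoff("Dev", "QA", reconstruction_hours=3.0, duplicated_hours=2.0, total_hours=20.0),
]
metrics = baseline_metrics(handoffs, onboarding_days=[30, 45, 60])
print(metrics)
```

Re-running the same calculation after deployment gives a like-for-like comparison against the baseline.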

Phase 2: Technical Architecture Planning

Unified AI workflow management requires specific technical capabilities that traditional code assistants cannot provide:

Core Technical Requirements:

Phase 3: Deployment Strategy and Integration Challenges

Augment Code excels with standard technology stacks (React, Node.js, Python) but may require extended integration time with legacy systems or highly customized build configurations. Persisting context across microservices also requires deliberately configuring context boundaries and mapping how services interact.

Implementation Reality Considerations:

- Context boundary optimization for microservices architectures

- Service relationship mapping for distributed systems

- Integration complexity with existing toolchain ecosystems

- Security framework alignment with compliance requirements
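Service relationship mapping and context boundaries can be reasoned about as a graph problem. The sketch below assumes a hypothetical call graph (`CALLS`) and treats a context boundary as every service within a chosen number of hops of the one being changed. It illustrates the idea only; it is not how Augment Code configures boundaries internally.

```python
from collections import deque

# Hypothetical service call graph: caller -> services it calls.
CALLS = {
    "web-frontend": ["api-gateway"],
    "api-gateway": ["orders", "users"],
    "orders": ["payments", "inventory"],
    "payments": ["ledger"],
    "users": [],
    "inventory": [],
    "ledger": [],
}


def undirected(graph: dict[str, list[str]]) -> dict[str, set[str]]:
    """A change can affect both callers and callees, so treat call edges as undirected."""
    adj: dict[str, set[str]] = {s: set() for s in graph}
    for caller, callees in graph.items():
        for callee in callees:
            adj.setdefault(caller, set()).add(callee)
            adj.setdefault(callee, set()).add(caller)
    return adj


def context_boundary(graph: dict[str, list[str]], service: str, max_hops: int) -> dict[str, int]:
    """Breadth-first search returning each service within max_hops and its hop distance."""
    adj = undirected(graph)
    if service not in adj:
        raise KeyError(service)
    distance = {service: 0}
    queue = deque([service])
    while queue:
        current = queue.popleft()
        if distance[current] == max_hops:
            continue  # do not expand past the boundary
        for neighbor in adj[current]:
            if neighbor not in distance:
                distance[neighbor] = distance[current] + 1
                queue.append(neighbor)
    return distance


boundary = context_boundary(CALLS, "orders", max_hops=1)
print(sorted(boundary.items(), key=lambda kv: (kv[1], kv[0])))
```

A small hop count keeps the boundary tight for local changes; widening it trades focus for coverage of downstream effects.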

Comparing Unified Platforms vs. Traditional AI Coding Tools

The architectural differences between unified AI workflow management platforms and traditional coding assistants create significant capability gaps that affect enterprise adoption.

GitHub Copilot Limitations in Enterprise Workflows

While GitHub Copilot serves millions of users with basic code completion, several fundamental limitations prevent effective workflow unification:

Context Architecture Constraints:

- Limited Token Windows: 8k token capacity is insufficient for enterprise codebase understanding

- Session Isolation: No persistence across tools, team members, or development phases

- Single-Role Focus: Designed for individual developers, not cross-functional workflows

- Basic Security Framework: Lacks advanced enterprise governance and compliance features
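Whether a given window size is "insufficient" depends on the codebase, so it helps to estimate your own. This sketch uses the common rule of thumb of roughly four characters per token; that ratio, the file suffixes, and the function names are assumptions, and a real tokenizer will give different counts. The window sizes compared are the 8k and 200k figures cited in this article.

```python
import tempfile
from pathlib import Path

# Rough rule of thumb (~4 characters per token); real tokenizers vary by model and language.
CHARS_PER_TOKEN = 4
SOURCE_SUFFIXES = (".py", ".ts", ".js", ".java", ".go")  # assumption: adjust to your stack


def estimate_repo_tokens(root, suffixes=SOURCE_SUFFIXES) -> int:
    """Estimate tokens for all source files under `root` using the character heuristic."""
    total_chars = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def window_coverage(repo_tokens: int, window_tokens: int) -> float:
    """Fraction of the estimated codebase that fits in one context window (capped at 1.0)."""
    if repo_tokens <= 0:
        return 1.0
    return min(1.0, window_tokens / repo_tokens)


# Demo on a synthetic repository: 100,000 lines of "x = 1\n" is 600,000 characters.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "app.py").write_text("x = 1\n" * 100_000, encoding="utf-8")
    tokens = estimate_repo_tokens(tmp)

for window in (8_000, 200_000):
    print(f"{window:>7,}-token window covers {window_coverage(tokens, window):.1%} of ~{tokens:,} tokens")
```

Point `estimate_repo_tokens` at a real checkout to see how much of it a single window could hold at once.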

Augment Code's Competitive Advantages in AI Workflow Management

Augment Code addresses these limitations through purpose-built enterprise architecture:

Measuring Business Impact of Unified AI Workflow Management

Enterprise deployments of unified AI workflow management platforms demonstrate measurable productivity improvements across multiple dimensions.

Development Velocity and Efficiency Metrics

LinearB analysis of 400+ development teams shows unified platforms deliver:

- 19% faster development cycles through reduced context switching

- 71 days annually saved per team via persistent context awareness

- Reduced coordination overhead between PM, development, and QA phases

Enterprise Adoption and Win Rate Analysis

Augment Code achieves a 70% win rate over GitHub Copilot in enterprise evaluations through superior context understanding and cross-functional workflow support. Customer feedback consistently highlights that suggestions "feel like they came from the development team," which reflects the Context Engine's awareness of the surrounding codebase.

Customer Evidence Patterns:

- "Helps evolve mature, messy, production-level codebases," where traditional tools struggle

- "Understands the codebase better than competitors" through architectural awareness

- "Reduces dependency on senior engineers for routine tasks" via context sharing

Security and Compliance in Enterprise AI Workflow Management

Enterprise adoption of AI workflow management platforms requires robust security frameworks addressing data governance, compliance requirements, and organizational risk management.

Augment Code's Enterprise Security Architecture

Augment Code implements comprehensive security controls designed for regulated enterprise environments:

Security Framework Components:

- ISO/IEC 42001 Certification: AI-specific management systems addressing model behavior monitoring and algorithmic decision management

- SOC 2 Type II Compliance: Security trust services covering availability, processing integrity, confidentiality, and privacy

- Customer-Managed Encryption Keys: Non-extractable API architecture preventing unauthorized data exposure

- Zero Training Policy: Never trains on customer proprietary code or data

NIST AI Risk Management Framework Alignment

The NIST AI Risk Management Framework establishes four essential functions for enterprise AI governance that unified platforms should satisfy by design:

- Govern: Policies and oversight for responsible AI development and deployment

- Map: Understanding AI system context, capabilities, and associated risks

- Measure: Ongoing assessment of AI system performance and risk factors

- Manage: Response to identified risks and continuous improvement processes
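During evaluation, the four functions can double as a coverage check. In this sketch the checklist entries are hypothetical examples, not controls prescribed by NIST; the point is simply to flag any function with no satisfied control.

```python
from enum import Enum


class RmfFunction(Enum):
    """The four core functions of the NIST AI Risk Management Framework."""
    GOVERN = "Govern"
    MAP = "Map"
    MEASURE = "Measure"
    MANAGE = "Manage"


# Hypothetical evaluation checklist: (control description, RMF function, satisfied?)
CHECKLIST = [
    ("Documented AI usage policy with a named owner", RmfFunction.GOVERN, True),
    ("Inventory of repositories the assistant may index", RmfFunction.MAP, True),
    ("Tracked suggestion acceptance and defect rates", RmfFunction.MEASURE, False),
    ("Incident process for leaked or unsafe suggestions", RmfFunction.MANAGE, True),
]


def uncovered_functions(checklist) -> list[RmfFunction]:
    """Return RMF functions that have no satisfied control, in framework order."""
    covered = {function for _, function, satisfied in checklist if satisfied}
    return [function for function in RmfFunction if function not in covered]


gaps = uncovered_functions(CHECKLIST)
print([function.value for function in gaps])
```

Any function reported here is a governance gap to close before wider rollout.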

Best Practices for AI Workflow Management Platform Selection

Engineering teams evaluating unified AI workflow management platforms should assess capabilities across several critical dimensions that affect long-term success and ROI.

Technical Evaluation Criteria

Context and Integration Capabilities:

- Token capacity supporting enterprise-scale codebases

- Real-time indexing with live embedding updates as code evolves

- Cross-session memory preserving architectural decisions and team patterns

- Integration protocols supporting existing toolchain (Jira, GitHub, Slack, CI/CD)
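"Live embedding updates" usually rest on cheap change detection, so only modified files are re-embedded. This sketch assumes content hashing with SHA-256 as the change signal; the `plan_reindex` helper is hypothetical, and the actual embedding call is left out because it depends on the platform.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a file's contents."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(index: dict[str, str], files: dict[str, str]) -> tuple[list[str], list[str]]:
    """Compare current files against the stored hashes.

    Returns (paths to re-embed, paths to drop) and updates `index` in place,
    so the next call only reports changes made since this one.
    """
    to_embed = []
    for path, text in files.items():
        digest = content_hash(text)
        if index.get(path) != digest:  # new file or changed contents
            to_embed.append(path)
            index[path] = digest
    to_drop = [path for path in index if path not in files]  # deleted files
    for path in to_drop:
        del index[path]
    return sorted(to_embed), sorted(to_drop)


index: dict[str, str] = {}
print(plan_reindex(index, {"a.py": "x = 1", "b.py": "y = 2"}))
print(plan_reindex(index, {"a.py": "x = 1", "b.py": "y = 3"}))  # only b.py changed
print(plan_reindex(index, {"a.py": "x = 1"}))                   # b.py deleted
```

Unchanged files cost one hash comparison, which is what makes re-indexing on every save practical.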

Security and Compliance Requirements:

- Enterprise-grade certifications (ISO/IEC 42001, SOC 2 Type II)

- Customer-controlled encryption and data governance policies

- Audit trails and monitoring capabilities for regulatory compliance

- Zero training policies protecting proprietary intellectual property

Cost-Benefit Analysis Framework

Unified AI workflow management platforms require premium investment compared to basic coding assistants. Augment Code's pricing (roughly $30-50 per month versus $10 per month for GitHub Copilot) reflects enterprise-grade capabilities and infrastructure requirements.

ROI Calculation Factors:

- Reduced coordination overhead between development phases

- Accelerated developer onboarding and productivity ramp

- Improved code quality through architectural consistency

- Enhanced developer retention through improved experience
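The price gap and the factors above can be combined into a simple break-even check. This sketch assumes per-seat monthly pricing and uses the upper end of the ~$30-50 range against $10; the loaded hourly cost and hours saved per seat are hypothetical inputs, not measured results.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    seats: int
    unified_price: float         # $/seat/month (assumed per-seat pricing)
    baseline_price: float        # $/seat/month for the basic assistant
    loaded_hourly_cost: float    # hypothetical fully loaded $/engineer-hour
    hours_saved_per_seat: float  # hypothetical hours/month recovered from coordination overhead


def monthly_net_benefit(i: RoiInputs) -> float:
    """Value of recovered hours minus the extra subscription cost, per month."""
    extra_cost = i.seats * (i.unified_price - i.baseline_price)
    recovered_value = i.seats * i.hours_saved_per_seat * i.loaded_hourly_cost
    return recovered_value - extra_cost


def break_even_hours(i: RoiInputs) -> float:
    """Hours each seat must save per month to cover the price difference."""
    if i.loaded_hourly_cost <= 0:
        raise ValueError("loaded_hourly_cost must be positive")
    return (i.unified_price - i.baseline_price) / i.loaded_hourly_cost


example = RoiInputs(seats=50, unified_price=50.0, baseline_price=10.0,
                    loaded_hourly_cost=100.0, hours_saved_per_seat=2.0)
print(f"Net monthly benefit: ${monthly_net_benefit(example):,.0f}")
print(f"Break-even: {break_even_hours(example):.1f} hours per seat per month")
```

Framing the decision as break-even hours makes it easy to test against the baseline metrics from Phase 1.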

Preparing for Autonomous Development with AI Workflow Management

Gartner identifies Agentic AI as the #1 strategic technology trend for 2025, indicating rapid evolution from current AI assistants toward autonomous development capabilities. Organizations implementing unified workflow management platforms position themselves for this transition.

Evolution Timeline for Autonomous Development

- Current State (2024): AI assistants providing code suggestions and documentation support

- Near-term (2025-2026): Autonomous task execution handling complete feature implementation cycles

- Advanced (2026-2027): Fully autonomous agents managing planning, coding, testing, and deployment workflows

Technical Prerequisites for Autonomous Development

Autonomous development requires foundational infrastructure that unified platforms provide:

- Context persistence across sessions and team members

- Cross-functional workflow understanding beyond code generation

- Enterprise security frameworks supporting autonomous operations

- Memory systems accumulating organizational and project knowledge
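Cross-session memory can be pictured as a decision log that outlives any single session. This file-backed sketch is a hypothetical illustration of the prerequisite, with `DecisionMemory` and its JSON format invented for the example; production platforms use their own storage and retrieval.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class Decision:
    topic: str
    decision: str
    author: str


class DecisionMemory:
    """Minimal file-backed memory so decisions survive across sessions and team members."""

    def __init__(self, path):
        self.path = Path(path)
        self.decisions: list[Decision] = []
        if self.path.exists():  # load what earlier sessions recorded
            raw = json.loads(self.path.read_text(encoding="utf-8"))
            self.decisions = [Decision(**item) for item in raw]

    def record(self, topic: str, decision: str, author: str) -> None:
        """Append a decision and persist the full log immediately."""
        self.decisions.append(Decision(topic, decision, author))
        payload = json.dumps([asdict(d) for d in self.decisions], indent=2)
        self.path.write_text(payload, encoding="utf-8")

    def recall(self, topic: str) -> list[Decision]:
        return [d for d in self.decisions if d.topic == topic]


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "decisions.json"
    DecisionMemory(store).record("auth", "Use OAuth2 via the API gateway", "pm")
    later_session = DecisionMemory(store)  # new session, possibly a different team member
    recalled = [d.decision for d in later_session.recall("auth")]

print(recalled)
```

The second instance sees the PM's decision without anyone restating it, which is the handoff behavior the prerequisites describe.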

Augment Code delivers these architectural requirements while traditional AI assistants lack the foundation for autonomous agent deployment across PM, development, and QA functions.

Transforming Development Productivity Through Unified AI Workflow Management

Unified AI workflow management platforms reduce the coordination overhead that fragments modern development teams. While traditional tools like GitHub Copilot provide basic code completion within limited contexts, enterprise teams require comprehensive solutions that understand architectural complexity and maintain persistent context across development phases.

Augment Code's advanced Context Engine, Claude model integration, and real-time indexing capabilities address the fundamental limitations of fragmented AI tools. Through persistent context awareness and cross-functional workflow support, development teams achieve measurable productivity improvements while preparing for the autonomous development future.

The window for architectural decisions continues to narrow as autonomous development capabilities advance. Teams establishing unified AI workflow management infrastructure now will compound productivity gains while competitors struggle with tool fragmentation and coordination overhead.

Ready to transform development team productivity? Experience how Augment Code's unified AI workflow management platform reduces context switching and coordination overhead. Install Augment Code today and discover why it wins 70% of enterprise evaluations against traditional coding assistants through comprehensive context intelligence and workflow unification.


Written by

Molisha Shah

GTM and Customer Champion