TL;DR

Roughly 80% of companies report no material earnings impact from their AI investments, largely because organizations treat AI coding deployments as technology installations rather than systematic process transformations requiring governance frameworks and quantified ROI measurement. This implementation guide walks through an audit-to-scaling methodology using repository-wide context analysis, tiered autonomy controls, and cited productivity benchmarks, including a 10.6% increase in pull requests in one enterprise case study.

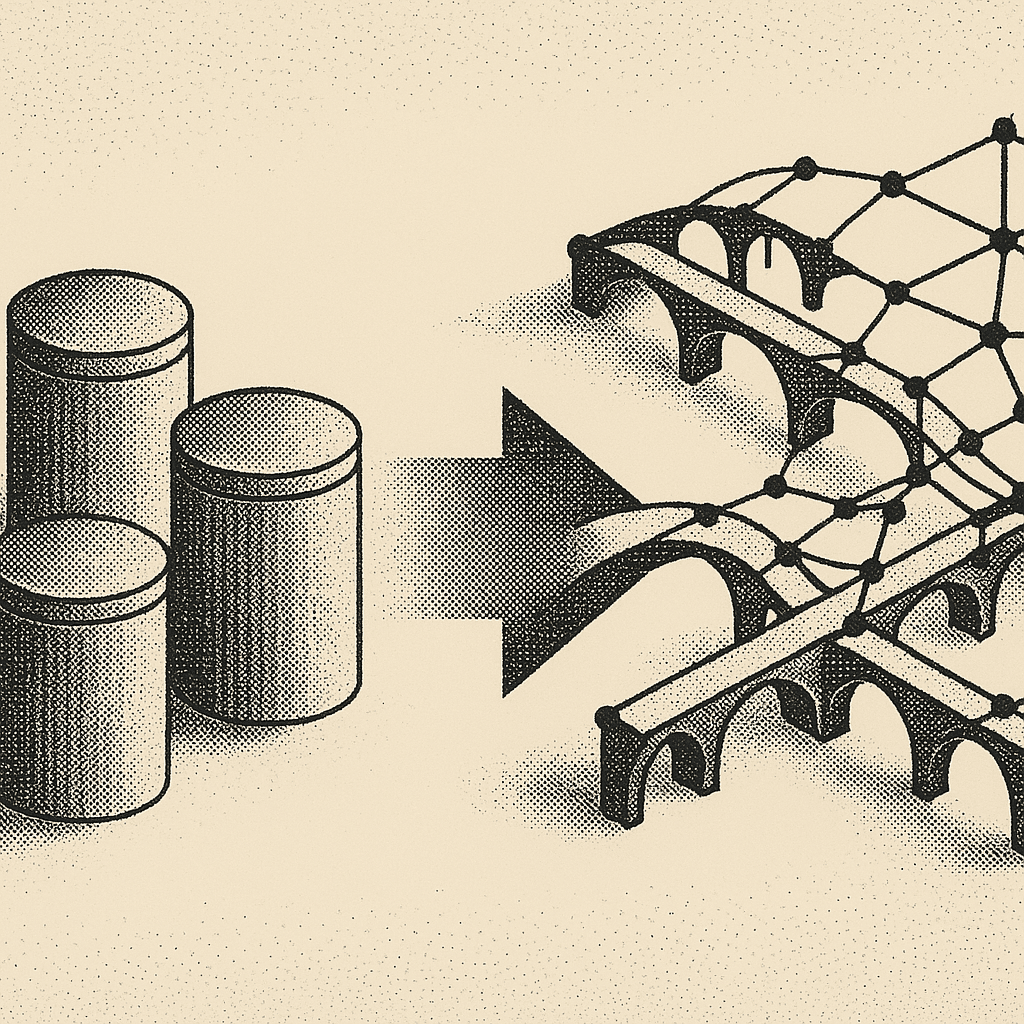

Remote agent architectures let engineering organizations bring AI-powered coding capabilities to distributed teams, automating complex workflows without constant supervision. While 84% of developers currently use or plan to use AI tools, roughly 80% of companies report no material impact on earnings from their AI investments. The difference lies in systematic implementation strategy.

Understanding Repository-Wide AI Systems

Repository-wide AI systems differ fundamentally from basic code completion tools. These systems maintain comprehensive codebase understanding and execute complex tasks across multiple services without constant supervision. Organizations treating AI deployment as a process challenge rather than a technology installation achieve measurably better outcomes.

GitHub's research demonstrates developers complete tasks 55% faster with AI assistance. Enterprise case studies show a 10.6% increase in pull requests and a 3.5-hour reduction in cycle time. The market trajectory is clear: Gartner projects 75% of enterprise software engineers will use AI code assistants by 2028, up from less than 10% in early 2023. This 7.5x growth represents one of the fastest enterprise technology adoption curves documented for developer productivity tools.

The framework outlined below addresses the remote agent use case through systematic deployment of repository-wide AI coding capabilities, covering initial setup through organization-wide scaling, including governance frameworks, security requirements, and quantified ROI measurement strategies.

How to Deploy AI Coding Assistants in 15 Minutes

Engineering teams can extract immediate value from enterprise AI coding assistants with these practical setup steps:

- Enterprise Authentication: Connect to existing identity systems through enterprise AI coding assistant dashboards with SAML/OAuth integration. Most platforms support single sign-on integration in under 5 minutes.

- Repository Integration: Configure repository access permissions matching existing Git workflows. Grant the AI assistant read access to target repositories through version control system API settings.

- Context Validation: Test repository-wide understanding by asking the AI assistant to explain service architecture or identify dependencies between modules. Verify it can access and understand codebase structure.

- Immediate Value Demo: Generate a complete API endpoint with validation, error handling, and comprehensive tests in under 2 minutes by providing context like: "Create POST /v2/users endpoint following existing patterns in `/services/user/` with full Jest test suite."

This quick validation demonstrates the core capability that distinguishes enterprise AI coding assistants from basic code completion tools: comprehensive repository context enabling junior developers to implement complex features immediately.

Step 1: Audit Tribal Knowledge and Development Bottlenecks

McKinsey's research identifies knowledge democratization as critical: "Agentic organizations democratize knowledge by embedding AI into day-to-day workflows." Systematic bottleneck identification reveals deployment priorities and maximizes return on AI investment.

Knowledge Silo Audit Checklist

Identify critical bottlenecks using these measurable indicators:

- Services/modules with ≤2 active contributors (analyzable via git blame)

- Pull requests idle >48 hours awaiting expert review

- Communication patterns showing expert dependency bottlenecks

- New hire productivity timeline >2 weeks
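The first indicator in the checklist can be approximated from commit history. The sketch below is one possible approach, not a prescribed method: it uses `git log` output rather than `git blame`, assumes the log was produced with `git log --since="6 months ago" --format="commit-author:%ae" --name-only`, and treats the first two path segments as the "module". The function name, the `commit-author:` marker, and the depth default are all illustrative assumptions.

```python
from collections import defaultdict

AUTHOR_MARKER = "commit-author:"  # assumed marker, set via --format above


def low_contributor_modules(log_text, max_contributors=2, depth=2):
    """Return {module: sorted authors} for modules with few distinct authors.

    log_text holds one marker line per commit followed by the paths that
    commit touched (the shape git prints with --name-only).
    """
    authors_by_module = defaultdict(set)
    current_author = None
    for raw in log_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith(AUTHOR_MARKER):
            current_author = line[len(AUTHOR_MARKER):]
            continue
        if current_author is None:
            continue  # path seen before any author line; ignore it
        # Treat the first `depth` path segments as the module name.
        module = "/".join(line.split("/")[:depth])
        authors_by_module[module].add(current_author)
    return {
        module: sorted(authors)
        for module, authors in sorted(authors_by_module.items())
        if len(authors) <= max_contributors
    }
```

Modules returned by this function are candidates for the knowledge silo list; the time window and the contributor threshold are worth tuning per organization.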

Quantified prioritization context:

Enterprise adoption of automated code review grew from 39% to 76% between January and May 2025, roughly a 95% relative increase in four months. This makes automated code review the highest-impact deployment target.

Implementation Priority Matrix

Focus AI deployment on these high-value scenarios:

- High frequency, low complexity: Documentation generation, configuration updates

- Medium frequency, high impact: Cross-service refactoring, dependency updates

- Low frequency, critical path: Architecture decisions, security reviews

The audit reveals where AI coding assistants provide maximum impact by converting tribal knowledge into accessible, queryable expertise distributed across development teams.

Step 2: Set Up Repository-Wide Context Engine

Modern enterprise AI tools maintain codebase understanding across entire repositories, with significantly larger context windows than early implementations. Automated repository scanning analyzes the codebase architecture, giving these tools more context to work with than standard coding assistants.

Enterprise AI Implementation Approach

- Authentication: Integration with enterprise identity systems (SAML, OAuth) through enterprise AI coding assistant dashboards

- Repository scanning: Comprehensive codebase analysis through automated repository indexing

- Context validation: Dependency graph accuracy verification through git analysis tools

Context Scope Configuration

- Include: Source code, documentation, configuration files, test suites

- Exclude: Secrets, binaries, generated assets via `.gitignore` patterns

- Monitor: Index currency through automated re-scanning schedules
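To make the include and exclude rules concrete, here is a minimal sketch of a scope filter. It is an illustration under stated assumptions: the pattern lists are hypothetical examples, and the matching uses simple shell-style globs from Python's `fnmatch` module, which does not implement full `.gitignore` semantics (no negation, no `**` handling).

```python
from fnmatch import fnmatchcase

# Hypothetical pattern lists; adjust to the repository's actual layout.
INCLUDE_PATTERNS = ["*.py", "*.ts", "*.js", "*.md", "*.yaml", "*.json"]
EXCLUDE_PATTERNS = [".env", "*.pem", "*.key", "dist/*", "build/*", "*.min.js"]


def _matches(path, patterns):
    """True if the full path or its file name matches any glob pattern."""
    name = path.rsplit("/", 1)[-1]
    return any(fnmatchcase(path, p) or fnmatchcase(name, p) for p in patterns)


def in_context_scope(path, include=INCLUDE_PATTERNS, exclude=EXCLUDE_PATTERNS):
    """Index a file only if it is included and not explicitly excluded."""
    return _matches(path, include) and not _matches(path, exclude)
```

Exclusion wins over inclusion here, so a file such as `dist/app.json` stays out of the index even though `*.json` is included. Note that `dist/*` in this sketch only matches a top-level `dist` directory.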

Security Boundaries

Repository-level access controls matching existing Git permissions provide enterprise-grade isolation. ISO/IEC 42001:2023 compliance requires comprehensive AI system impact assessments and continuous performance review processes.

Performance monitoring:

Track context window usage, query response times, and accuracy rates against established baselines to identify configuration improvements for distributed team deployment. Advanced enterprise AI coding assistants can process more repository context than basic coding assistants.

Step 3: Create On-Demand Remote Agent Tasks

Repository-wide context access transforms junior developers into effective contributors through comprehensive codebase knowledge. GitHub's agent capabilities allow developers to "refactor code into several different functions for better readability" while agents "work autonomously in the background to implement these changes."

Effective AI Coding Assistant Prompt Structure

Consider this scenario: A junior developer adds an API endpoint to a billing service without deep domain knowledge.
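One way to structure a prompt for this scenario is to separate the task, the reference code to imitate, the constraints, and the expected deliverables. The helper below is a hypothetical sketch, not any vendor's prompt format; the endpoint, paths, and constraint wording are invented for illustration.

```python
def build_task_prompt(task, reference_paths, constraints, deliverables):
    """Assemble a sectioned prompt so the assistant gets explicit context."""
    sections = [
        ("Task", [task]),
        ("Follow existing patterns in", reference_paths),
        ("Constraints", constraints),
        ("Deliverables", deliverables),
    ]
    lines = []
    for title, items in sections:
        lines.append(f"{title}:")
        lines.extend(f"- {item}" for item in items)
        lines.append("")  # blank line between sections
    return "\n".join(lines).strip()


# Hypothetical billing-service example; every name here is illustrative.
prompt = build_task_prompt(
    task="Add a POST /v2/invoices endpoint to the billing service",
    reference_paths=["/services/billing/", "/services/user/"],
    constraints=[
        "Reuse the existing validation and error-handling helpers",
        "Do not change public response schemas of existing endpoints",
    ],
    deliverables=["Endpoint implementation", "Unit and integration tests"],
)
```

Naming concrete file paths and service boundaries gives the assistant an explicit anchor in the codebase, which matters most when the developer lacks the domain knowledge to spot a wrong pattern.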

Documented Workflow

The implementation follows this pattern: AI assistant generates comprehensive diff → developer reviews architectural decisions → approval triggers implementation → automated tests verify integration.

Measured impact:

Case study analysis shows engineers spending less time on boilerplate generation, with indications that this frees time for more creative problem-solving.

Implementation boundaries:

This workflow is effective for standard patterns and established architectures. Novel system designs, custom frameworks, and greenfield architecture decisions require senior oversight beyond AI capabilities.

Step 4: Automate Large-Scale Refactors Across Squads

GitHub's autonomous capabilities enable enterprises to "delegate open issues to GitHub Copilot and let coding agents write, run, and test code in the background" for systematic refactoring across multiple repositories.

Cross-Service Refactoring Example

Replace deprecated logDebug() with logger.debug() across 9 microservices using enterprise AI coding assistants.

Systematic AI Assistant Approach

- Impact analysis: Use AI to generate dependency graph identifying affected services

- Batch processing: Create separate branches per service to isolate changes through multi-repo capabilities

- Automated testing: Run full test suites to verify functional compatibility

- Distributed review: Generate pull requests for each team's repositories
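The rewrite step for the `logDebug()` example can be sketched as a dry run that produces a diff without touching files. This is a simplified, text-based illustration using a regular expression; it assumes `logger` is already in scope in each file and does not parse the code, so a real migration would likely rely on an AST-based codemod or the assistant's own tooling.

```python
import difflib
import re

# Match bare logDebug( calls, but not obj.logDebug( or names ending in logDebug.
DEPRECATED_CALL = re.compile(r"(?<![\w.])logDebug\(")


def rewrite_source(source):
    """Replace deprecated logDebug( calls with logger.debug(."""
    return DEPRECATED_CALL.sub("logger.debug(", source)


def preview_diff(path, source):
    """Return a unified diff of the proposed change, or '' if nothing changes."""
    updated = rewrite_source(source)
    diff = difflib.unified_diff(
        source.splitlines(keepends=True),
        updated.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)
```

Running `preview_diff` per file in each service branch gives reviewers a diff to approve before any change is written.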

Distributed Team Advantages

AI agents operate continuously, preparing code reviews for teams across time zones. Teams receive prepared diffs rather than coordination overhead.

- Measured throughput: Enterprise implementations report 20-30% speed improvement for systematic refactoring tasks compared to manual coordination across multiple teams.

- Risk mitigation: AI assistant dry-run modes with comprehensive diff previews provide validation workflows. Microsoft's autonomous AI agents targeting technical debt focus on automated legacy application modernization with built-in validation workflows.

Step 5: Implement Governance, Security, and Compliance

Enterprise deployment requires comprehensive governance frameworks. Deloitte's analysis emphasizes that ISO/IEC 42001:2023 "builds upon control frameworks that many organizations already have in place, including data governance, IT, security, privacy, enterprise risk management, and internal audit."

Certification Requirements

- ISO/IEC 42001:2023: AI management system standards for enterprise environments

- SOC 2 Type II: Security and operational controls for cloud-based systems

- GDPR/CCPA: Data privacy compliance for code repositories containing user data

Enterprise AI Implementation Architecture

- Non-extractable APIs: Code analysis without data persistence in external systems

- Audit logging: Comprehensive tracking of AI interactions and code modifications

- Role-based access control: Repository and branch-level permissions matching Git workflows

Autonomy Tier Framework

- Read-only analysis: Code review, documentation generation, security scanning

- Suggestion mode: Generate pull requests requiring human approval

- Auto-commit: Rule-based approvals for low-risk changes (configuration, tests)
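The three tiers can be expressed as a small policy check. The sketch below is an assumption-laden illustration: the tier names mirror the list above, but the `config/` and `tests/` path prefixes and the returned action names are hypothetical, and a production policy would likely consider more signals than file paths.

```python
from enum import IntEnum


class Tier(IntEnum):
    READ_ONLY = 1    # analysis only, no changes proposed
    SUGGESTION = 2   # pull requests that need human approval
    AUTO_COMMIT = 3  # rule-based approval for low-risk changes


# Hypothetical definition of "low-risk": configuration and test files only.
LOW_RISK_PREFIXES = ("config/", "tests/")


def required_handling(repo_tier, changed_paths):
    """Decide how an AI-generated change must be handled for a repository."""
    if repo_tier == Tier.READ_ONLY:
        return "reject"
    low_risk = bool(changed_paths) and all(
        path.startswith(LOW_RISK_PREFIXES) for path in changed_paths
    )
    if repo_tier == Tier.AUTO_COMMIT and low_risk:
        return "auto_commit"
    return "human_review"
```

Any change that touches a path outside the low-risk set falls back to human review, even in a repository configured for auto-commit.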

Governance integration:

KPMG's guidance recommends adopting "robust AI governance strategies to align with regulatory requirements and stakeholder expectations" through existing enterprise control integration rather than separate governance structures.

Step 6: Measure Impact and Iterate at Scale

Gartner emphasizes that "software engineering leaders must determine ROI and build a business case as they scale their rollouts." Systematic measurement prevents the common failure mode where AI tool deployments show no measurable business impact.

Quantified KPI Framework

| Metric Category | Baseline Target | Evidence Source |
|---|---|---|
| Task Completion Speed | 55% faster completion | GitHub research |
| Pull Request Throughput | 10.6% increase | Harness case study |
| Cycle Time Reduction | 3.5-hour improvement | Harness case study |
| Systematic Refactoring Speed | 5-10x improvement over manual coordination | Enterprise implementations |

Validated Measurement Approaches

Harness.io's analysis using their Software Engineering Insights platform documented a 10.6% increase in pull requests and a 3.5-hour reduction in cycle time through month-over-month comparative analysis.
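A month-over-month comparison of this kind reduces to two calculations: a percentage change in merged pull requests and an absolute change in cycle time. The sketch below assumes each month is summarized as a dictionary with hypothetical `merged_prs` and `median_cycle_hours` keys; it is not the Harness platform's method, only the underlying arithmetic.

```python
def percent_change(before, after):
    """Relative change from before to after, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


def compare_months(baseline, current):
    """Summarize throughput and cycle-time change between two months."""
    return {
        "pr_throughput_change_pct": round(
            percent_change(baseline["merged_prs"], current["merged_prs"]), 1
        ),
        "cycle_time_change_hours": round(
            current["median_cycle_hours"] - baseline["median_cycle_hours"], 1
        ),
    }
```

For example, with made-up inputs of 500 merged pull requests and a 40.0-hour median cycle time in the baseline month, then 553 and 36.5 in the following month, the function reports a 10.6% throughput change and a -3.5 hour cycle-time change. A single month pair is noisy, so comparing several months before and after rollout gives a more reliable picture.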

Rollout Strategy

- Pilot phase: Single team, 2-week measurement baseline with AI coding assistants

- Expansion: 3-5 teams, comparative analysis across implementation approaches

- Organization-wide: Full rollout incorporating lessons learned from the pilot and expansion phases

ROI calculation framework: LinearB's cost analysis establishes baseline costs at $19-39 per developer monthly. Break-even requires approximately 2-4 hours monthly productivity improvement per developer.
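The break-even point depends on what an hour of developer time is worth, which the figures above do not state. The sketch below shows the arithmetic in both directions; the hourly cost passed in is an assumption the reader must supply, and the function names are illustrative.

```python
def break_even_hours(monthly_cost_per_dev, loaded_hourly_cost):
    """Hours of saved time per month needed to cover the license cost."""
    if loaded_hourly_cost <= 0:
        raise ValueError("loaded_hourly_cost must be positive")
    return monthly_cost_per_dev / loaded_hourly_cost


def implied_hourly_value(monthly_cost_per_dev, break_even_hours_per_month):
    """Hourly value of developer time implied by a stated break-even point."""
    if break_even_hours_per_month <= 0:
        raise ValueError("break_even_hours_per_month must be positive")
    return monthly_cost_per_dev / break_even_hours_per_month
```

Taken on license cost alone, $19 at 2 hours and $39 at 4 hours imply a value of $9.50 to $9.75 per developer hour. With a higher assumed loaded cost, for example $75 per hour, `break_even_hours(39, 75)` comes to about half an hour per month, so the 2-4 hour figure is best read as a conservative threshold.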

Common Pitfalls and How to Avoid Them

| Pitfall | Solution | Evidence Source |
|---|---|---|
| Technology-first deployment | Treat AI initiatives as process transformations requiring governance frameworks | DX Enterprise Analysis |
| Over-dependence on AI suggestions | Implement human oversight with confidence thresholds and mandatory review gates | Industry Research |
| Inadequate security controls | Establish comprehensive audit logging and non-extractable API architectures | ISO/IEC 42001 Framework |
| Missing ROI measurement | Establish baseline metrics before deployment and track comparative improvements | Gartner Implementation Guidance |

Best Practices for Effective Implementation

Context Enhancement

- Reference specific file paths and service boundaries in AI assistant prompts

- Maintain updated dependency graphs for cross-service impact analysis

- Implement repository-level access controls matching Git permissions

Security Implementation

- Use `.gitignore` patterns to exclude secrets and proprietary data

- Deploy audit logging for all AI interactions and code modifications

- Start with read-only analysis before advancing to autonomous code generation

Governance Frameworks

- Begin with suggestion mode requiring human approval for all changes

- Implement confidence score thresholds for auto-commit capabilities

- Integrate with existing enterprise risk management and compliance processes

Measurement Strategy

- Establish productivity baselines before AI deployment for accurate ROI calculation

- Track developer satisfaction alongside quantitative productivity metrics

- Use comparative analysis across teams to validate implementation approaches

Accelerate Development with Remote AI Agent Capabilities

AI coding assistants represent the infrastructure layer for democratizing software engineering expertise across distributed teams. McKinsey warns that organizations lagging in systematic AI adoption risk competitive disadvantage as the technology transitions from early adoption to market standard.

The data supports strategic investment: 75% enterprise adoption projected by 2028, 55% faster task completion in GitHub's research, and a 10.6% increase in pull requests in the Harness case study. However, successful deployment requires treating AI as an organizational process challenge rather than a simple technology installation.

AI coding assistance is rapidly becoming standard practice across engineering organizations. Organizations implementing comprehensive governance frameworks, measurement strategies, and rollout processes establish sustainable productivity advantages over those deploying AI tools without strategic integration.

Implementation Timeline

Deploy enterprise AI coding assistants within 30 days using established enterprise deployment processes, establish measurement baseline within 60 days, achieve organization-wide rollout within 6 months. The 7.5x growth trajectory from 10% to 75% adoption over five years means early systematic implementation provides 2-3 years of competitive advantage before market saturation.

Start by auditing tribal knowledge and bottlenecks using the framework outlined above, then implement repository-wide AI capabilities systematically rather than as ad-hoc tool adoption. Focus on systematic bottleneck identification and governance framework establishment to achieve measurable productivity improvements and convert tribal knowledge into shared, queryable expertise distributed across development organizations.

Following this audit-to-scaling process helps avoid the common failure mode where 80% of AI tool deployments show no measurable business impact. The market data is clear: early strategic implementation of AI coding assistants with proper governance frameworks delivers sustainable competitive advantage in the rapidly evolving software development landscape.

Ready to scale AI workflows across your engineering teams? Explore Augment Code for enterprise-grade AI coding assistance with comprehensive repository context, advanced security controls, and proven productivity improvements.

Written by

Molisha Shah

GTM and Customer Champion