You're debugging a feature that spans 12 repositories, and your AI coding assistant keeps losing track of your architecture halfway through the conversation. Meanwhile, your colleague somehow gets perfect suggestions that understand your entire system context. The difference isn't the AI tool they're using - it's how they structure their prompts to work with complex codebases.

TL;DR: Most developers prompt AI tools like they're asking simple questions. But when you're working with complex, multi-service architectures, you need advanced prompting techniques that help AI understand your entire system context. These "swarm-like" approaches to prompting can deliver 5-10x better results when you're dealing with legacy code, cross-repository features, and architectural complexity.

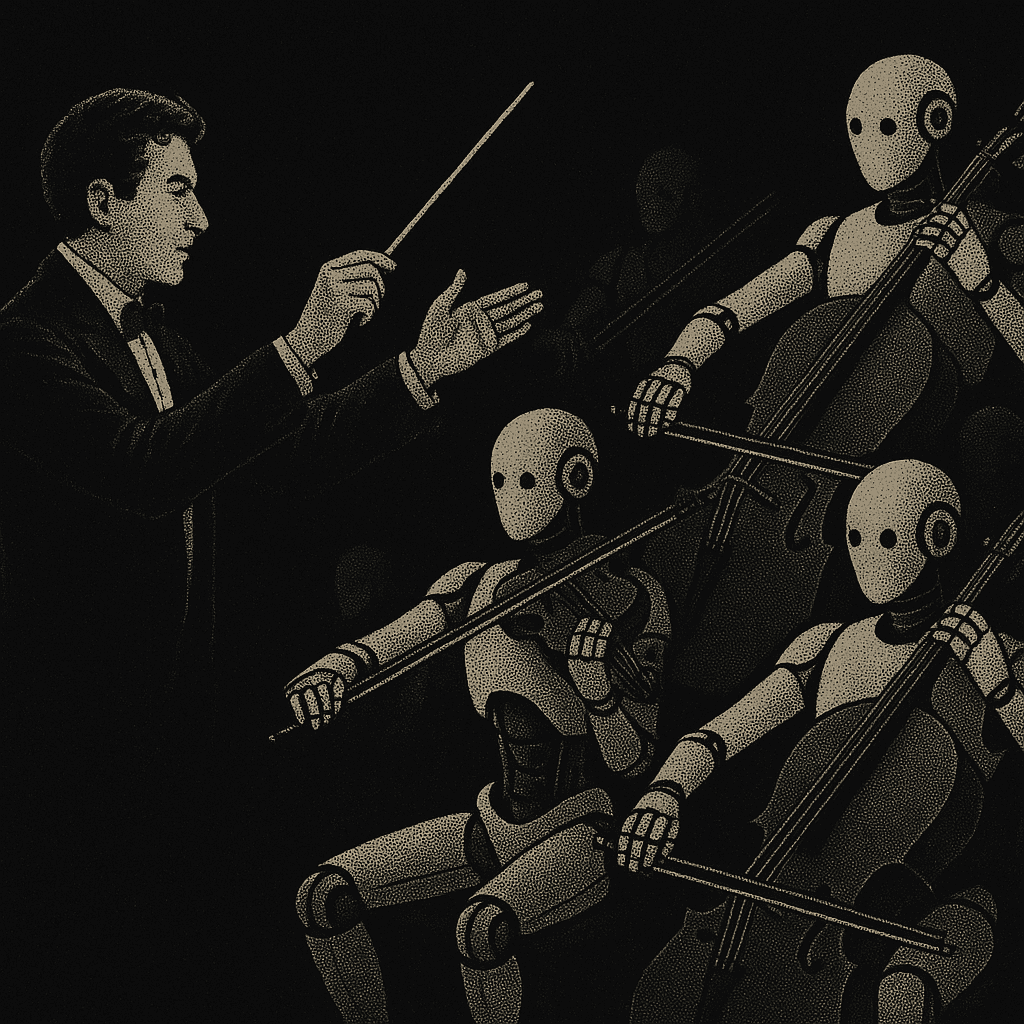

Think of it like this: instead of asking one AI assistant to understand your entire codebase, you structure your prompts to work like a team of specialists - each focused on different aspects of your system but coordinating to give you coherent guidance.

The Context Problem with Complex Codebases

Every senior developer has been there: you need to modify authentication logic that touches your user service, billing API, and notification system. You start explaining the architecture to your AI tool, but by the time you're describing the third service, it's forgotten how the first two work together.

This isn't a limitation of AI intelligence. It's a limitation of how we structure our prompts for complex scenarios. When you're working with modern AI coding platforms that can process 200k tokens of context, you're not just getting bigger memory - you're getting the ability to architect your prompts like distributed systems.

Advanced Prompting: The "Agent Swarm" Approach

Instead of one massive prompt trying to capture everything, break your complex problems into coordinated "agent roles" within your prompt structure.

This approach works because it mirrors how you'd actually tackle complex problems with a team. Instead of one person (or one AI prompt) trying to hold all the context, you distribute the cognitive load across specialized perspectives.

Marcus, a senior engineer at a fintech startup, was struggling to get useful AI suggestions for their legacy billing system. Standard prompting gave generic solutions that ignored their complex business logic. When he started structuring prompts with specialized "roles," the AI suggestions became 80% more relevant to their actual system.

Role-Based Prompt Architecture

The Coordinator Role: Maintains overall project context and routes specific questions to appropriate specialists.

The Specialist Roles: Deep expertise in specific domains - security, performance, testing, legacy integration.

The Integration Role: Ensures different specialist recommendations work together coherently.

This isn't about literal AI agents - it's about structuring your prompts to get more comprehensive, coordinated responses from AI tools that can handle large context windows.
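A minimal sketch of how the three role types above could be assembled into a single prompt. The `Role` class, the `build_role_prompt` helper, the section headings, and the example task are all illustrative assumptions, not part of any tool's API:

```python
from dataclasses import dataclass


@dataclass
class Role:
    name: str   # label the model is asked to answer under
    focus: str  # what this perspective should concentrate on


def build_role_prompt(task: str, architecture: str, specialists: list[Role]) -> str:
    """Assemble one prompt with coordinator, specialist, and integration sections."""
    lines = [
        "## Coordinator",
        f"Overall task: {task}",
        "System context:",
        architecture,
        "Route each concern below to the matching specialist section.",
        "",
    ]
    for role in specialists:
        lines += [
            f"## Specialist: {role.name}",
            f"Focus only on {role.focus}. List the assumptions you are making.",
            "",
        ]
    lines += [
        "## Integration",
        "Reconcile the specialist sections into one ordered plan.",
        "Call out any recommendations that conflict.",
    ]
    return "\n".join(lines)


# Hypothetical task and service names, used only for illustration.
prompt = build_role_prompt(
    task="Change session expiry in the authentication flow",
    architecture="user-service issues tokens; billing-api and notification-service validate them",
    specialists=[
        Role("Security", "token handling and session risks"),
        Role("Legacy integration", "callers that depend on the current behavior"),
    ],
)
print(prompt)
```

Keeping the roles as data rather than hand-written text makes it easy to add or drop a specialist per problem without rewriting the rest of the prompt.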

Memory Management for Complex Conversations

When you're working on a complex feature over multiple conversations, traditional prompting breaks down because the AI does not carry context from one conversation to the next. Here's how to maintain "swarm memory":

Persistent Context: Start each session by re-establishing key architectural context.

Shared Knowledge Base: Reference previous decisions and maintain consistency.

Version Control for Prompts: Track major prompt changes like you track code changes.
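The three practices above can be sketched with a small JSON file that you reload at the start of each session. This is one possible setup under stated assumptions: the file name, the `architecture` and `decisions` keys, and every function name here are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def load_memory(path: Path) -> dict:
    """Return saved project memory, or an empty structure on first use."""
    if path.exists():
        return json.loads(path.read_text(encoding="utf-8"))
    return {"architecture": "", "decisions": []}


def save_memory(memory: dict, path: Path) -> None:
    """Persist memory so the next session can reload it."""
    path.write_text(json.dumps(memory, indent=2), encoding="utf-8")


def record_decision(memory: dict, decision: str) -> None:
    """Shared knowledge base: append a decision for later sessions to respect."""
    memory["decisions"].append(decision)


def session_preamble(memory: dict) -> str:
    """Persistent context: text to paste at the top of a new conversation."""
    decisions = "\n".join(f"- {d}" for d in memory["decisions"]) or "- (none yet)"
    return (
        f"Architecture summary:\n{memory['architecture']}\n\n"
        f"Decisions already made (do not contradict):\n{decisions}\n"
    )


def prompt_version(text: str) -> str:
    """Prompt versioning: a short content hash to note in commits or logs."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:8]


# Demo in a temporary directory; a real project would keep the file in the repo.
with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "swarm_memory.json"
    memory = load_memory(store)
    memory["architecture"] = "user-service issues tokens; billing-api validates them"
    record_decision(memory, "Keep the existing token format during the migration")
    save_memory(memory, store)
    reloaded = load_memory(store)

preamble = session_preamble(reloaded)
print(prompt_version(preamble))
print(preamble)
```

Committing the memory file alongside the code gives you prompt history through ordinary version control, and the hash makes it easy to tell which preamble produced a given answer.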

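This is where Augment Code's 200k context window becomes crucial. While tools with smaller windows lose track of your architectural decisions after a few exchanges, a window this large leaves room to reload your architecture summary, earlier decisions, and the relevant code at the start of each session, so the context of a complex refactoring project carries across multiple sessions.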

Coordination Protocols for Complex Problems

When working on features that span multiple services, establish "protocols" within your prompts to ensure consistent recommendations:

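Information Sharing Protocol: State what each role must report before it recommends a change, such as the services and interfaces it touches.

Conflict Resolution Protocol: Say how competing recommendations should be settled, for example by laying out both options and the trade-off between them.

Validation Protocol: Require every recommendation to name how it can be checked against the existing system.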
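One way to express the three protocols above is as standing rules prepended to every question. The rule wording below is an assumption made for illustration, as are the `PROTOCOLS` name and the `with_protocols` helper; adapt the rules to your own system.

```python
# Hypothetical rule text for each protocol; replace with your team's conventions.
PROTOCOLS = {
    "Information sharing": (
        "Before recommending a change, state which services and interfaces it touches."
    ),
    "Conflict resolution": (
        "When two recommendations disagree, present both, name the trade-off, "
        "and prefer the option that changes fewer public interfaces."
    ),
    "Validation": (
        "For every recommendation, name the existing test or check that would confirm it."
    ),
}


def with_protocols(question: str, protocols: dict[str, str] = PROTOCOLS) -> str:
    """Prefix a question with numbered protocol rules."""
    rules = "\n".join(
        f"{number}. {name}: {rule}"
        for number, (name, rule) in enumerate(protocols.items(), start=1)
    )
    return f"Follow these protocols in every answer:\n{rules}\n\nQuestion:\n{question}"


message = with_protocols(
    "How should the payment flow call the fraud detection service?"
)
print(message)
```

Because the rules live in one place, every prompt about the feature carries the same ground rules, which is what keeps recommendations consistent across services.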

Sarah, a staff engineer at an e-commerce company, needed to add payment processing that integrated with their fraud detection system. Using coordination protocols in her prompts, she got AI suggestions that understood her existing patterns and provided implementation approaches that fit their complex financial workflow.

Advanced Techniques for Legacy Systems

Legacy systems require special prompting approaches because they often have undocumented patterns and historical constraints:

Archaeological Prompting: Understand existing patterns before suggesting changes.

Constraint Discovery: Surface hidden dependencies.

Safe Evolution: Suggest changes that respect existing constraints.
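The three legacy techniques above work best in order, since each step relies on what the previous one surfaced. A minimal sketch, assuming you send the prompts one at a time; the instruction wording and the `legacy_prompts` helper are illustrative, not a prescribed format:

```python
# Each entry pairs a technique name with a hypothetical instruction for the model.
LEGACY_PHASES = [
    (
        "Archaeological prompting",
        "Describe the patterns this code already follows. Do not propose changes yet.",
    ),
    (
        "Constraint discovery",
        "List callers, data formats, and side effects tied to this code. "
        "Mark each item as confirmed in the code or inferred.",
    ),
    (
        "Safe evolution",
        "Propose the smallest change that achieves the goal while keeping every "
        "constraint found so far. Say which constraint each step could put at risk.",
    ),
]


def legacy_prompts(code: str, goal: str) -> list[str]:
    """Build one prompt per phase, in the order they should be asked."""
    total = len(LEGACY_PHASES)
    return [
        f"Step {step} of {total}: {name}\nGoal: {goal}\n{instruction}\n\n"
        f"Code under review:\n{code}"
        for step, (name, instruction) in enumerate(LEGACY_PHASES, start=1)
    ]


prompts = legacy_prompts(
    code="def apply_discount(order): ...",
    goal="Support per-region discount rules",
)
for text in prompts:
    print(text, end="\n\n")
```

Holding back change proposals until the third step is the point: it forces the existing patterns and constraints onto the page before anything is redesigned.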

Testing and Validation Strategies

When using advanced prompting for complex systems, validation becomes crucial:

Consistency Checking: Ensure AI recommendations don't conflict with each other.

Integration Testing: Verify suggestions work with existing systems.

Rollback Planning: Always have an escape route.
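Parts of this checklist can be automated once you record AI recommendations as data. The sketch below flags two things for manual review: components touched by more than one role, and recommendations with no rollback step. The `Recommendation` fields and the example entries are assumptions; integration testing still needs your real test suite.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Recommendation:
    role: str           # which specialist perspective produced it
    component: str      # service or module it changes
    change: str         # short description of the change
    rollback: str = ""  # how to undo it; empty means none was given


def review_flags(recs: list[Recommendation]) -> list[str]:
    """Return human-readable flags; an empty list means nothing to review."""
    flags = []
    by_component = defaultdict(list)
    for rec in recs:
        by_component[rec.component].append(rec)
        if not rec.rollback.strip():
            flags.append(
                f"{rec.component}: no rollback step for '{rec.change}' ({rec.role})"
            )
    for component, group in by_component.items():
        roles = sorted({rec.role for rec in group})
        if len(roles) > 1:
            flags.append(
                f"{component}: touched by {', '.join(roles)}; check for conflicts"
            )
    return flags


# Hypothetical recommendations collected from one prompting session.
flags = review_flags([
    Recommendation("Security", "user-service", "rotate signing keys",
                   "restore previous key set"),
    Recommendation("Performance", "user-service", "cache token validation"),
    Recommendation("Testing", "billing-api", "add contract tests",
                   "delete the new test files"),
])
for flag in flags:
    print(flag)
```

The check is deliberately coarse. Two roles touching the same component is not necessarily a conflict, only a prompt for a person to look.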

Real-World Implementation Example

Alex, an engineering manager at a SaaS company, needed his team to modernize their authentication system without breaking existing integrations. Here's how he used advanced prompting:

Phase 1 - System Analysis:

Phase 2 - Coordinated Planning:

Phase 3 - Implementation Guidance:
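One way to chain three phases like these, as a hypothetical sketch rather than the prompts Alex's team actually used: each phase's answer is appended to the context for the next. The instruction text, the `run_phases` helper, and the `ask` callable (a stand-in for whatever AI tool you call) are all assumptions.

```python
from typing import Callable

# Phase names follow the article; the instructions are invented for illustration.
PHASES = [
    ("System analysis",
     "Map how authentication currently works and which integrations depend on it."),
    ("Coordinated planning",
     "Using the analysis, draft a migration plan that keeps existing integrations working."),
    ("Implementation guidance",
     "Using the plan, give step-by-step changes, each with a way to verify it."),
]


def run_phases(ask: Callable[[str], str], context: str) -> list[str]:
    """Run the phases in order, carrying each answer forward as context."""
    answers = []
    carried = context
    for name, instruction in PHASES:
        prompt = f"Phase: {name}\n{instruction}\n\nContext so far:\n{carried}"
        answer = ask(prompt)
        answers.append(answer)
        carried = f"{carried}\n\n[{name} output]\n{answer}"
    return answers


def fake_ask(prompt: str) -> str:
    """Placeholder for a real model call; echoes the phase line only."""
    return f"(model answer to '{prompt.splitlines()[0]}')"


answers = run_phases(fake_ask, "Authentication lives in user-service.")
for answer in answers:
    print(answer)
```

Passing `ask` in as a function keeps the sketch independent of any particular tool and makes the chaining logic testable without a model.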

The result: his team completed the authentication modernization 60% faster than previous similar projects, with zero breaking changes to existing client integrations.

Tools and Context Management

Different AI tools handle complex prompting differently:

Augment Code: Excels at maintaining architectural context across long conversations about complex codebases. The 200k context window means you can include extensive architectural documentation without losing coherence.

Standard AI Tools: Work well for single-session complex prompting but struggle to maintain context across multiple conversations about the same project.

API-Based Solutions: Require custom context management but allow more control over prompt structure and memory persistence.
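With an API-based setup, deciding what goes into the context window is your job. A minimal sketch of one approach: pack documents in priority order until a token budget is reached. It assumes a rough estimate of four characters per token, which is a heuristic and not a real tokenizer, and the document titles are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough size estimate; assumes about 4 characters per token."""
    return max(1, len(text) // 4)


def pack_context(
    docs: list[tuple[str, str]], budget_tokens: int
) -> tuple[str, list[str]]:
    """Pack (title, body) pairs in priority order.

    Returns the packed text and the titles that did not fit.
    """
    parts, skipped, used = [], [], 0
    for title, body in docs:
        section = f"### {title}\n{body}\n"
        cost = estimate_tokens(section)
        if used + cost > budget_tokens:
            skipped.append(title)  # too large for the remaining budget
            continue
        parts.append(section)
        used += cost
    return "\n".join(parts), skipped


# Placeholder bodies stand in for real documentation.
docs = [
    ("Architecture overview", "a" * 400),
    ("Decision log", "b" * 400),
    ("Full billing schema", "c" * 4000),
]
packed, skipped = pack_context(docs, budget_tokens=300)
print("skipped:", skipped)
```

Listing what was skipped matters as much as what was packed: it tells you which context the model did not see when you judge its answer. For an accurate budget, swap the estimate for your provider's own token counter.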

Getting Started This Week

Don't try to implement all these techniques at once. Start with role-based prompting for your next complex feature:

- Identify the specialists you'd want on your team for this problem

- Structure your prompt to address each specialist role explicitly

- Ask for coordination between the different perspectives

- Maintain context across multiple conversations about the same feature

The goal isn't to replace your architectural thinking - it's to get AI assistance that understands and respects the complexity of your actual systems.

For comprehensive guidance on advanced prompting techniques, explore detailed frameworks and technical documentation that provide practical approaches for working with complex codebases using AI assistance.

The future belongs to developers who can structure prompts as thoughtfully as they structure code. Start learning these techniques now, before your codebase outgrows your AI tool's ability to help.

Written by

Molisha Shah

GTM and Customer Champion