Most arguments about monorepo versus polyrepo miss the point. People treat it like a religious debate, when it's actually more like choosing between living in a city or the suburbs. The right answer depends on who you are and what you're optimizing for.

But here's what's interesting. AI coding assistants with massive context windows are changing this calculation in ways nobody predicted. Not because they make one choice obviously better, but because they shift which trade-offs matter.

Think about what happens when a developer debugging an authentication bug can see the auth service, the API gateway, and three different client implementations in the same context window. That's fundamentally different from having to grep through five separate repositories, each with its own mental model. The AI can actually understand how these pieces fit together, not just pattern-match against similar code it's seen before.

This matters more than it sounds like it should.

What We Thought We Knew

Before AI tools got good at understanding large codebases, the monorepo versus polyrepo choice came down to a pretty clear set of trade-offs.

Monorepos gave you atomic changes across services. Uber's iOS team switched to a monorepo specifically to make this easier. When you need to update an API contract and all its clients at once, having everything in one repo means one commit, one review, one deploy. No coordinating pull requests across six different repositories, no version compatibility matrices, no deployment ordering dependencies.

You also got unified dependency management, which sounds boring until you've spent a week tracking down why service A works fine but service B crashes, only to discover they're using different versions of the same library with subtly incompatible behavior.
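Catching that kind of drift is mechanical once you can see every service's dependencies in one place. Here's a minimal sketch. It assumes each service's pinned dependencies have already been parsed into a simple name-to-version map. A real setup would read package.json, go.mod, requirements.txt, or whatever your stack uses, and the service and library names here are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def find_version_drift(manifests: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, List[str]]]:
    """Report libraries that different services pin to different versions.

    manifests maps service name -> {library name: pinned version}.
    The result maps library -> {version: [services using that version]},
    and only includes libraries with more than one version in play.
    """
    usage: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: defaultdict(list))
    for service in sorted(manifests):
        for library, version in manifests[service].items():
            usage[library][version].append(service)
    return {lib: dict(versions) for lib, versions in usage.items() if len(versions) > 1}


if __name__ == "__main__":
    # Hypothetical services and libraries, for illustration only.
    manifests = {
        "service-a": {"jsonlib": "2.1.0", "httpclient": "1.4.0"},
        "service-b": {"jsonlib": "2.3.0", "httpclient": "1.4.0"},
    }
    print(find_version_drift(manifests))
    # {'jsonlib': {'2.1.0': ['service-a'], '2.3.0': ['service-b']}}
```

In a monorepo with a single lockfile this check mostly becomes unnecessary. In a polyrepo you'd have to collect the manifests from every repository first, which is exactly the friction described above.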

The downside was build complexity. Once your monorepo hits a certain size, building everything becomes a serious infrastructure problem. The New Stack describes this well: you need to treat your build system like horizontally scalable infrastructure. That's not a weekend project.

Polyrepos gave you the opposite trade-offs. Each service stayed small and comprehensible. Teams could move fast without coordinating with everyone else. You could deploy service A twenty times while service B stayed stable for months. Different teams could use different tech stacks if that made sense.

But cross-service debugging became painful. Following a request through six different services meant opening six different repositories, each with its own logging format and development setup. Integration testing required coordinating the state of multiple repos. And every team implemented CI/CD slightly differently, which seemed fine until you needed to standardize security scanning across fifty repositories.

None of this was wrong. These trade-offs were real. But they were based on an assumption about how hard it is to understand code across service boundaries.

What Actually Changed

AI assistants with large context windows break that assumption in a specific way. They don't eliminate the trade-offs between monorepo and polyrepo. They change which trade-offs matter most.

Here's why. When a human developer works across service boundaries in a polyrepo, they're context-switching between different mental models. They need to remember how the auth service structures its responses, how the API gateway transforms them, and how the client expects them. Each context switch has a cost.

AI assistants handle this differently. GitHub Copilot now has a 64,000-token context window. Cursor works with models that handle even more. That's enough to fit multiple services' core logic in a single context.
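To make "enough to fit" a bit more concrete, here's a back-of-the-envelope sketch. It assumes the common rule of thumb of roughly four characters per token. Real tokenizers vary, and code often tokenizes less efficiently than prose. The service names and sizes are hypothetical. The sketch greedily packs services into a budget in priority order and holds some tokens back for the prompt and the answer.

```python
import math
from typing import List, Tuple

# Rough rule of thumb (an assumption): ~4 characters per token.
# Real tokenizers vary by model, language, and how dense the code is.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def pack_context(sources: List[Tuple[str, str]], budget: int, reserve: int = 0) -> Tuple[List[str], int]:
    """Greedily include sources, most important first, until the budget is spent.

    reserve holds tokens back for the prompt itself and the model's answer.
    Returns the names that fit and the estimated tokens they use.
    """
    available = budget - reserve
    included: List[str] = []
    used = 0
    for name, text in sources:
        cost = estimate_tokens(text)
        if used + cost <= available:  # skip anything that would overflow
            included.append(name)
            used += cost
    return included, used


if __name__ == "__main__":
    # Hypothetical services, sized in characters of "core logic".
    services = [
        ("auth-service", "x" * 120_000),
        ("api-gateway", "x" * 80_000),
        ("web-client", "x" * 60_000),
    ]
    print(pack_context(services, budget=64_000, reserve=4_000))   # (['auth-service', 'api-gateway'], 50000)
    print(pack_context(services, budget=200_000, reserve=4_000))  # (['auth-service', 'api-gateway', 'web-client'], 65000)
```

With a 64,000-token budget and 4,000 tokens reserved, the first two services (about 50,000 estimated tokens) fit and the web client doesn't. At 200,000 tokens all three fit with room to spare.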

When you ask an AI assistant to help refactor an API endpoint, it can see both sides of the contract at once. It understands how changing the auth service will affect the three clients that depend on it. Not because it's magic, but because it can literally read all that code at the same time.

This tips the scales toward monorepos in a way that's easy to underestimate. The main historical argument for polyrepos was that services are easier to understand when they're isolated. But if your AI assistant can understand cross-service dependencies anyway, that benefit matters less.

However, and this is the interesting part, it doesn't make monorepos obviously better, because the build complexity problem didn't go away. If anything, it got harder. Now you need build systems that can both scale horizontally and provide structured information to AI tools about what depends on what.

Nx figured this out early. They built Model Context Protocol servers specifically so AI assistants could understand project relationships. That's not a feature you add as an afterthought. It's baked into how the tool works.

The Counterintuitive Part

Here's what surprised teams actually using AI assistants with different repository structures. The biggest benefit wasn't faster coding. It was better understanding of what already exists.

Senior developers spend more time reading code than writing it. When working in a monorepo, an AI assistant with enough context can answer questions like "where else do we handle authentication errors?" by actually reading the codebase, not just keyword-matching. It can tell you "these three services all handle it differently, and here's why."

That's genuinely useful. More useful than autocomplete, honestly.

But it creates a weird pressure on repository architecture. The more services you have in separate repositories, the less any single AI interaction can tell you about the system as a whole. You can still ask questions, but the answers are narrower. Less useful.

This pushes toward monorepos for reasons that have nothing to do with traditional monorepo benefits like atomic commits. You want a monorepo because your AI assistant is more helpful when it can see more.

Yet polyrepos aren't dying. Uber's Android team went with a monorepo, but plenty of successful engineering orgs stick with polyrepos. Why?

Because team autonomy turns out to matter more than people thought. When fifty engineers all work in the same repository, you need agreement on testing frameworks, code style, deployment processes, everything. That coordination overhead is real. It slows teams down in ways that better AI tools don't fix.

With polyrepos, teams can move independently. They can experiment with new tools without getting consensus from forty other developers. They can deploy five times a day or once a month without affecting anyone else. That flexibility has value that AI assistants don't replace.

What This Means in Practice

So where does this leave engineering teams trying to choose?

If your services talk to each other constantly and you're already coordinating changes across them, monorepos make more sense now than they did before AI tools. The cross-service visibility that AI assistants provide is genuinely valuable. You'll need to invest in build infrastructure, whether that's Bazel, Nx, or something similar, but the payoff is that your AI assistant becomes much more useful.

Augment Code handles 200k tokens, which is enough for pretty substantial codebases. That's not academic. It's the difference between an AI assistant that can help you understand a subsystem and one that understands how subsystems interact.

If your services are more loosely coupled, or if team autonomy matters more than cross-service coordination, polyrepos still make sense. AI tools help here too, but differently. They make each individual repository easier to work with. They don't solve the cross-repository coordination problem, because that's not really a code understanding problem. It's an organizational problem.

There's a third option that more teams are discovering. Core services in a monorepo, experimental or auxiliary services in separate repos. This hybrid approach gives AI assistants good context for the parts of the system that matter most while preserving team autonomy for everything else.

The Hedge Foundation actually measured this when they migrated. Build times dropped 60%. Developer productivity went up 40%. That's not marketing fluff. Those are real numbers from real teams.

But here's the thing about those numbers. They came from fixing real problems the team had. The monorepo helped because their services were tightly coupled and they were spending too much time coordinating changes. If your services aren't tightly coupled, you won't see those gains.
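One way to check whether your own services are tightly coupled, before committing to a migration, is to look at your change history. The sketch below assumes you've already reduced that history to one set of touched services per logical change, such as a feature or a ticket. In a polyrepo, a single logical change may span several coordinated pull requests. The service names are hypothetical, and what share of cross-service changes counts as "tightly coupled" is a judgment call. The sketch doesn't suggest a threshold.

```python
from collections import Counter
from itertools import combinations
from typing import List, Set, Tuple


def coupling_report(changes: List[Set[str]]) -> Tuple[float, Counter]:
    """Summarize how often logical changes span more than one service.

    changes holds one set of touched services per logical change.
    Returns the fraction of changes that crossed service boundaries and a
    count of how often each pair of services changed together.
    """
    if not changes:
        return 0.0, Counter()
    cross_service = [touched for touched in changes if len(touched) > 1]
    pairs: Counter = Counter()
    for touched in cross_service:
        for pair in combinations(sorted(touched), 2):
            pairs[pair] += 1
    return len(cross_service) / len(changes), pairs


if __name__ == "__main__":
    # Hypothetical history: which services each change had to touch.
    history = [
        {"auth", "gateway"},
        {"auth", "gateway", "web"},
        {"billing"},
        {"web"},
    ]
    share, pairs = coupling_report(history)
    print(share)                 # 0.5
    print(pairs.most_common(1))  # [(('auth', 'gateway'), 2)]
```

Pairs that keep showing up together are the candidates for sharing a repository. Services that almost never co-change are the ones that lose little by staying separate.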

The Build System Problem

This brings us to the part nobody wants to think about but everyone has to deal with. Build systems.

If you're going with a monorepo and you have more than a dozen services, you need a serious build system. Not a pile of Makefiles held together with shell scripts and hope. Something designed to handle complex dependency graphs at scale.

Bazel does this. Airbnb migrated to Bazel and documented the whole thing. It wasn't easy. But it worked, and now their builds are both faster and more reliable.

Nx takes a different approach. Instead of trying to be language-agnostic like Bazel, it focuses primarily on JavaScript/TypeScript and provides deep integration with AI tools. It can tell AI assistants about project structure and dependencies in a way they can actually use.

This matters more than you'd think. An AI assistant that understands your build graph can suggest refactorings that won't break the projects depending on the code it's changing. One that doesn't has to infer those dependencies from whatever code it happens to see, which is much closer to guessing.
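The core question a build graph answers for a refactoring is "what depends on this, directly or transitively?" Nx exposes this through `nx affected`, and Bazel can answer it with an `rdeps` query. The sketch below is a simplified illustration of the idea, not how either tool implements it. It assumes the graph is given as a plain map from each project to its direct dependencies, and the project names are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, Set


def affected_projects(deps: Dict[str, Set[str]], changed: Iterable[str]) -> Set[str]:
    """Return the changed projects plus everything that transitively depends on them.

    deps maps each project to the set of projects it depends on directly.
    """
    # Invert the edges so we can walk from a dependency to its dependents.
    dependents: Dict[str, Set[str]] = {project: set() for project in deps}
    for project, requirements in deps.items():
        for requirement in requirements:
            dependents.setdefault(requirement, set()).add(project)

    affected = set(changed)
    queue = deque(affected)
    while queue:  # breadth-first walk up the graph
        current = queue.popleft()
        for dependent in dependents.get(current, set()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


if __name__ == "__main__":
    # Hypothetical project graph: project -> what it depends on.
    graph = {
        "shared-types": set(),
        "auth-service": {"shared-types"},
        "billing": {"shared-types"},
        "api-gateway": {"auth-service", "shared-types"},
        "web-client": {"api-gateway"},
        "ios-client": {"api-gateway"},
    }
    print(sorted(affected_projects(graph, {"auth-service"})))
    # ['api-gateway', 'auth-service', 'ios-client', 'web-client']
```

A change to `auth-service` pulls in the gateway and both clients but not `billing`. That's the list of things an assistant (or a CI pipeline) needs to check before calling a refactoring safe.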

The polyrepo equivalent is simpler but less powerful. Each repo has its own build process, probably something standard for the language. No need for sophisticated caching or dependency analysis. But no way to optimize across repositories either.
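The "sophisticated caching" that monorepo build tools depend on largely comes down to content addressing. A target's cached output is keyed by a hash of everything that went into it, so unchanged targets don't rebuild, on your machine or on anyone else's that shares the cache. Bazel and Nx both work on this principle, but what follows is a simplified illustration of the idea, not how either tool actually computes its keys. The targets, files, and dependency map are hypothetical inputs, and the graph is assumed to be acyclic.

```python
import hashlib
from typing import Dict, List, Optional


def cache_key(
    target: str,
    sources: Dict[str, Dict[str, bytes]],
    deps: Dict[str, List[str]],
    memo: Optional[Dict[str, str]] = None,
) -> str:
    """Content-addressed key for a build target (assumes the graph is acyclic).

    The key covers the target's own files and, recursively, the keys of its
    dependencies. If none of those inputs changed, the key is unchanged and a
    previously built output can be reused from a local or shared cache.
    """
    if memo is None:
        memo = {}
    if target in memo:
        return memo[target]
    h = hashlib.sha256()
    h.update(target.encode())
    files = sources.get(target, {})
    for path in sorted(files):  # sorted, so dict ordering can't change the key
        content = files[path]
        h.update(path.encode() + b"\0")
        h.update(len(content).to_bytes(8, "big"))  # length prefix keeps file boundaries unambiguous
        h.update(content)
    for dep in sorted(deps.get(target, [])):
        h.update(cache_key(dep, sources, deps, memo).encode())
    memo[target] = h.hexdigest()
    return memo[target]
```

With `app` depending on `lib`, editing a file in `lib` changes the key for `app` (so it rebuilds) but leaves an unrelated target's key alone (so it's a cache hit). A polyrepo can skip all of this precisely because no single build ever spans more than one repository.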

Migration Is Messier Than You Think

Teams that migrate from polyrepo to monorepo or vice versa consistently underestimate how messy it gets.

Graphite's migration guide walks through the process step by step. You need to preserve git history. You need to reorganize directory structures. You need to merge different CI/CD configurations. And you need to do all of this without breaking production.
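Preserving history is the most mechanical of those steps. One common approach uses the separate `git-filter-repo` tool to rewrite each repository's history so every file lives under a subdirectory, then merges the rewritten history into the monorepo with `git merge --allow-unrelated-histories`. This sketch only builds the command list so you can review it before running anything. It assumes git-filter-repo is installed, the commands run from the monorepo root, and each repo's main line is called `main`. The repo names, URLs, and paths are hypothetical. It does nothing about CI/CD configs or ownership, which are the harder parts.

```python
import shlex
from dataclasses import dataclass
from typing import List


@dataclass
class RepoImport:
    name: str         # remote name and scratch-clone name, e.g. "auth-service"
    url: str          # clone URL of the existing polyrepo
    subdir: str       # where its files should live in the monorepo, e.g. "services/auth"
    branch: str = "main"


def migration_plan(imports: List[RepoImport], workdir: str = "/tmp/monorepo-import") -> List[List[str]]:
    """Return git commands (as argv lists) that fold each repo into the monorepo."""
    plan: List[List[str]] = []
    for repo in imports:
        clone_dir = f"{workdir}/{repo.name}"
        plan += [
            # Fresh clone: git filter-repo refuses to rewrite non-fresh clones by default.
            ["git", "clone", repo.url, clone_dir],
            # Rewrite history so every file, in every commit, lives under subdir.
            ["git", "-C", clone_dir, "filter-repo", "--to-subdirectory-filter", repo.subdir],
            ["git", "remote", "add", repo.name, clone_dir],
            ["git", "fetch", repo.name],
            ["git", "merge", "--allow-unrelated-histories", "--no-edit", f"{repo.name}/{repo.branch}"],
            ["git", "remote", "remove", repo.name],
        ]
    return plan


if __name__ == "__main__":
    repos = [RepoImport("auth-service", "https://example.com/org/auth-service.git", "services/auth")]
    for command in migration_plan(repos):
        print(shlex.join(command))
```

After these commands run, `git log -- services/auth` shows the auth service's original commits, with new hashes since the history was rewritten.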

AI assistants help with some of this. They're good at suggesting how to reorganize code and at updating import paths. They're less good at the organizational parts, like deciding which team owns which directory or how to handle merge conflicts when two repos used the same filename.
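Updating import paths is the kind of change that's easy to describe and tedious to do by hand. Here's a deliberately simple sketch for Python sources. It assumes each moved top-level package maps to a new dotted path, and the package names are hypothetical. It only touches the leading package in `import x` and `from x import ...` lines. Multi-name imports, dynamic imports, and string references would need a proper syntax-aware codemod rather than a regex.

```python
import re
from typing import Dict


def rewrite_imports(source: str, moves: Dict[str, str]) -> str:
    """Rewrite Python import lines whose top-level package has moved.

    moves maps an old package name to its new dotted path,
    e.g. {"auth": "services.auth"}.
    """
    if not moves:
        return source
    names = "|".join(re.escape(old) for old in moves)
    pattern = re.compile(
        rf"^([ \t]*(?:from|import)[ \t]+)({names})(?=[\s.,]|$)",
        re.MULTILINE,
    )
    # The lookahead requires a boundary after the name, so "authz" is left alone.
    return pattern.sub(lambda m: m.group(1) + moves[m.group(2)], source)


if __name__ == "__main__":
    before = "import auth\nfrom auth.tokens import verify\nimport authz\n"
    print(rewrite_imports(before, {"auth": "services.auth"}))
```

Only the first two lines change. `authz` is a different package and is left as-is.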

The real challenge isn't technical. It's coordination. Everyone needs to switch at once, which means everyone needs to be convinced it's worth the disruption. That's hard when different teams have different problems and different priorities.

Some teams solve this by not doing a full migration. They move their core services to a monorepo but leave peripheral stuff in separate repos. This is messy from an architectural purity standpoint but often works fine in practice.

What Nobody Tells You

Here's something most discussions of monorepo versus polyrepo leave out. The choice matters less than how committed you are to making it work.

Teams that succeed with monorepos invest in build infrastructure, establish clear coding standards, and maintain discipline about not turning the repo into a dumping ground for everything. Teams that succeed with polyrepos invest in service contracts, establish clear ownership boundaries, and maintain discipline about not creating tight coupling between services.
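"Investing in service contracts" often means consumer-driven contract tests. Each client writes down the fields it actually relies on, and the provider's CI checks its responses against every client's expectations. Pact is a well-known tool for this. What follows is not its API, just a minimal sketch of the idea, with a hypothetical auth response and a hypothetical client contract.

```python
from typing import Any, Dict, List


def check_contract(expected: Dict[str, Any], response: Dict[str, Any], path: str = "") -> List[str]:
    """Compare a provider response against the fields a consumer relies on.

    expected maps field name -> Python type, or a nested dict for nested objects.
    Returns human-readable violations; an empty list means the contract holds.
    Extra fields in the response are allowed, since consumers ignore what they don't use.
    """
    problems: List[str] = []
    for field, spec in expected.items():
        where = f"{path}.{field}" if path else field
        if field not in response:
            problems.append(f"missing field: {where}")
        elif isinstance(spec, dict):
            if isinstance(response[field], dict):
                problems += check_contract(spec, response[field], where)
            else:
                problems.append(f"{where}: expected object, got {type(response[field]).__name__}")
        elif not isinstance(response[field], spec):
            problems.append(f"{where}: expected {spec.__name__}, got {type(response[field]).__name__}")
    return problems


if __name__ == "__main__":
    # What a hypothetical web client needs from a hypothetical auth service.
    web_client_contract = {"user_id": str, "expires_at": int, "claims": {"role": str}}
    response = {"user_id": "u-123", "expires_at": "soon", "claims": {}}
    print(check_contract(web_client_contract, response))
    # ['expires_at: expected int, got str', 'missing field: claims.role']
```

Because each repository can run this check on its own, the contract does the job a shared repository would otherwise do: it tells the provider team which clients a change would break, before anyone deploys.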

The AI part changes the ratio slightly in favor of monorepos by making cross-service understanding easier. But it doesn't change the fundamental requirement that you need to commit to making whichever approach you choose work well.

Augment Code can help you work more effectively in either architecture. The 200k-token context window means it can understand a substantial monorepo or coordinate across several polyrepo services. But it can't fix organizational dysfunction or compensate for neglected build infrastructure.

What it can do is make the code understanding problem easier. And that turns out to be valuable enough that it's worth factoring into your architecture decisions.

The Broader Pattern

There's a pattern here that extends beyond just repository architecture. AI tools are changing how we think about complexity in software systems.

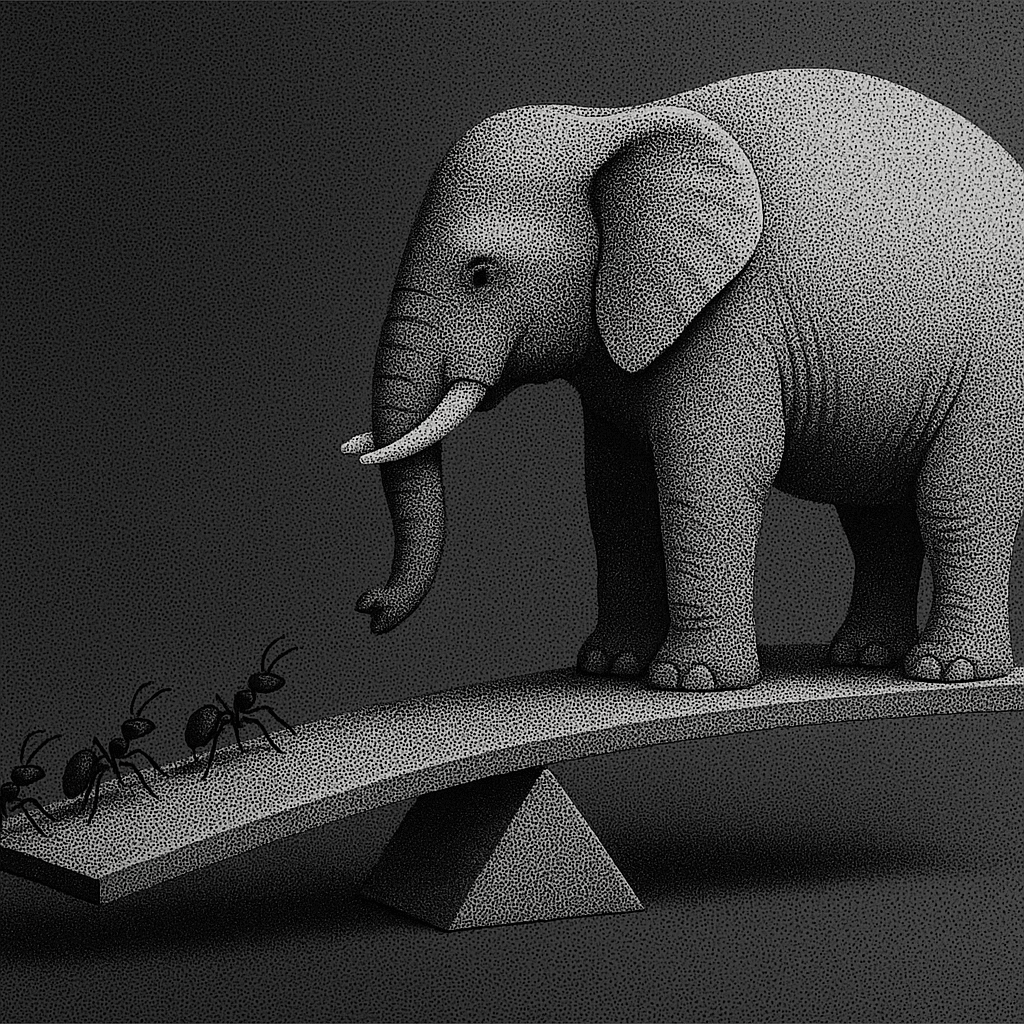

Historically, we dealt with complexity by decomposing it. Break big systems into smaller pieces that you can understand independently. That's the whole microservices philosophy. It's why polyrepos made sense.

But AI assistants with large context windows give us another option. Not decomposing complexity, but making complexity more navigable. You still have a large system, but the tools for understanding it are better.

This doesn't make decomposition wrong. Sometimes you really do need to break things into smaller pieces. But it does mean the trade-offs are different. The cost of keeping things together is lower than it used to be, because the tools for understanding large systems are better.

That's going to affect more than just whether you use a monorepo. It's going to affect how big you let services get before splitting them, how much shared code you tolerate, how you think about abstraction boundaries. All the decisions that involve "how much complexity can developers handle?"

The answer to that question is changing. Not because developers are getting smarter, but because their tools are getting better at helping them navigate complexity.

That's the real story here. Not monorepo versus polyrepo, but how we're learning to work with complex systems in a world where AI assistants can help us understand what's going on.

Teams that figure this out early will move faster than their competitors. Not because they have better AI tools, though that helps. But because they've learned to structure their code in ways that AI tools can work with effectively.

That's not about choosing the right repository architecture. It's about understanding how AI changes what's possible and making architectural decisions accordingly.

If you're working on a large codebase and haven't tried Augment Code yet, that's worth doing. The difference between an AI assistant that understands 8,000 tokens and one that handles 200,000 tokens isn't incremental. It's a different category of tool. One helps you autocomplete. The other helps you understand.

And in the end, understanding is what makes the difference between code that works and systems you can actually maintain.

Written by

Molisha Shah

GTM and Customer Champion