TL;DR

Retrieval-Augmented Generation fails on enterprise codebases because vector similarity cannot capture architectural dependencies across 100,000+ files, so it misses the relationships that break migrations when they are overlooked. This article makes the case for Cache-Augmented Generation, which loads complete subsystems into million-token context windows, and points to Airbnb's 75% automation rate on its React Testing Library migration as supporting evidence.

You're a senior engineer at a company with a massive Rails codebase. Your team needs to migrate the authentication system to a new microservice. You fire up your AI coding assistant, expecting it to understand the intricate web of controllers, middleware, database models, and configuration files that make authentication work.

Instead, the AI gives you a basic login form. It missed the OAuth integrations, the complex permission hierarchies, the audit logging, and the session management that took your team years to build. Why? Because it was reading random paragraphs from your codebase instead of understanding the whole story.

This is the fundamental problem with Retrieval-Augmented Generation (RAG) for code. Everyone's trying to make retrieval smarter, but what if retrieval is the wrong approach entirely?

Here's what most people don't realize: the future of AI code assistance isn't better search. It's no search at all.

The Retrieval Trap

Think about how RAG works. You ask a question, the system searches through chunks of your code, finds the most "similar" pieces, and feeds them to an AI model. This sounds reasonable until you actually try it on a real codebase.

Picture trying to understand a novel by reading random pages. You might get lucky and find pages that mention the main character, but you'll miss the plot threads, the character development, and the subtle connections that make the story coherent. That's exactly what RAG does to your code.

The problem gets worse with enterprise codebases. In a monorepo with 100,000 files, "similar" doesn't mean "related." A payment processing module might break when you change error handling in a completely different service, but vector similarity can't capture that relationship.
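To make the "similar is not related" point concrete, here is a minimal sketch of similarity-based retrieval. It uses a bag-of-words cosine score as a toy stand-in for a learned embedding model, and the file names and contents in `CHUNKS` are hypothetical. A real embedding model would score text more subtly, but it would still rank by similarity to the query rather than by dependency.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a toy stand-in for a learned embedding model.
    return re.findall(r"[a-z]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 2) -> list[str]:
    # Rank every chunk by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda name: cosine(q, Counter(tokenize(chunks[name]))), reverse=True)
    return ranked[:k]


# Hypothetical chunks: charge.py calls raise_on_failure, which lives in shared/errors.py.
CHUNKS = {
    "payments/charge.py": "def charge_payment(order): process payment for order; raise_on_failure(result)",
    "payments/refund.py": "def refund_payment(order): process refund payment for order",
    "shared/errors.py": "def raise_on_failure(result): map gateway codes to exceptions",
    "docs/README.md": "how to run the linter and format files",
}

top = retrieve("payment processing", CHUNKS, k=2)
print(top)
```

Both payment files rank first because they share the word "payment" with the query. `shared/errors.py` scores zero and is never retrieved, even though `charge.py` depends on it.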

Here's the kicker: as codebases get more complex, RAG gets worse, not better. More code means more irrelevant chunks to sift through. More dependencies mean more ways for the system to miss critical connections.

What Everyone Gets Wrong About Context

The conventional wisdom says you need smarter retrieval. Better embeddings, more sophisticated search algorithms, hybrid approaches that combine multiple retrieval methods. The entire AI industry is racing to solve this problem.

But what if they're solving the wrong problem?

Cache-Augmented Generation (CAG) takes a radically different approach. Instead of getting better at finding needles in haystacks, it throws out the haystack entirely. CAG loads your entire codebase, or the relevant parts of it, directly into the AI's memory before it starts working.

This sounds crazy expensive until you realize something interesting about modern AI models. Context windows have exploded. Claude now supports context windows of up to 1 million tokens. That's enough for about 75,000 lines of code in a single request.

Suddenly, loading your entire authentication system into memory isn't just possible. Once you count the dozens of retrieval calls it replaces, it can also be the cheaper option overall.
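The two figures above imply roughly 13 tokens per line of code. A quick sketch of that arithmetic follows, with a check for whether a subsystem of a given size would fit. The `reserve_tokens` value is an assumption for instructions and generated output, not a number from any vendor, and real token counts depend on the language and the tokenizer.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # figure quoted above
LINES_PER_WINDOW = 75_000          # figure quoted above

# Implied average density: about 13.3 tokens per line of code.
tokens_per_line = CONTEXT_WINDOW_TOKENS / LINES_PER_WINDOW


def fits_in_context(line_count: int, reserve_tokens: int = 50_000) -> bool:
    """Return True if the code plus a reserve for instructions and output fits in the window.

    reserve_tokens is a hypothetical allowance, chosen only for illustration.
    """
    return line_count * tokens_per_line + reserve_tokens <= CONTEXT_WINDOW_TOKENS


print(round(tokens_per_line, 1))
print(fits_in_context(40_000), fits_in_context(75_000))
```

Under these assumptions a 40,000-line subsystem fits comfortably, while a full 75,000 lines leaves no room for the prompt or the answer.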

How CAG Actually Works

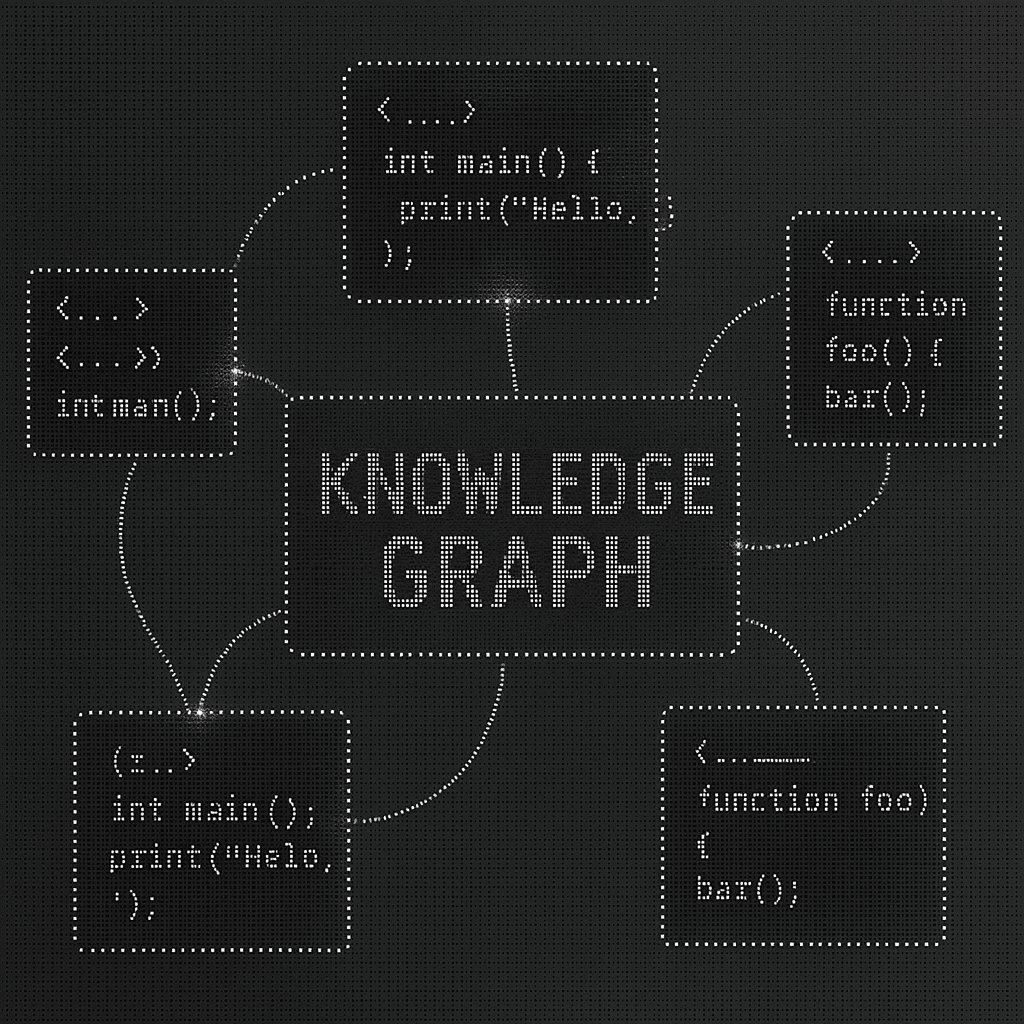

The process is surprisingly simple. Instead of chunking your code and shoving it into a vector database, CAG builds what researchers call Programming Knowledge Graphs. Think of it as a map of how everything in your codebase connects to everything else.

When you ask CAG to refactor your authentication system, it doesn't search for auth-related code. It already has the complete picture: every function call, every import, every configuration file, every test. It knows that changing the User model will affect the AuthController, which will affect the middleware, which will affect the API endpoints.

The workflow looks like this:

- CAG processes your entire codebase into a dependency graph. This happens once, upfront, and takes minutes instead of hours.

- When you make a request, it loads all the relevant context into the AI's memory. No searching, no retrieval, no missing pieces.

- Finally, it generates code with complete understanding of your system. One shot, with everything it needs to know already loaded.
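The first two steps can be sketched in a few lines. This is a minimal illustration, not a description of any production system: it assumes Python sources held in memory and uses only import statements as edges, whereas a real dependency graph for a Rails or Node.js codebase would need a language-aware parser and would also track calls, configuration, and tests. The module names in `FILES` are hypothetical.

```python
import ast
from collections import deque


def imports_of(source: str) -> set[str]:
    """Module names imported anywhere in a piece of Python source."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            found.add(node.module)
    return found


def build_graph(files: dict[str, str]) -> dict[str, set[str]]:
    """Step 1: map each module to the in-repo modules it imports."""
    return {name: imports_of(src) & set(files) for name, src in files.items()}


def reachable(edges: dict[str, set[str]], seed: str) -> set[str]:
    """Breadth-first walk from seed along the given edges."""
    seen, queue = {seed}, deque([seed])
    while queue:
        for nxt in edges.get(queue.popleft(), ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def relevant_modules(graph: dict[str, set[str]], seed: str) -> set[str]:
    """Step 2: everything the seed depends on, plus everything that depends on it."""
    reverse = {name: set() for name in graph}
    for name, deps in graph.items():
        for dep in deps:
            reverse[dep].add(name)
    return reachable(graph, seed) | reachable(reverse, seed)


# Hypothetical repository: middleware -> auth_controller -> user -> audit.
FILES = {
    "user": "import audit\n",
    "auth_controller": "import user\n",
    "middleware": "from auth_controller import login\n",
    "audit": "",
    "billing": "import os\n",
}

selected = relevant_modules(build_graph(FILES), "user")
print(sorted(selected))
```

Starting from `user`, the walk pulls in `audit` (a dependency) and `auth_controller` and `middleware` (dependents), and leaves the unrelated `billing` module out. The selection follows the graph, not textual similarity to a query.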

Compare this to RAG, which might make five or ten retrieval calls as it discovers what it doesn't know. Each call adds latency. Each call risks missing something important.

The Economics of Understanding

Here's where it gets interesting. CAG costs more per request than RAG. Token pricing means loading 200,000 tokens into context isn't free. But what's the real cost of a broken migration because your AI missed a critical dependency?

Think about it this way. Would you rather pay a developer to write code by giving them access to the entire codebase, or by showing them random files and hoping they can piece together how everything works?

The answer seems obvious when you put it like that. But that's exactly the choice between CAG and RAG.

Early implementations suggest CAG dramatically reduces the need for back-and-forth iterations. When the AI understands your entire system upfront, it gets things right the first time more often. The higher per-request cost gets offset by fewer requests overall.
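A rough way to reason about that trade-off is to compare token spend across a whole task instead of per request. In the sketch below, the 200,000-token context and the ten retrieval calls come from the figures mentioned in this article. The price per million tokens and the 8,000 tokens per RAG request are hypothetical numbers chosen only for illustration.

```python
def total_cost(tokens_per_request: int, requests: int, usd_per_million_tokens: float) -> float:
    """Input-token cost in USD for a task made of several identical requests."""
    return tokens_per_request * requests * usd_per_million_tokens / 1_000_000


PRICE = 3.0                  # hypothetical USD per million input tokens
CAG_TOKENS = 200_000         # context size used as the example above
RAG_TOKENS = 8_000           # hypothetical size of one retrieval-augmented request

cag_cost = total_cost(CAG_TOKENS, requests=1, usd_per_million_tokens=PRICE)
rag_cost = total_cost(RAG_TOKENS, requests=10, usd_per_million_tokens=PRICE)

# Number of RAG requests at which token spend alone matches one CAG request.
break_even_requests = CAG_TOKENS / RAG_TOKENS

print(cag_cost, rag_cost, break_even_requests)
```

With these made-up numbers, token spend alone still favors RAG until a task needs about 25 retrieval rounds. The argument for CAG therefore rests on the costs this sketch leaves out: engineer time spent on extra iterations and the price of a dependency the assistant never saw.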

Airbnb achieved a 75% automation rate using comprehensive context analysis for their React Testing Library migration. That's not because they had better retrieval. It's because they gave their tools complete understanding of what they were working with.

Why This Matters Now

Three things happened recently that make CAG practical:

- Context windows got huge. A million tokens used to be science fiction. Now it's table stakes for frontier models. You can fit substantial portions of enterprise codebases into a single request.

- Token prices collapsed. Competitive pressure drove costs down to the point where loading massive context windows is economically viable for regular development work.

- Models got better at handling long contexts. Early large language models would lose track of information buried in long prompts. Modern models hold on to it far more reliably across extended contexts.

These changes didn't just make CAG possible. They made it inevitable.

The Surprising Truth About Hallucinations

Here's something counterintuitive: giving AI models more context actually reduces hallucinations rather than increasing them.

When an AI doesn't have complete information, it fills in the gaps. It makes educated guesses about how your authentication system works based on patterns it learned during training. Some of those guesses are wrong.

When an AI has complete information, it doesn't need to guess. It can see exactly how your system works and generate code that fits your existing patterns.

This flies in the face of conventional wisdom that says more context leads to more confusion. But code isn't natural language. Code has structure, dependencies, and logic that AI models can follow precisely when they have the complete picture.

Real Systems, Real Results

Let's get concrete. You're migrating authentication from Rails to Node.js. Here's what each approach actually does:

RAG finds your AuthController and User model because they match "authentication" semantically. It generates a basic Node.js service that handles login and logout. But it misses the OAuth configurations buried in initializers, the complex role-based permissions scattered across multiple modules, and the audit logging that compliance requires.

You spend days fixing the gaps, tracking down missing pieces, and debugging integration issues that could have been avoided.

CAG loads your entire authentication subsystem upfront. It sees the AuthController, the User model, the OAuth configurations, the permission modules, the audit logging, the test suites, and the deployment configurations. It generates a Node.js service that accounts for all of these pieces.
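Loading a subsystem "upfront" amounts to assembling every selected file into one prompt under a token budget. The sketch below shows one way to do that. The file paths and contents are hypothetical placeholders, and the four-characters-per-token estimate is a rough heuristic, so a real system would count tokens with the model's own tokenizer.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic (about 4 characters per token); an assumption, not a real tokenizer.
    return max(1, len(text) // 4)


def build_context(files: dict[str, str], budget_tokens: int) -> tuple[str, list[str]]:
    """Concatenate files under path headers; return the prompt and any files that did not fit."""
    parts, skipped, used = [], [], 0
    for path in sorted(files):
        block = f"### {path}\n{files[path]}\n"
        cost = estimate_tokens(block)
        if used + cost > budget_tokens:
            skipped.append(path)  # Report the omission instead of dropping the file silently.
            continue
        parts.append(block)
        used += cost
    return "".join(parts), skipped


# Hypothetical authentication subsystem with placeholder contents.
SUBSYSTEM = {
    "app/controllers/auth_controller.rb": "class AuthController; end",
    "app/models/user.rb": "class User; end",
    "config/initializers/oauth.rb": "# OAuth provider settings",
    "lib/audit_log.rb": "module AuditLog; end",
}

context, skipped = build_context(SUBSYSTEM, budget_tokens=1_000)
print(skipped)
print(context)
```

Returning the skipped files is a deliberate choice: if the subsystem does not fit, the caller learns which pieces are missing and is not left with a prompt that only looks complete.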

The difference isn't just quality. It's whether your AI assistant actually understands the system it's working with.

When RAG Still Makes Sense

This doesn't mean RAG is dead. RAG works well for certain scenarios:

- When you're querying rapidly changing documentation that can't be preloaded.

- When you're building tools that work across many different codebases.

- When you're working with simple, isolated queries that don't require deep system understanding.

But for complex enterprise development, where understanding relationships between components matters more than finding individual pieces, CAG provides something RAG can't: genuine comprehension.

The Bigger Picture

This isn't really about retrieval versus caching. It's about how AI systems understand complex, interconnected information.

Most AI applications today work by breaking complex problems into simple pieces, processing those pieces separately, then trying to reassemble the results. This works when the pieces are independent. It fails when understanding requires seeing the whole system.

Code is like any complex system. You can't understand how a city works by looking at individual buildings. You need to see the roads, the utilities, the zoning patterns, and how they all connect.

The same logic applies beyond code: financial systems, supply chains, organizational structures, and any other domain where relationships between components matter more than the components themselves.

What Comes Next

The shift from RAG to CAG represents something bigger than a new technique for code generation. It's a move toward AI systems that can understand complex, interconnected domains the way humans do: by seeing the whole picture instead of assembling fragments.

This changes what's possible with AI assistance. Instead of tools that help you with isolated tasks, you get tools that understand your entire system and can reason about it holistically.

For enterprise development teams, this means AI that can actually help with the hardest problems: large-scale refactoring, architectural migrations, and system-wide optimizations that require understanding how everything connects.

The future of enterprise code generation isn't smarter search. It's systems that understand your code the way you do: completely, contextually, and all at once.

Ready to experience what comprehensive code understanding looks like? Augment Code has built the first production implementation of Cache-Augmented Generation for enterprise development teams. See how context changes everything.

Written by

Molisha Shah

GTM and Customer Champion