Enterprise engineering teams wrestle with an automation challenge that manual oversight simply cannot solve. Security vulnerabilities (51%) and data privacy compliance gaps (41%) now outrank budget constraints as the top roadblocks preventing teams from hitting 2025 delivery targets, according to RevealBI's latest developer survey. The root cause? Modern codebases have exploded in complexity. Context engines now index up to 500,000 files and process 200,000 tokens in a single reasoning pass, and codebases of that size overwhelm human review cycles and break file-level assistive tools.

The measurable payoff for delegating complete workflows to autonomous software agents is significant. Direct comparisons show autonomous tooling completing complex, multi-file tasks two to three times faster than assistive tools, while opening fully formed pull requests ready for review. These gains compound across repetitive maintenance tasks like dependency bumps, security patches, and large-scale refactors, reclaiming the thousands of hours that enterprises typically sink into manual coordination.

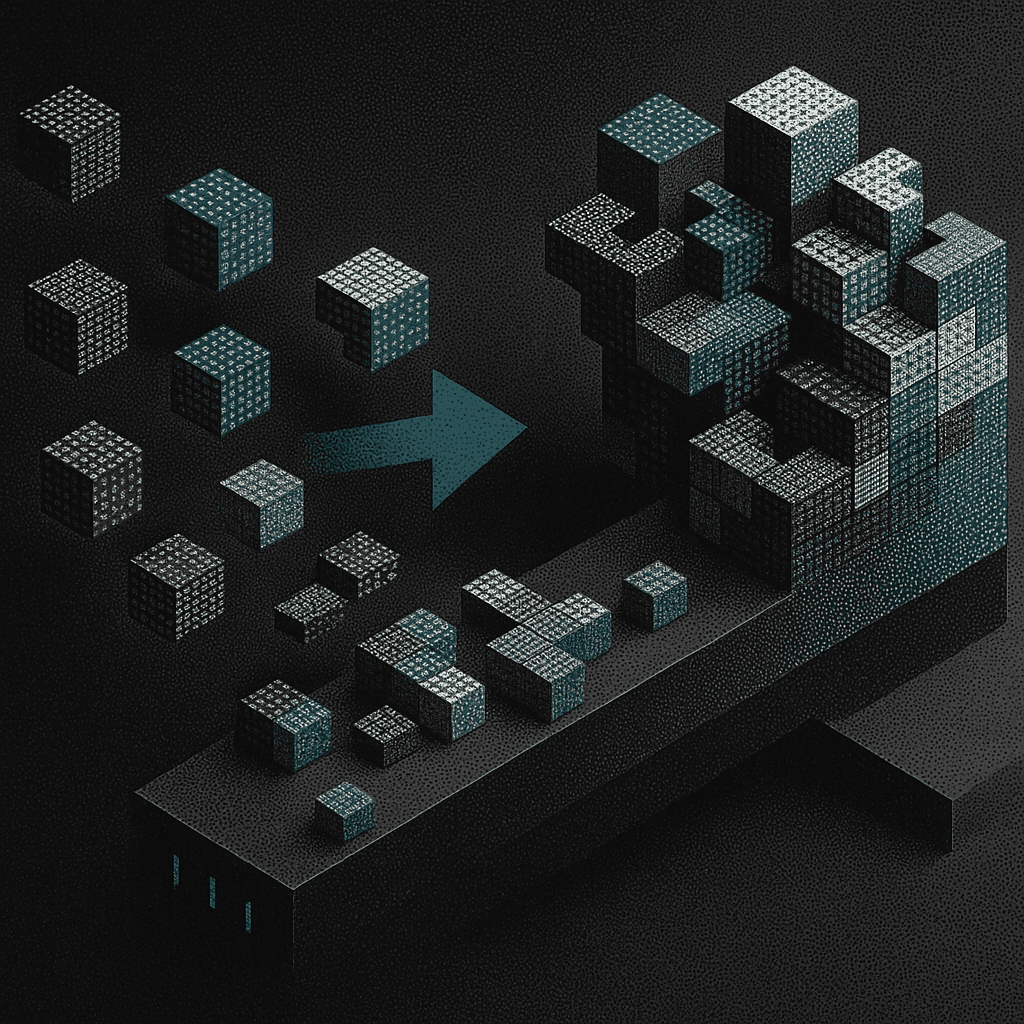

What Makes Autonomous Agents Different From Assistive Tools?

Autonomous agents execute complete workflows without human prompts. They ingest requirements, reason about cross-service dependencies, and implement changes end-to-end while engineers monitor outcomes rather than guide each individual step. Salesforce's agent research defines this clearly: autonomous systems "complete tasks without human prompts" while assistive agents "augment specific human actions."

Augment Code demonstrates this autonomy in practice. Its agents plan implementations, generate code, and open pull requests across entire codebases without requiring keyboard input after the initial job trigger. GitHub Copilot's inline completions, by contrast, operate reactively: they surface suggestions only after developers begin typing, and every code acceptance, file navigation, and commit requires manual approval.

This architectural difference fundamentally reshapes how work flows through engineering organizations.

The operational difference becomes critical at enterprise scale. Teams managing distributed monorepos, dozens of microservices, and regulated release pipelines discover that manual oversight bottlenecks compound as complexity grows.

How Do These Approaches Compare Across Critical Enterprise Factors?

Eight factors determine where autonomous agents deliver measurable value over assistive tooling. These criteria reflect enterprise engineering realities: context gaps in sprawling repositories, governance inconsistencies in regulated industries, and extended onboarding cycles for large teams.

1. Degree of Automation

Autonomous agents eliminate context switches by executing complete task workflows. Augment Code's live demonstrations show updates across multiple services with zero human intervention after job initialization. The agent analyzes tickets, generates code, opens pull requests, and tags reviewers in one uninterrupted flow.

Assistive tools require human decision-making at every step. Developers must accept suggestions, navigate between files, commit changes, and trigger deployments manually. This creates coordination overhead that scales linearly with repository count.

Winner: Autonomous platforms provide true hands-free execution that scales with compute rather than human resources.

2. Workflow Coverage

Enterprise development spans issue trackers, CI/CD pipelines, chat systems, and change management processes. Augment Code's SequentialWorkflow engine connects these systems natively, triggering jobs from Jira, generating branches, executing tests, updating documentation, and posting status updates to Slack without manual handoffs.
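To make the idea of chained handoffs concrete, here is a minimal Python sketch of a sequential pipeline in which each stage runs only if the previous one succeeded. It is not Augment Code's SequentialWorkflow API, which this article does not document. `JobContext`, `run_sequential`, and the stage functions are hypothetical stand-ins, and each stage only appends to a log where a real one would call a branch service, CI system, docs generator, or chat integration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class JobContext:
    """State passed from stage to stage (hypothetical structure)."""
    ticket_id: str
    branch: str = ""
    log: list[str] = field(default_factory=list)


# A stage receives the shared context and returns True on success.
Stage = Callable[[JobContext], bool]


def create_branch(ctx: JobContext) -> bool:
    # Hypothetical stand-in for creating a branch named after the triggering ticket.
    ctx.branch = f"auto/{ctx.ticket_id.lower()}"
    ctx.log.append(f"branch created: {ctx.branch}")
    return True


def run_tests(ctx: JobContext) -> bool:
    # Hypothetical stand-in for triggering CI; a real stage would return the CI result.
    ctx.log.append("tests passed")
    return True


def update_docs(ctx: JobContext) -> bool:
    # Hypothetical stand-in for regenerating documentation.
    ctx.log.append("docs updated")
    return True


def post_status(ctx: JobContext) -> bool:
    # Hypothetical stand-in for a chat notification (for example, to a Slack channel).
    ctx.log.append(f"status posted for {ctx.ticket_id}")
    return True


def run_sequential(ctx: JobContext, stages: list[Stage]) -> bool:
    """Run stages in order, stopping at the first failure so no later handoff fires."""
    for stage in stages:
        if not stage(ctx):
            ctx.log.append(f"halted at {stage.__name__}")
            return False
    return True


# Usage: a ticket (for example, from an issue-tracker webhook) kicks off the whole chain.
ctx = JobContext(ticket_id="PROJ-123")
succeeded = run_sequential(ctx, [create_branch, run_tests, update_docs, post_status])
```

Stopping at the first failed stage is the design choice that matters here. A failing test run never reaches the notification step, so downstream systems only hear about work that actually passed.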

Assistive tools operate primarily within IDEs. GitHub Copilot cannot kick off deployments, file follow-up tickets, or coordinate changes across multiple repositories. Engineers must build custom scripts to connect these workflow stages, often creating brittle integration points.

Winner: Autonomous systems provide comprehensive workflow coverage through native multi-system orchestration.

3. Context Understanding

Augment Code's context engine indexes nearly 500,000 files across repositories. For each query, it retrieves relevant subsets of code and can process up to 200,000 tokens, capturing architectural relationships, API contracts, and historical commit context that span entire codebases.

GitHub Copilot's inline completions have historically operated within 4,000- to 8,000-token windows, seeing only the active file and its immediate surrounding context. In large monorepos, this narrow scope leads to hallucinated imports, outdated API references, and code that compiles locally but breaks integration tests.
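The gap between the two window sizes can be illustrated with a simple token-budget packer. This sketch assumes that an upstream retriever has already scored code chunks for relevance. The scores, paths, and token counts below are made up. The packer greedily fills the budget with the highest-scoring chunks that fit. It is a generic technique shown for illustration, not a description of how Augment Code's context engine or Copilot actually selects context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    path: str
    relevance: float  # hypothetical score from some upstream retriever
    tokens: int       # size of the chunk after tokenization


def pack_context(chunks: list[Chunk], budget: int) -> tuple[list[Chunk], int]:
    """Greedily add the most relevant chunks that still fit in the token budget."""
    selected: list[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.relevance, reverse=True):
        if used + chunk.tokens <= budget:
            selected.append(chunk)
            used += chunk.tokens
    return selected, used


# Illustrative chunks for a change to a billing service (all values hypothetical).
chunks = [
    Chunk("billing/api.py", relevance=0.92, tokens=3_000),
    Chunk("billing/models.py", relevance=0.85, tokens=4_500),
    Chunk("shared/auth.py", relevance=0.40, tokens=2_000),
    Chunk("docs/README.md", relevance=0.10, tokens=1_500),
]

narrow, narrow_used = pack_context(chunks, budget=8_000)
wide, wide_used = pack_context(chunks, budget=200_000)
```

With an 8,000-token budget, `shared/auth.py` is dropped even though the billing code may depend on it. A model that never sees that file has to guess at its interface, which is one way hallucinated imports arise. The 200,000-token budget fits every chunk in this toy example.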

Winner: Autonomous tools equipped with high-capacity context engines deliver superior comprehension of complex, interconnected codebases.

4. Scalability

Salesforce research confirms that autonomous systems scale "with minimal marginal cost" while assistive tools scale "with human resources." Each new repository monitored by assistive plugins adds review cycles, approval workflows, and coordination overhead. Throughput increases linearly at best.

Autonomous agents process additional services in parallel once connected. Augment Code demonstrates simultaneous updates across 100,000+ files during version bumps without proportional increases in human oversight.
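One way to picture "processing additional services in parallel" is a fan-out. The same job is submitted for every connected repository, so adding a repository adds an item to the work queue rather than another human review cycle. In this sketch, `bump_dependency` and `fan_out` are hypothetical placeholders built on Python's standard `concurrent.futures` module, and the package name and version are made up.

```python
from concurrent.futures import ThreadPoolExecutor


def bump_dependency(repo: str, package: str, version: str) -> str:
    # Hypothetical placeholder for a real per-repository job:
    # clone, edit the manifest, run tests, and open a pull request.
    return f"{repo}: {package} -> {version}"


def fan_out(repos: list[str], package: str, version: str, max_workers: int = 8) -> dict[str, str]:
    """Run the same job across many repositories concurrently."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(lambda repo: bump_dependency(repo, package, version), repos)
        # pool.map yields results in input order, so zip pairs each repo with its result.
        return dict(zip(repos, results))


results = fan_out(["svc-a", "svc-b", "svc-c"], package="example-lib", version="2.0.0")
```

Threads suit this shape of work because each real job would spend most of its time waiting on I/O, such as git operations, CI runs, and HTTP APIs.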

Winner: Autonomous platforms scale elastically in both infrastructure and coordination dimensions.

5. Quality and Governance

Autonomous tooling requires robust governance controls because of its larger potential blast radius. Augment Code holds ISO/IEC 42001 certification and a SOC 2 Type II attestation, and it enforces auditable change logs, role-based approvals, and automated rollback mechanisms for every generated change.

Assistive tools delegate quality judgment to individual developers, offering lint rules but no systematic guardrails. Compliance evidence must be assembled manually across multiple tools and processes.

Winner: Certified autonomous platforms provide measurable quality assurance and governance automation that satisfies enterprise audit requirements.

6. Security and Compliance

Augment Code processes analysis through non-extractable APIs with customer-managed encryption keys, keeping source code within controlled security perimeters. Every generated pull request passes automated security scanning and dependency vulnerability checks before merge approval.
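A pre-merge gate of the kind described above can be sketched as a function that collects every reason a pull request may not merge. The check names, severity scale, required approver role, and thresholds here are hypothetical policy values chosen for illustration. They are not Augment Code's actual configuration.

```python
from dataclasses import dataclass, field

# Hypothetical policy values; real ones would come from your security and compliance teams.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}
REQUIRED_CHECKS = {"sast", "dependency-audit"}
REQUIRED_ROLES = {"code-owner"}
MAX_ALLOWED_SEVERITY = "medium"


@dataclass
class PullRequestReport:
    checks: dict[str, bool]  # check name -> passed?
    findings: list[str] = field(default_factory=list)  # severity of each open finding
    approver_roles: set[str] = field(default_factory=set)


def merge_blockers(report: PullRequestReport) -> list[str]:
    """Return every reason the PR may not merge; an empty list means the gate passes."""
    blockers = []
    for check in sorted(REQUIRED_CHECKS):
        if not report.checks.get(check, False):
            blockers.append(f"check not passing: {check}")
    limit = SEVERITY_RANK[MAX_ALLOWED_SEVERITY]
    too_severe = [s for s in report.findings if SEVERITY_RANK[s] > limit]
    if too_severe:
        blockers.append(f"{len(too_severe)} finding(s) above {MAX_ALLOWED_SEVERITY}")
    for role in sorted(REQUIRED_ROLES - report.approver_roles):
        blockers.append(f"missing approval: {role}")
    return blockers


clean = PullRequestReport(
    checks={"sast": True, "dependency-audit": True},
    findings=["low"],
    approver_roles={"code-owner"},
)
blocked = PullRequestReport(checks={"sast": True}, findings=["high", "critical", "low"])
```

The function returns the full list of blockers instead of stopping at the first one. That gives reviewers and auditors a complete record of why a change was held back.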

GitHub Copilot's cloud-based inference sends code snippets to external servers, raising data residency concerns that regulatory frameworks in healthcare, finance, and government sectors cannot accommodate without extensive legal review.

Winner: Enterprise-designed autonomous platforms deliver superior data sovereignty and continuous policy enforcement.

7. Developer Productivity and Onboarding

Augment Code reports task completion speeds 5-10x faster on multi-file changes and dramatically reduces onboarding time. New hires query the context engine for architectural rationale instead of manually exploring unfamiliar repository structures.

GitHub Copilot's 55% speed improvement applies primarily to isolated function creation but diminishes when work spans multiple packages or services. New developers still navigate complex codebases manually, prolonging time-to-first-meaningful-contribution.

Winner: Both approaches help, but autonomous systems yield larger team-level productivity gains, especially during legacy system onboarding.

8. Technical Debt Management

Large-scale refactoring typically consumes entire quarters because touching thousands of files risks introducing regressions. Autonomous agents can identify deprecated patterns, generate consistent replacements, and coordinate pull requests across 100,000+ files, transforming quarter-long initiatives into managed background processes.
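The identify-replace-coordinate loop described above can be sketched as a small codemod. This example assumes a hypothetical deprecated call, `legacy_client.fetch(`, that should become `http_client.get(` everywhere. The sketch rewrites an in-memory map of files, then splits the changed files into review-sized batches, one pull request per batch.

```python
import re

# Hypothetical deprecated call and its replacement.
DEPRECATED_CALL = re.compile(r"\blegacy_client\.fetch\(")
REPLACEMENT = "http_client.get("


def sweep(files: dict[str, str]) -> dict[str, tuple[str, int]]:
    """Rewrite every deprecated call; return {path: (new_source, replacements)} for changed files."""
    changed = {}
    for path, source in files.items():
        new_source, count = DEPRECATED_CALL.subn(REPLACEMENT, source)
        if count:
            changed[path] = (new_source, count)
    return changed


def batch_for_review(paths, batch_size: int) -> list[list[str]]:
    """Split changed files into review-sized groups, one pull request per group."""
    ordered = sorted(paths)
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


files = {
    "svc_a/client.py": "data = legacy_client.fetch(url)\n",
    "svc_b/sync.py": "a = legacy_client.fetch(x)\nb = legacy_client.fetch(y)\n",
    "svc_c/util.py": "print('nothing deprecated here')\n",
}
changes = sweep(files)
batches = batch_for_review(changes.keys(), batch_size=1)
```

A regular expression keeps the sketch short, but it can also match text inside strings and comments. Production codemods usually operate on a syntax tree instead so that every replacement is structurally consistent. For Python, libraries such as LibCST exist for this purpose.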

Assistive tools handle individual files, requiring developers to manually orchestrate refactoring sweeps, track progress across repositories, and resolve merge conflicts. Strategic technical debt reduction remains largely manual.

Winner: Autonomous platforms systematize large-scale debt reduction beyond the practical reach of assistive tooling.

What's the ROI for Enterprise Teams?

Autonomous agents deliver measurable returns when repository complexity and team coordination requirements outpace manual oversight capabilities. Organizations crossing the 50-engineer threshold find that context sharing becomes the primary constraint on release velocity, not individual coding speed.

Onboarding Impact: Augment's 200,000-token context engine lets new hires query an entire codebase with a single prompt, from service boundaries to commit history. This eliminates the typical multi-day architecture exploration period and accelerates time-to-first-pull-request for every team member.

Compliance Cost Reduction: Augment ships with SOC 2 Type II and ISO/IEC 42001 controls built in, removing the legal review cycles, proxy configurations, and security workarounds that enterprises layer onto consumer-grade tools. Consolidating context search, code generation, and security verification in one platform reduces both vendor management complexity and integration overhead.

Scaling Economics: Workflow automation reduces coordination overhead without a proportional increase in headcount. Teams managing hundreds of microservices, facing aggressive compliance deadlines, or executing rapid hiring plans see demonstrable ROI improvements across the entire engineering organization.

Smaller teams working on greenfield codebases with clean service boundaries often find assistive autocomplete sufficient. The economic case for autonomy strengthens with architectural complexity and regulatory requirements.

When Should You Choose Autonomous vs. Assistive Approaches?

Three factors determine the optimal automation level, based on patterns from enterprise deployments:

Codebase Complexity Drives the Decision

Legacy monorepos with cross-service dependencies break assistive tools operating on limited context windows. When refactors span dozens of services and thousands of files, only autonomous agents with 200,000-token context windows can reason across entire dependency graphs and generate working implementations.

Microservices with well-defined boundaries and comprehensive test suites work effectively with IDE autocomplete and targeted linting. Clean architectures reduce the context requirements that favor autonomous systems.

Team Structure Determines Adoption Velocity

Junior-heavy teams benefit immediately from assistive suggestions with senior review gates, reducing risk while building development skills. Senior-heavy teams hit bottlenecks when every autonomous pull request requires detailed manual review.

The tipping point occurs when code review overhead exceeds the time savings from automation. Teams with established code quality processes can safely delegate more decisions to autonomous systems.
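The tipping point can be written as a per-task-type calculation. Delegate a task type when the weekly time it saves exceeds the weekly review time it creates, that is, when n * (t_manual - t_review) > 0, where n is the number of tasks per week. The task types, volumes, and minutes below are hypothetical numbers, not measurements.

```python
# Hypothetical weekly volumes and per-task minutes for three task types:
# (tasks per week, minutes to do it by hand, minutes to review the agent's PR)
TASKS = {
    "dependency bump": (40, 30, 5),
    "security patch": (10, 90, 30),
    "cross-service refactor": (2, 240, 300),
}


def net_weekly_minutes(tasks_per_week: int, manual_minutes: float, review_minutes: float) -> float:
    """Time saved by delegating, minus the review time the delegation creates."""
    return tasks_per_week * (manual_minutes - review_minutes)


net = {name: net_weekly_minutes(*params) for name, params in TASKS.items()}
delegate = [name for name, minutes in net.items() if minutes > 0]
```

In this made-up example, the cross-service refactor is the task type to keep manual. Reviewing the agent's output takes longer than writing the change by hand, so delegating it loses two hours a week.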

Scale Breaks Manual Coordination

Once engineering headcount exceeds 50 developers, context sharing becomes the limiting factor for development velocity. Manual handoffs between teams, outdated documentation, and tribal knowledge create deployment delays that compound as team size grows.

Autonomous systems that persist context across development cycles maintain velocity regardless of organizational complexity.

Implementation Strategy

Deploy assistive tools within development environments first. Measure accuracy rates and developer adoption patterns. Graduate high-confidence workflows like dependency updates, security patches, and routine refactors to autonomous execution based on success criteria.
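"Graduating based on success criteria" can be made explicit with a small promotion rule per workflow. The thresholds are assumptions for illustration, and each team would set its own: at least 20 observed runs, at least 90% accepted without significant rework, and at most 2% reverted. The `WorkflowStats` fields are also hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WorkflowStats:
    name: str
    runs: int      # times the workflow was attempted during the measurement period
    accepted: int  # attempts merged without significant rework
    reverted: int  # merged changes later rolled back


def ready_to_graduate(
    stats: WorkflowStats,
    min_runs: int = 20,
    min_acceptance: float = 0.90,
    max_revert_rate: float = 0.02,
) -> bool:
    """Promote a workflow only after enough runs and a consistently good track record."""
    if stats.runs < min_runs:
        return False  # too little evidence either way
    acceptance = stats.accepted / stats.runs
    revert_rate = stats.reverted / stats.runs
    return acceptance >= min_acceptance and revert_rate <= max_revert_rate


# Hypothetical measurement history.
history = [
    WorkflowStats("dependency updates", runs=50, accepted=48, reverted=0),
    WorkflowStats("routine refactors", runs=30, accepted=24, reverted=1),
    WorkflowStats("security patches", runs=8, accepted=8, reverted=0),
]
graduated = [s.name for s in history if ready_to_graduate(s)]
```

The minimum run count stops a workflow with a short perfect streak, like the security patches above, from graduating on too little evidence.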

Regulated environments require additional filtering. Choose autonomous platforms with established compliance certifications that satisfy audit requirements without custom legal review.

Complete Workflow Automation Delivers Measurable Enterprise Value

Comparing autonomous and assistive approaches across eight technical criteria showed that autonomous workflow automation outperformed assistive tooling in seven areas: end-to-end execution, cross-repository coverage, large-context processing, elastic scalability, certified quality controls, enterprise security, and systematic technical debt reduction.

Assistive agents maintained advantages only in immediate adoption, where developers can integrate suggestions without modifying existing build pipelines or approval processes.

For engineering organizations running complex monorepos, strict governance requirements, and aggressive release schedules, the evidence favors autonomous systems. Larger context windows, persistent architectural memory, and ISO-compliant processes correlate directly with measurable improvements: higher commit velocity, fewer production regressions, and automated audit trail generation.

The recommended path is a controlled rollout: deploy autonomous agents on repository subsets, measure throughput changes, track defect rates, and monitor compliance overhead before expanding organization-wide.
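The expansion decision can be framed as a comparison between a baseline period and the pilot period on the three signals named above. The metric names, the sample values, and the 10% tolerance on compliance hours are hypothetical. The sketch also assumes that every baseline value is nonzero.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent (before must be nonzero)."""
    return (after - before) / before * 100


def should_expand(
    baseline: dict[str, float],
    pilot: dict[str, float],
    max_compliance_increase_pct: float = 10.0,
) -> bool:
    """Expand only if throughput rose, defects did not, and compliance work stayed within tolerance."""
    throughput = pct_change(baseline["prs_per_week"], pilot["prs_per_week"])
    defects = pct_change(baseline["defects_per_100_prs"], pilot["defects_per_100_prs"])
    compliance = pct_change(baseline["compliance_hours_per_week"], pilot["compliance_hours_per_week"])
    return throughput > 0 and defects <= 0 and compliance <= max_compliance_increase_pct


# Hypothetical metrics for the repository subset before and during the pilot.
baseline = {"prs_per_week": 40, "defects_per_100_prs": 5.0, "compliance_hours_per_week": 12.0}
pilot = {"prs_per_week": 58, "defects_per_100_prs": 4.0, "compliance_hours_per_week": 12.5}
```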

Ready to see how autonomous workflow automation can accelerate your team's development velocity? Experience Augment Code's context-aware agents and end-to-end automation capabilities. Start with a pilot deployment to measure the impact on your most complex, multi-repository workflows.

Written by

Molisha Shah

GTM and Customer Champion