TL;DR

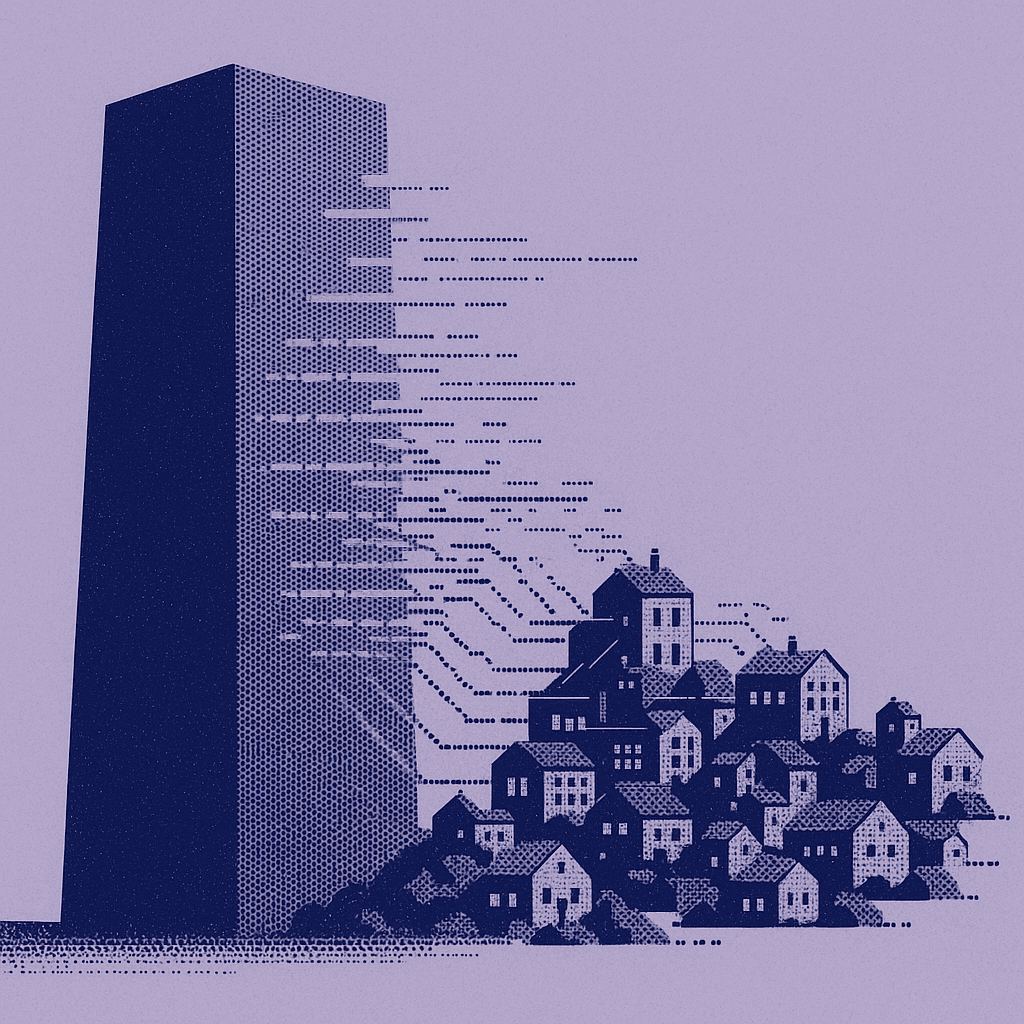

Enterprise monolith decomposition fails in 60% of attempts because manual dependency analysis cannot scale to codebases exceeding 500K lines across distributed teams. This guide presents 15 production-validated AI acceleration patterns, including Graph Neural Networks for boundary detection and automated risk assessment, that reduce migration timelines by 40-60% across Fortune 500 implementations.

Enterprise implementations of AI-assisted migration approaches over the past two years reveal patterns that dramatically accelerate decomposition while preserving production stability. These implementations consistently demonstrate that the critical breakthrough occurs when teams treat migration not as a technical problem, but as a knowledge extraction challenge requiring AI-powered pattern recognition.

Enterprise teams consistently face this challenge: legacy monoliths with circular dependencies, knowledge distributed across multiple time zones, and constant pressure to move faster without breaking production. A Senior Platform Engineer at a Fortune 500 financial services company, responsible for a 2.3M-line Java monolith with 847 microservice migration targets, described the process as complex and risky, likening it to digital archaeology in which careful excavation is required to avoid destabilizing the system.

The problem isn't monolith complexity. It's the information asymmetry between what needs to be decomposed and what AI agents can actually analyze at enterprise scale. Traditional approaches fail for exactly this reason: they rely on manual analysis where the real task is knowledge extraction that calls for AI-powered pattern recognition.

Production-ready AI migration tooling remains limited, creating a significant market gap that requires hybrid approaches combining emerging AI capabilities with established migration patterns.

These 15 tactics deliver measurable migration acceleration while protecting production stability.

1. AI-Powered Domain Modeling & Service Boundary Detection

Large-context AI agents equipped with enterprise-scale analysis capabilities scan monoliths to identify optimal service boundaries using approaches like Graph Neural Networks combined with Domain-Driven Design principles.

Technical approach

Variational autoencoder GNN (VAE-GNN) models take the dependency graph produced by static code analysis and assign each class a probability of belonging to each candidate microservice. This addresses a gap in traditional approaches, which rely on manual domain expertise.

Example configuration approach
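
A minimal sketch of graph-based boundary detection on a toy class dependency graph. It is not a VAE-GNN: as a stand-in it uses plain connected components after excluding high fan-in utility classes, which also illustrates the utility-class failure mode listed below. The names `propose_boundaries` and `max_fan_in` are hypothetical.

```python
from collections import defaultdict

def propose_boundaries(deps, max_fan_in=3):
    """Group classes into candidate services.

    deps maps each class to the set of classes it depends on.
    Classes with more than max_fan_in inbound edges are treated as shared
    utilities and excluded before clustering.
    """
    fan_in = defaultdict(int)
    for src, targets in deps.items():
        for dst in targets:
            fan_in[dst] += 1
    nodes = set(deps) | set(fan_in)
    utilities = {n for n in nodes if fan_in[n] > max_fan_in}

    # Undirected adjacency over the remaining classes.
    adj = defaultdict(set)
    for src, targets in deps.items():
        for dst in targets:
            if src not in utilities and dst not in utilities:
                adj[src].add(dst)
                adj[dst].add(src)

    # Each connected component becomes one candidate service.
    clusters, seen = [], set()
    for start in sorted(nodes - utilities):
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(adj[node] - seen)
        clusters.append(component)
    return clusters, utilities

deps = {
    "OrderService": {"OrderRepo", "Logger"},
    "OrderRepo": {"Logger"},
    "BillingService": {"InvoiceRepo", "Logger"},
    "InvoiceRepo": {"Logger"},
}
clusters, utilities = propose_boundaries(deps)
```

Here `Logger` has four inbound edges and is set aside as a shared utility, so it does not glue the order and billing classes into one cluster. A real model would output probabilities per class instead of hard components.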

Resource requirements

Appropriate compute resources for enterprise analysis

Common failure modes

- False boundaries on utility classes: Shared utilities get incorrectly flagged as separate domains

- Over-clustering of similar entities: Related business objects split across multiple services

- Confidence degradation with legacy code: Pre-2010 codebases without clear separation patterns typically show reduced accuracy vs. modern architectures

AI-powered domain modeling tools show promise for automated boundary detection, with several emerging solutions focusing on dependency analysis and clustering approaches.

2. Automated Codebase Dependency Mapping

Graph-based dependency analysis generates interactive call graphs that feed directly into Strangler Fig, Parallel Run, and Branch-by-Abstraction migration patterns, solving the prerequisite inventory challenge.

Teams typically spend a significant share of migration planning time manually tracing dependencies. Automated analysis reduces this to hours and, when static analysis is paired with runtime tracing, captures dependencies that static analysis alone cannot see.

Example implementation approach
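
A minimal sketch of static dependency extraction, assuming Python sources and using only the standard-library `ast` module. A Java monolith would need a Java parser instead; the function name `build_import_graph` is hypothetical.

```python
import ast
from pathlib import Path

def build_import_graph(root):
    """Map each module under root to the set of modules it imports."""
    graph = {}
    root = Path(root)
    for path in root.rglob("*.py"):
        # Turn "pkg/mod.py" into the dotted name "pkg.mod".
        module = ".".join(path.relative_to(root).with_suffix("").parts)
        tree = ast.parse(path.read_text(encoding="utf-8"))
        imports = set()
        for node in ast.walk(tree):
            if isinstance(node, ast.Import):
                imports.update(alias.name for alias in node.names)
            elif isinstance(node, ast.ImportFrom) and node.module:
                # Relative imports are recorded by their written name.
                imports.add(node.module)
        graph[module] = imports
    return graph
```

The resulting dictionary is a plain adjacency map, so it can be fed into whatever graph tooling the team already uses. It only sees import statements; dynamic imports and reflection are not captured.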

Risk assessment integration

- Critical path identification: Modules with high inbound dependencies flagged for careful migration planning

- Circular dependency detection: Automated identification of problematic coupling requiring refactoring before decomposition
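
Both checks above can be sketched over a simple adjacency map (module name to the set of modules it depends on). This is an illustrative sketch; `inbound_counts` and `find_cycle` are hypothetical names, and the recursive search would need an iterative rewrite for very deep graphs.

```python
from collections import Counter

def inbound_counts(graph):
    """Count how many modules depend on each module."""
    counts = Counter()
    for targets in graph.values():
        counts.update(targets)
    return counts

def find_cycle(graph):
    """Return one dependency cycle as a list of modules, or None."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on current path, finished
    color = {node: WHITE for node in graph}
    path = []

    def visit(node):
        color[node] = GREY
        path.append(node)
        for nxt in sorted(graph.get(node, ())):
            state = color.get(nxt, WHITE)
            if state == GREY:
                # Back edge: nxt is already on the current path.
                return path[path.index(nxt):] + [nxt]
            if state == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in sorted(graph):
        if color[node] == WHITE:
            found = visit(node)
            if found:
                return found
    return None

graph = {"a": {"b"}, "b": {"c"}, "c": {"a"}, "d": {"a"}}
cycle = find_cycle(graph)
hot = inbound_counts(graph).most_common(1)
```

Modules at the top of `inbound_counts` are the candidates for careful migration planning, and any cycle returned by `find_cycle` marks coupling to refactor before decomposition.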

3. Agent-Led Strangler Fig Candidate Extraction

AI-assisted tools can help identify low-risk Strangler Fig entry points by analyzing API endpoints and code structure, and can scaffold the proxy layers needed for a gradual transition. Human oversight and validation remain essential to maintain production stability.

The Strangler Fig pattern advocates for gradual transition, but manual identification of safe entry points requires weeks of analysis. AI acceleration reduces this to hours through automated risk scoring.

Sample proxy implementation pattern
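
A minimal sketch of the routing decision at the heart of a Strangler Fig proxy: requests for migrated path prefixes go to the new service, and everything else still goes to the monolith. The URLs, prefixes, and the name `resolve_upstream` are hypothetical.

```python
MONOLITH_URL = "http://monolith.internal"  # hypothetical upstream
MIGRATED_PREFIXES = {
    "/api/invoices": "http://billing.internal",  # hypothetical new service
}

def resolve_upstream(path, migrated=MIGRATED_PREFIXES, default=MONOLITH_URL):
    """Pick the upstream for a request path using longest-prefix match."""
    best = ""
    for prefix in migrated:
        # Match only on a path-segment boundary, so "/api/invoicesX"
        # does not match the "/api/invoices" prefix.
        on_boundary = path == prefix or path.startswith(prefix + "/")
        if on_boundary and len(prefix) > len(best):
            best = prefix
    return migrated[best] if best else default
```

Keeping the routing table as data means an entry point can be moved back to the monolith by deleting one key, which is the rollback path the pattern depends on.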

Critical failure modes

- State synchronization gaps: Shared database state between old and new services creates consistency issues

- Transaction boundary violations: Business transactions spanning multiple services require saga pattern implementation

4. Intelligent Data Access Pattern Analysis

AI can spot CRUD hot spots and shared tables, then suggest sharding strategies or change data capture (CDC) patterns to address the database decomposition and integrity challenges identified in migration planning.

Data migration represents the highest-risk component of monolith decomposition. AI analysis reduces planning time from weeks to days while identifying potential consistency violations before they impact production.

Sample schema decomposition pattern
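
Before a schema can be split, the tables shared across candidate services have to be found. The sketch below assumes access records have already been extracted, for example from query logs or ORM mappings; the tuple layout and the name `shared_tables` are hypothetical.

```python
from collections import defaultdict

def shared_tables(access_log):
    """Return tables touched by more than one candidate service.

    access_log is an iterable of (service, table, operation) tuples.
    """
    users = defaultdict(set)
    writers = defaultdict(set)
    for service, table, operation in access_log:
        users[table].add(service)
        if operation.upper() in {"INSERT", "UPDATE", "DELETE"}:
            writers[table].add(service)
    return {
        table: {"services": sorted(svcs), "writers": sorted(writers[table])}
        for table, svcs in users.items()
        if len(svcs) > 1
    }

log = [
    ("orders", "orders", "INSERT"),
    ("billing", "orders", "SELECT"),
    ("billing", "invoices", "INSERT"),
]
report = shared_tables(log)
```

A shared table with a single writer is usually the easier case, since the other services can read through an API or a CDC feed. Tables with several writers are where transaction boundaries need attention.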

Pattern analysis capabilities

- CRUD hot spot identification: High-traffic tables flagged for read replica strategies

- Cross-service transaction detection: Business workflows spanning multiple service boundaries mapped for saga pattern implementation

5. Automated Test Coverage Gap Finder

Comprehensive testing strategies require identifying critical paths without coverage. AI agents scan unit, integration, and E2E tests, flagging high-risk code paths that lack validation before decomposition begins.

Example configuration approach

Risk prioritization criteria

- High-complexity, low-coverage paths: Code with cyclomatic complexity >10 and <50% test coverage

- Frequent change patterns: Modules modified in >20% of recent commits without corresponding test updates
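
The two criteria above can be expressed as a simple filter. This is a sketch under assumptions: the input statistics are taken as already computed by existing coverage and version-control tooling, and "without corresponding test updates" is interpreted as at least one commit that touched the module without touching tests. `ModuleStats` and `high_risk` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class ModuleStats:
    name: str
    cyclomatic_complexity: int
    coverage: float          # fraction of lines covered, 0.0 to 1.0
    commits_touching: int    # recent commits that modified the module
    commits_with_tests: int  # of those, commits that also changed tests

def high_risk(modules, total_recent_commits):
    """Return names of modules matching either prioritization criterion."""
    flagged = []
    for m in modules:
        complex_untested = m.cyclomatic_complexity > 10 and m.coverage < 0.5
        churn = m.commits_touching / total_recent_commits if total_recent_commits else 0.0
        churn_untested = churn > 0.2 and m.commits_with_tests < m.commits_touching
        if complex_untested or churn_untested:
            flagged.append(m.name)
    return flagged

modules = [
    ModuleStats("pricing", 14, 0.30, 2, 2),
    ModuleStats("auth", 4, 0.90, 30, 10),
    ModuleStats("util", 3, 0.80, 1, 1),
]
flagged = high_risk(modules, total_recent_commits=100)
```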

6. Contract Testing Auto-Generation

AI generates Pact or Spring Cloud Contract tests from API definitions, ensuring service compatibility as boundaries shift during decomposition.

Manual contract creation represents weeks of engineering effort per service boundary. AI generation reduces this to minutes while maintaining coverage standards.

Example contract generation
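
A minimal sketch of turning endpoint descriptions into a Pact-style contract document. The output follows the general shape of a Pact v2 contract file (consumer, provider, interactions), but it is hand-built here and should be checked against the Pact specification before use. The input format and the name `pact_from_endpoints` are hypothetical.

```python
import json

def pact_from_endpoints(consumer, provider, endpoints):
    """Build a Pact-style contract dict from simple endpoint descriptions."""
    interactions = []
    for ep in endpoints:
        interactions.append({
            "description": f"{ep['method']} {ep['path']}",
            "request": {"method": ep["method"], "path": ep["path"]},
            "response": {
                "status": ep.get("status", 200),
                "headers": {"Content-Type": "application/json"},
                "body": ep.get("example_body", {}),
            },
        })
    return {
        "consumer": {"name": consumer},
        "provider": {"name": provider},
        "interactions": interactions,
        "metadata": {"pactSpecification": {"version": "2.0.0"}},
    }

contract = pact_from_endpoints(
    "checkout-ui",
    "billing-service",
    [{"method": "GET", "path": "/invoices/42", "example_body": {"id": 42}}],
)
contract_json = json.dumps(contract, indent=2)
```

Generated contracts still need review: the example bodies encode what the consumer actually relies on, and an AI-generated guess can be stricter or looser than the real dependency.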

7. Parallel Run Orchestration & Diff Analysis

AI coordinates parallel execution of old monolith logic versus new microservice implementations, comparing outputs in real-time to detect behavioral regressions invisible to traditional testing.

Sample parallel run configuration
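
The comparison step of a parallel run can be sketched as a recursive diff over two JSON-like responses, one from the monolith and one from the new service. The function name `diff_responses` and the sample payloads are hypothetical.

```python
def diff_responses(old, new, prefix=""):
    """Compare two JSON-like dicts and list structural and value differences."""
    diffs = []
    for key in sorted(set(old) | set(new)):
        path = f"{prefix}.{key}" if prefix else str(key)
        if key not in new:
            diffs.append(("removed", path))
        elif key not in old:
            diffs.append(("added", path))
        elif isinstance(old[key], dict) and isinstance(new[key], dict):
            # Recurse into nested objects so paths stay precise.
            diffs.extend(diff_responses(old[key], new[key], path))
        elif old[key] != new[key]:
            diffs.append(("changed", path))
    return diffs

monolith = {"id": 1, "total": 10, "meta": {"v": 1}}
service = {"id": 1, "total": 12, "meta": {"v": 1, "src": "x"}, "currency": "USD"}
diffs = diff_responses(monolith, service)
```

In practice, fields that are expected to differ (timestamps, request IDs) would be filtered out before comparison so that the diff stream only carries behavioral changes.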

Failure detection patterns

- Response structure divergence: Field additions/removals between implementations

- Timing-dependent logic: Race conditions exposed only under production load

8. Smart API Gateway Configuration

AI auto-configures routing rules, rate limits, and circuit breakers for new microservices based on historical monolith traffic patterns, preventing common production failures.

Example gateway configuration
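
One way to derive a rate limit from historical monolith traffic is to take a high percentile of observed per-second request counts and add headroom. The sketch below assumes the samples are already available; the defaults (99th percentile, 1.5x headroom) and the name `suggest_rate_limit` are illustrative assumptions, not recommended values.

```python
import math

def suggest_rate_limit(requests_per_second, percentile=99, headroom=1.5):
    """Suggest a per-route limit from observed per-second request counts."""
    if not requests_per_second:
        raise ValueError("no traffic samples")
    ordered = sorted(requests_per_second)
    # Nearest-rank percentile over the sorted samples.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return math.ceil(ordered[rank - 1] * headroom)

limit = suggest_rate_limit(list(range(1, 101)))
```

The suggested value is a starting point for the gateway configuration; circuit-breaker thresholds can be derived the same way from historical error rates.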

9. Canary Deployment Intelligence

AI determines optimal canary rollout speeds by analyzing error rates, latency changes, and business metrics correlation, automatically progressing or rolling back deployments.

Example canary configuration
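
A minimal sketch of the progress-or-rollback decision for a single evaluation window. The thresholds, the metric names, and the function `canary_decision` are hypothetical; a production controller would also weigh business metrics and look across several windows.

```python
def canary_decision(baseline, canary, max_error_delta=0.01,
                    max_latency_ratio=1.2, min_requests=100):
    """Return "rollback", "hold", or "promote" for one evaluation window.

    baseline and canary are dicts with "error_rate" (0.0 to 1.0),
    "p95_latency_ms", and "requests" keys.
    """
    if canary["requests"] < min_requests:
        return "hold"  # not enough traffic to judge either way
    error_delta = canary["error_rate"] - baseline["error_rate"]
    latency_ratio = canary["p95_latency_ms"] / baseline["p95_latency_ms"]
    if error_delta > max_error_delta or latency_ratio > max_latency_ratio:
        return "rollback"
    return "promote"

baseline = {"error_rate": 0.010, "p95_latency_ms": 200, "requests": 5000}
healthy = {"error_rate": 0.012, "p95_latency_ms": 210, "requests": 500}
decision = canary_decision(baseline, healthy)
```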

10. Performance Regression Detection

AI establishes baseline performance profiles from monolith behavior, comparing new microservice performance to flag potential regressions before production deployment.

Implementation approach
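
A minimal sketch of baseline comparison, assuming latency samples in milliseconds from the monolith and from the new microservice. It compares 95th percentiles with a tolerance; the 10% default and the name `detect_regression` are assumptions for illustration.

```python
import statistics

def detect_regression(baseline_ms, candidate_ms, tolerance=0.10):
    """Flag a regression when candidate p95 exceeds baseline p95 by more than tolerance."""
    def p95(samples):
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        return statistics.quantiles(samples, n=20, method="inclusive")[-1]

    base, cand = p95(baseline_ms), p95(candidate_ms)
    return {
        "baseline_p95": base,
        "candidate_p95": cand,
        "regressed": cand > base * (1 + tolerance),
    }
```

Comparing percentiles rather than means keeps a handful of slow requests from being averaged away, which matters when the new service adds a network hop.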

11. CI/CD Pipeline Auto-Generation

AI scaffolds complete CI/CD pipelines for new microservices, including build, test, security scanning, and deployment stages tailored to technology stack and organizational requirements.

Example pipeline generation
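
A minimal sketch of stack-aware pipeline scaffolding that emits an ordered list of stages, which a real generator would then render into the CI system's own format. The stack table, the `deploy.sh` script, the default scan command, and the name `scaffold_pipeline` are all illustrative assumptions.

```python
# Commands are illustrative defaults; substitute your organization's own.
STACK_COMMANDS = {
    "java-maven": {"build": "mvn -B package -DskipTests", "test": "mvn -B verify"},
    "python": {"build": "python -m build", "test": "python -m pytest"},
}

def scaffold_pipeline(service, stack, scan_command="trivy image"):
    """Return an ordered list of pipeline stages for one new service."""
    if stack not in STACK_COMMANDS:
        raise ValueError(f"unsupported stack: {stack}")
    commands = STACK_COMMANDS[stack]
    image = f"{service}:candidate"
    return [
        {"stage": "build", "run": commands["build"]},
        {"stage": "test", "run": commands["test"]},
        {"stage": "package", "run": f"docker build -t {image} ."},
        {"stage": "security-scan", "run": f"{scan_command} {image}"},
        {"stage": "deploy", "run": f"./deploy.sh {service}"},  # hypothetical script
    ]

stages = scaffold_pipeline("billing", "python")
```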

12. Smart Observability & Alert Baseline Generation

AI agents auto-instrument new microservices with distributed tracing, establish SLO baselines from historical monolith performance data, and configure intelligent alerting that suppresses noise while highlighting real issues.

Example observability configuration

Alert suppression intelligence

- Correlated failure filtering: Single upstream failure doesn't trigger alerts for all downstream services

- Deployment window muting: Automated alert suppression during scheduled maintenance
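
Correlated failure filtering can be sketched as keeping only the failing services that have no failing dependency of their own. This sketch looks at direct dependencies only, and the names `root_cause_alerts` and `calls` are hypothetical.

```python
def root_cause_alerts(failing, calls):
    """Keep only failing services that have no failing direct dependency.

    failing is a set of service names currently failing.
    calls maps each service to the set of services it depends on.
    """
    roots = set()
    for service in failing:
        if not (calls.get(service, set()) & failing):
            roots.add(service)
    # A cycle of failing services has no root; fall back to alerting on all.
    return roots or set(failing)

calls = {"checkout": {"billing"}, "billing": {"ledger-db"}, "search": set()}
alerts = root_cause_alerts({"checkout", "billing", "ledger-db"}, calls)
```

With the sample data, only the `ledger-db` failure pages someone; the `checkout` and `billing` failures are treated as consequences.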

13. Knowledge-Driven Developer Onboarding

Chat agents answer repository questions and supply architecture diagrams on demand, accelerating developer onboarding from months to weeks while reducing senior engineer mentorship overhead.

Example development assistant implementation
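
The retrieval step behind a repository chat agent can be illustrated with a deliberately simple keyword ranker. This is a stand-in for the embedding-based search a real assistant would likely use; `rank_files` and the sample repository contents are hypothetical.

```python
import re
from collections import Counter

def rank_files(question, files, top_k=3):
    """Rank files by how often they mention the question's terms.

    files maps a path to its text content.
    """
    terms = set(re.findall(r"[a-z_]{3,}", question.lower()))
    scores = []
    for path, text in files.items():
        words = Counter(re.findall(r"[a-z_]{3,}", text.lower()))
        score = sum(words[t] for t in terms)
        if score:
            scores.append((score, path))
    # Highest score first; ties broken alphabetically for stable output.
    scores.sort(key=lambda item: (-item[0], item[1]))
    return [path for _, path in scores[:top_k]]

repo = {
    "billing/invoice.py": "class Invoice: def total(self): invoice total tax",
    "auth/login.py": "def login(user): pass",
}
hits = rank_files("Where is the invoice total computed?", repo)
```

The ranked files would then be passed to the model as context for answering the question.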

Onboarding acceleration benefits

- Code comprehension time: Modest improvement with AI onboarding compared to manual processes

- Senior engineer mentorship reduction: Significant decrease in architectural questions requiring senior intervention

14. Continuous Migration Progress Dashboards

AI agents aggregate pull request velocity, test pass rates, and service adoption metrics into real-time dashboards, providing engineering management visibility into ROI progress and migration timeline accuracy.

Sample progress tracking implementation

Key performance indicators tracked

- Service decomposition progress: Services in production vs. planned timeline

- Code migration velocity: Lines of code migrated per sprint with trend analysis

- DORA metrics integration: Deployment frequency, lead time, change failure rate, recovery time
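
A few of these indicators can be computed from very little data. The sketch below assumes deployment records with a day number and a failure flag; the record layout and the name `migration_kpis` are hypothetical, and lead time and recovery time are omitted for brevity.

```python
def migration_kpis(planned_services, live_services, deployments):
    """Summarize decomposition progress and two DORA-style metrics.

    deployments is a list of dicts with "day" (int) and "failed" (bool).
    """
    total = len(deployments)
    failures = sum(1 for d in deployments if d["failed"])
    days = {d["day"] for d in deployments}
    span = (max(days) - min(days) + 1) if days else 0
    return {
        "decomposition_progress": live_services / planned_services if planned_services else 0.0,
        "deployments_per_day": total / span if span else 0.0,
        "change_failure_rate": failures / total if total else 0.0,
    }

kpis = migration_kpis(
    planned_services=20,
    live_services=5,
    deployments=[
        {"day": 1, "failed": False},
        {"day": 2, "failed": True},
        {"day": 4, "failed": False},
        {"day": 4, "failed": False},
    ],
)
```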

15. Post-Migration Drift Detection & Tech-Debt Radar

AI continuously monitors for anti-patterns like tight coupling and schema drift, flagging technical debt accumulation early to prevent architecture degradation after successful migration completion.

Example drift detection implementation
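
Coupling drift can be sketched as a comparison between an approved set of service-to-service calls and the calls actually observed, for example from distributed traces. The pair format and the name `detect_coupling_drift` are hypothetical.

```python
def detect_coupling_drift(allowed, observed):
    """Report calls seen in production that are absent from the approved baseline.

    allowed and observed are sets of (caller, callee) pairs.
    """
    new_edges = sorted(observed - allowed)
    # Fan-out per caller gives a rough signal of growing coupling.
    fan_out = {}
    for caller, _ in observed:
        fan_out[caller] = fan_out.get(caller, 0) + 1
    return {"new_edges": new_edges, "fan_out": fan_out}

allowed = {("checkout", "billing"), ("billing", "ledger")}
observed = {("checkout", "billing"), ("billing", "ledger"), ("checkout", "ledger")}
drift = detect_coupling_drift(allowed, observed)
```

Each new edge is a candidate for the tight-coupling category below; whether it is accepted into the baseline or refactored away remains a human decision.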

Technical debt categorization

- Critical (address within 1 sprint): Security vulnerabilities, data consistency violations

- High (address within 1 month): Performance degradation, tight coupling introduction

- Medium (address within 1 quarter): Code quality issues, documentation gaps

Decision Framework

Choose AI-driven tactics based on migration constraints:

If codebase >500K LOC and team >20 engineers:

- Prioritize tactics 1-4 (domain modeling, dependency mapping, Strangler Fig candidate extraction, data access pattern analysis)

If production uptime SLA >99.9%:

- Focus on tactics 7, 9, 10 (parallel run, canary deployment, performance regression detection)

If team has <6 months microservices experience:

- Emphasize tactics 13-15 (developer onboarding, progress tracking, drift detection)

If regulatory/compliance requirements exist:

- Prioritize tactics 6, 11, 12 (contract testing, CI/CD automation, observability)

What You Should Do Next

AI acceleration transforms monolith decomposition from archaeological excavation into systematic engineering.

Implement automated dependency mapping (tactic #2) on a 10K LOC subset of the codebase using the provided Python script, measure analysis completion time, and establish baseline coupling metrics for migration planning.

Written by

Molisha Shah

GTM and Customer Champion