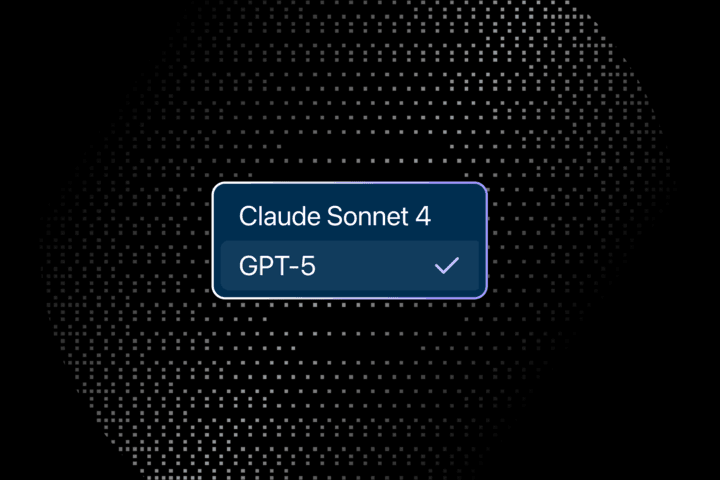

With today’s GPT-5 announcement, we’re excited to bring the model into Augment. Beginning today, and rolling out gradually to everyone, you can choose between two models in Augment:

- Claude Sonnet 4 – still the default

- OpenAI GPT-5 – now available via the new model picker

This is the first time we’ve run two models side by side in production. It follows weeks of controlled internal testing — the closest head-to-head we’ve ever seen.

Model Comparison: Sonnet 4 vs. GPT-5

We spent the past few weeks testing both models on the same set of coding tasks: single-file edits, multi-file refactors, test generation, and bug fixes across large repositories.

⚠️ Note: These tests used Claude Sonnet 4 with reasoning mode disabled. We’re planning a follow-up comparison with reasoning mode enabled, which may impact relative performance on multi-step or large-context tasks.

| Metric / Dimension | Claude Sonnet 4 | GPT-5 |
|---|---|---|
| Preference rate* | ~44% | ~47% |
| Tie rate | 4% | 4% |
| Single-file edits | More direct; fewer tangential suggestions | Occasionally verbose; more context framing |
| Multi-file changes | Handles well but sometimes misses cross-file dependencies | Stronger cross-file reasoning; better dependency resolution |
| Refactor complexity | Faster on small/mid-size changes | Handles larger changes with more caution and explicit validation |
| Code quality comments | Concise, focused on the main change | More thorough; includes edge-case coverage |
| Failure modes | Occasional under-specification on complex changes | Occasional over-explanation and slower iteration |

*Preference rate indicates how often users preferred one model’s output over the other when shown both responses to the same prompt and code state.
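As a rough illustration of how a preference rate of this kind can be tallied, here is a minimal Python sketch. It is not the harness used for these tests: the function name `preference_rates`, the vote labels, and the sample votes are all hypothetical.

```python
from collections import Counter


def preference_rates(votes):
    """Return each label's share of all side-by-side comparisons (0.0 to 1.0)."""
    if not votes:
        raise ValueError("need at least one comparison")
    counts = Counter(votes)
    total = len(votes)
    return {label: count / total for label, count in counts.items()}


# Hypothetical votes, one per comparison. Illustrative only, not test data.
votes = ["gpt-5", "sonnet-4", "gpt-5", "tie", "sonnet-4", "gpt-5", "sonnet-4", "gpt-5"]
rates = preference_rates(votes)

for label, share in sorted(rates.items()):
    print(f"{label}: {share:.1%}")
```

Each vote records which response a rater preferred for one prompt and code state, with `tie` as its own label, so the shares can be read the same way as the first two rows of the table.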

Observations From Testing

Both models perform as excellent coding agents within Augment’s product, with different tradeoffs.

- Speed vs. Thoroughness – Sonnet 4 returns faster, more direct responses and is more likely to make assumptions in order to finish the task sooner. GPT-5 takes longer and makes more tool calls, but surfaces more detailed reasoning and is more likely to ask clarifying questions when something is ambiguous.

  - Use Sonnet for: quicker answers, more targeted edits, or when speed and decisiveness matter.

  - Use GPT-5 for: complex debugging, cross-file refactors, or when you want caution, completeness, or thoroughness.

- Consistency Across Tasks – Neither model won outright. Tester preferences clustered clearly: some preferred Sonnet for speed, while others chose GPT-5 for completeness.

  - Use Sonnet for: fast iteration and review cycles.

  - Use GPT-5 for: writing robust code with edge-case handling.

- Scaling With Context – GPT-5 performed better in large-context scenarios, especially when changes spanned multiple files and required understanding project-wide constraints.
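The guidance above can be written down as a simple rule of thumb. The Python sketch below is only an illustration of that reasoning, not how Augment chooses or routes models; the `Task` fields and the `suggest_model` function are hypothetical names, and letting an explicit speed preference win is an assumption.

```python
from dataclasses import dataclass

SONNET_4 = "Claude Sonnet 4"
GPT_5 = "GPT-5"


@dataclass
class Task:
    """Hypothetical description of a coding task (illustrative fields only)."""
    files_touched: int = 1
    ambiguous_requirements: bool = False
    needs_edge_case_coverage: bool = False
    prefer_speed: bool = False


def suggest_model(task: Task) -> str:
    """Map the observations above onto a model suggestion."""
    # Assumption: an explicit wish for fast iteration overrides everything else.
    if task.prefer_speed:
        return SONNET_4
    # Cross-file work, ambiguity, and edge-case coverage favored GPT-5 in testing.
    if (
        task.files_touched > 1
        or task.ambiguous_requirements
        or task.needs_edge_case_coverage
    ):
        return GPT_5
    # Otherwise stay with the default.
    return SONNET_4


print(suggest_model(Task(files_touched=1)))
print(suggest_model(Task(files_touched=6, needs_edge_case_coverage=True)))
```

The default branch returns Sonnet 4 to mirror its role as the default model; any real decision would also depend on the repository and on personal preference.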

Why We’re Shipping a Picker

We’ve long said we wouldn’t ship a model picker — and for good reason. Our goal has always been to abstract away the complexity of LLMs and let users focus on getting work done, not choosing engines.

However, for the first time, we have two models, Claude Sonnet 4 and GPT-5, that both deliver high-quality outputs while occupying different points on the latency-vs-quality spectrum. Rather than declaring a “winner,” we’re giving you a choice where it matters:

- Thoroughness vs. speed – Some users prefer precision and edge case coverage; others want to iterate quickly. Now you can choose based on the task.

- Fallback resilience – If one provider experiences latency or quality drift, you can switch models with zero workflow changes.

- Signal for tuning – Seeing how and when users switch gives us valuable insight for future routing, prompt optimization, and agent behavior.

What’s Next

We’ll be monitoring:

- Usage distribution between models

- Task types where GPT-5 adoption spikes

- Latency trends and failure modes over time
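To make the first two items concrete, here is a minimal Python sketch of tallying model usage per task type. It assumes a hypothetical event log of `(task_type, model)` pairs; the function name `usage_share_by_task`, the task labels, and the sample events are illustrative and do not describe Augment's telemetry.

```python
from collections import Counter, defaultdict


def usage_share_by_task(events):
    """Return {task_type: {model: share}} from (task_type, model) pairs."""
    counts = defaultdict(Counter)
    for task_type, model in events:
        counts[task_type][model] += 1
    return {
        task_type: {
            model: n / sum(models.values()) for model, n in models.items()
        }
        for task_type, models in counts.items()
    }


# Hypothetical events. Illustrative only, not real usage data.
events = [
    ("bug_fix", "GPT-5"),
    ("bug_fix", "GPT-5"),
    ("bug_fix", "Claude Sonnet 4"),
    ("bug_fix", "GPT-5"),
    ("single_file_edit", "Claude Sonnet 4"),
    ("single_file_edit", "Claude Sonnet 4"),
]
shares = usage_share_by_task(events)

for task_type, models in shares.items():
    for model, share in models.items():
        print(f"{task_type:>16}  {model:<16} {share:.0%}")
```

Grouping by task type before computing shares is what makes a spike in one category visible even when the overall split between models stays flat.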

Sonnet 4 remains Augment’s default. GPT-5 is there when you want a different approach. Both will keep evolving — and your feedback will be valuable in shaping the next round of tuning.

Written by

Molisha Shah

GTM and Customer Champion