The Problem: Manual Ticket Management at Scale

Our team was spending too much time manually assigning and labelling tickets - several hours daily just triaging customer feedback instead of solving actual problems. Raw customer reports were often vague ("the extension doesn't work"), contained multiple unrelated issues, or were duplicates of existing tickets. With inconsistent labelling and poor organization, tickets were getting misrouted and duplicated, leading to slow response times while engineers burned cycles on categorization rather than building solutions.

Augment: More Than Just a Coding Assistant

We recently launched Augment's CLI agent - a new capability that extends our AI coding assistant beyond the IDE into full workflow automation. What we needed wasn't just text analysis - we needed something that could understand how customer issues connected to our code and to recent changes in it. The CLI agent brings the same codebase awareness as our IDE assistant, through our context engine, so it understands not just what customers are saying, but how their issues relate to our actual implementation.

More importantly, this new CLI agent has built-in tool integration with systems like Linear, GitHub, and Confluence. This means it can not only analyze tickets with full context from our codebase and documentation, but also take action - updating labels, creating sub-tickets, posting responses, and moving items through workflows. It's the difference between getting AI recommendations and having AI actually do the work.

The Solution: A 3-Agent Pipeline

Instead of building complex ML classifiers or rule-based systems, we used Augment's CLI agent to create three specialized AI agents that work together:

1. Labelling Agent

The first agent transforms messy customer feedback into clean, structured tickets. It rewrites unclear descriptions into actionable problem statements and splits tickets containing multiple issues into separate, focused tickets.

For example, it might take a vague title like "Login broken" and rewrite it as "Google SSO authentication fails in VS Code" while preserving the customer's original description. The agent applies consistent labels for bug type, product area, and priority, giving us the structure we desperately needed.

2. Support Agent

This agent tackles the technical support workload by researching similar historical tickets and diving into our codebase to understand the underlying issues. We built in a conservative approach - it only responds when it has high confidence in the solution. When it does respond, it provides step-by-step solutions with version-specific guidance. It's better to skip uncertain cases than provide speculative solutions that might send customers down the wrong path.
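The confidence check described above can live entirely in the support prompt. If you would rather enforce it explicitly in the script around the agent, here is a minimal sketch. It assumes, hypothetically, that each draft reply comes with a numeric confidence between 0 and 1; `should_respond`, `SUPPORT_MIN_CONFIDENCE`, and the 0.85 default are illustrative names and values, not part of Augment's CLI.

```bash
#!/usr/bin/env bash
# Hypothetical confidence gate for the support stage.
# Assumes the agent's draft reply comes with a numeric confidence in [0, 1];
# neither that field nor this threshold is part of Augment's CLI.
set -euo pipefail

# Illustrative default; tune it against replies you have reviewed by hand.
SUPPORT_MIN_CONFIDENCE="${SUPPORT_MIN_CONFIDENCE:-0.85}"

# should_respond CONFIDENCE
# Exit status 0 means "post the reply"; 1 means "skip the ticket and leave it
# for a human". Non-numeric or missing input evaluates to 0 in awk, so it is skipped.
should_respond() {
  awk -v c="${1:-}" -v t="$SUPPORT_MIN_CONFIDENCE" 'BEGIN { exit !(c + 0 >= t + 0) }'
}
```

A wrapper would then post the draft only inside `if should_respond "$confidence"; then ... fi`, so anything below the bar falls through to doing nothing, which matches the skip-when-unsure policy.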

3. Triage Agent

The final agent focuses on volume reduction and prioritisation. It detects and closes duplicate tickets, consolidates their useful information into a single master ticket, and links related tickets to one another. It also assigns priorities using a consistent tier system (from 🔥 Urgent down to 📝 Low priority).

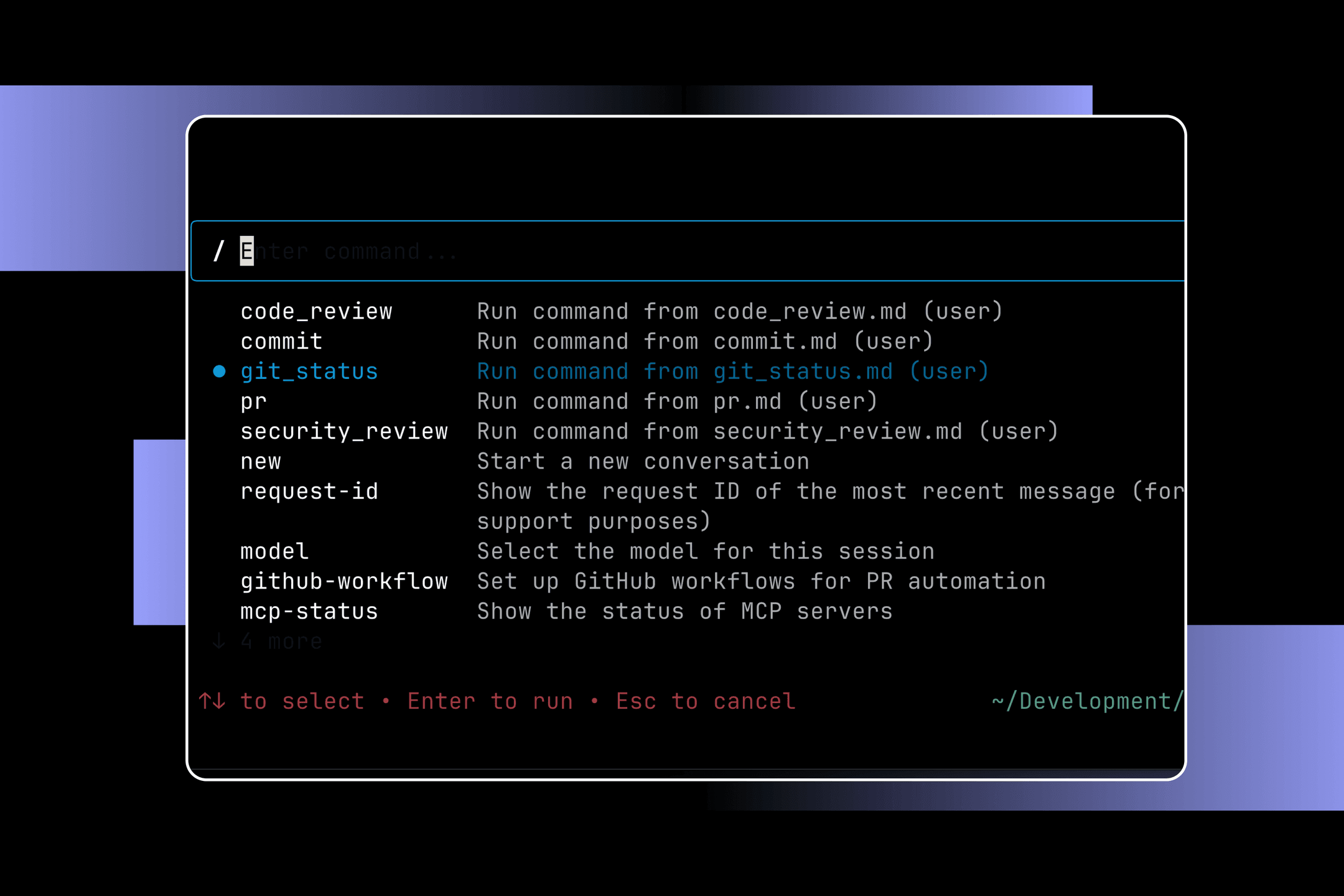

Implementation: Simple CLI Commands

Each agent runs with a straightforward command - these are simplified versions of our actual prompts, but they give you a sense of what we're doing:

    # Labelling automation (runs hourly)
    auggie --print --instruction-file labelling-prompt.md

    # Support automation (runs hourly)
    auggie --print --instruction-file support-prompt.md

    # Triage automation (runs daily)
    auggie --print --instruction-file triage-prompt.md

The agents use Augment's built-in Linear integration, codebase search, and historical context to make informed decisions.

We set up GitHub Actions workflows to call these agents automatically - labelling and support run hourly, while triage runs daily. The pipeline processes tickets in sequence, ensuring each stage has clean input from the previous one.
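A scheduled job mostly needs to run the right commands in the right order. The sketch below is one possible way to wrap the three `auggie` commands for such a job, not our actual workflow. The script name `run-agents.sh`, the `hourly`/`daily` modes, and the `AUGGIE` override (handy for dry runs) are hypothetical, and it assumes the instruction files sit in the working directory.

```bash
#!/usr/bin/env bash
# run-agents.sh (hypothetical): wraps the three auggie invocations so a
# scheduler can call `run-agents.sh hourly` or `run-agents.sh daily`.
set -euo pipefail

# Run one stage; AUGGIE can point at another command for dry runs.
run_stage() {
  echo "running stage: $1" >&2
  "${AUGGIE:-auggie}" --print --instruction-file "$1"
}

run_pipeline() {
  case "${1:-}" in
    hourly)
      # Labelling goes first so support works from cleaned-up tickets;
      # if labelling fails, support is skipped instead of seeing raw input.
      run_stage labelling-prompt.md || return
      run_stage support-prompt.md
      ;;
    daily)
      run_stage triage-prompt.md
      ;;
    *)
      echo "usage: run-agents.sh hourly|daily" >&2
      return 2
      ;;
  esac
}

# Dispatch only when a mode is given, so the file can also be sourced.
if [[ $# -gt 0 ]]; then
  run_pipeline "$@"
fi
```

A GitHub Actions workflow could then call `./run-agents.sh hourly` from a `schedule` trigger with an hourly cron such as `0 * * * *`, and `./run-agents.sh daily` from a once-a-day cron.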

Results: Dramatic Improvement

We've seen meaningful improvements across the board. Our daily triage time dropped from several hours to just minutes, and tickets that the support agent handles get responses within hours instead of days. The quality gains have been equally important - labelling is now consistent across all tickets, and our duplicate detection catches far more redundant issues than manual review ever did.

The biggest change has been in how our team operates. Instead of spending time on routine categorization, we focus on complex decisions and strategic problem-solving. We now have a consistent ticket structure and better visibility into emerging customer issues. Our engineering team gets clear, organized insights into user problems, which guide product decisions more effectively than the scattered feedback we had before.

Key Lessons Learned

1. Start Conservative: High confidence thresholds prevent bad automation

2. Preserve Context: Never lose the customer's original voice

3. Human-AI Collaboration: Augment expertise, don't replace it

4. Iterate Quickly: We started with one agent and evolved to a three-agent pipeline

Beyond Feedback: The Bigger Picture

We're already applying this approach to other SDLC pain points. Release management is an obvious next target - agents could monitor PRs and wiki pages to track feature progress and automatically identify when features are actually released to users. Documentation is another huge opportunity - imagine agents that understand code changes and can translate them into user-facing usage guides and API documentation without human intervention. We're also looking at incident response, where agents could perform initial triage and root-cause analysis to automatically assess severity and notify the right teams.

Getting Started

1. Identify your manual time sinks (probably not coding!)

Look for repetitive tasks that eat up hours but require understanding context and making decisions. Customer support, ticket triage, documentation updates, and release tracking are perfect candidates - they need human-like reasoning but don't need human creativity. Ask your team: "What takes forever but feels like it shouldn't?"

2. Start with one simple AI automation using existing tools

Don't build custom infrastructure - use AI tools you already have access to. If you're using Augment, try the CLI agent with a simple prompt. The initial goal is proving that AI can understand your context and make good decisions, not building the perfect system.

3. Measure before and after to prove value

Track concrete metrics before you start: time spent, error rates, team satisfaction. Even rough estimates help. After automation, measure the same things. You'll need the data to support expanding the approach.

4. Iterate based on real usage patterns

Your first attempt won't be perfect, and that's fine. Watch how the automation actually gets used, where it breaks down, and what edge cases emerge. We started with one agent and evolved to three based on what we learned. Expect to refine your approach as you go.

The future of software development isn't just about writing code faster - it's about automating as many parts of the lifecycle as we can so humans can focus on what they do best: solving complex problems and making strategic decisions.

Written by

Andre Chang

Andre is a Solutions Architect at Augment Code. He previously spent 25 years as a software engineer in a variety of enterprises, mostly working on high-performance real-time systems. When not at the computer, you can find him in the backcountry or traveling to different corners of the world. He does not have a cat.